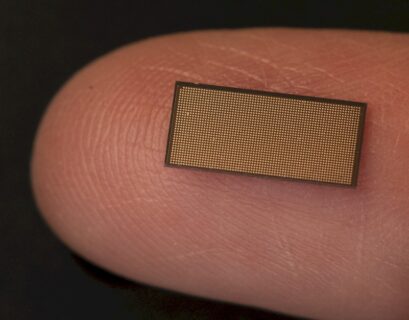

A cutting-edge new device has the potential to accelerate AI training at an unprecedented pace, presenting itself as either a remarkable tool with immense advantages or a societal menace that exclusively benefits those in positions of power. The Wafer Scale Engine 3 (WSE-3) chip, the powerhouse behind the Cerebras CS-3 AI supercomputer, achieves a remarkable peak performance of 125 petaFLOPS, establishing itself as the fastest AI chip ever developed by Cerebras Systems. Notably, it boasts an exceptional level of flexibility.

Training an AI model typically demands an immense volume of data before it can generate an endearing yet slightly uncanny image, such as a small cat rousing its owner, a process that engulfs over 100 families in its wake. However, this innovative device, accompanied by its associated computer components, significantly expedites and enhances the efficiency of the training process.

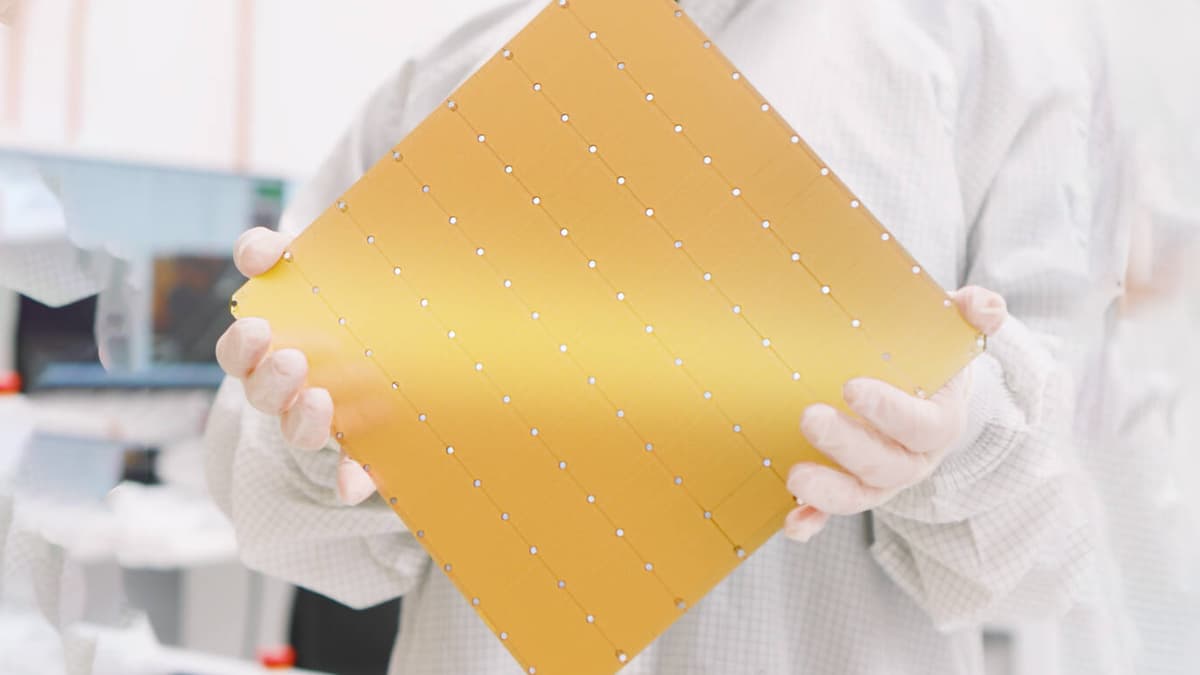

Each WSE-3 unit, approximately the size of a standard pie box, houses an astonishing four trillion transistors, delivering fifty percent greater efficiency compared to the company’s previous model (which also held the previous world record) at an equivalent cost and power consumption. When integrated with the CS-3 system, these units demonstrate the capability to provide the computational power of an entire room filled with servers within a single compact unit resembling a mini-fridge.

According to Cerebras, the CS-3 is equipped with 900,000 AI cores and 44 GB of on-device memory, delivering a maximum Artificial Intelligence performance of 125 petaFLOPS. Theoretically, this positions it among the top 10 supercomputers globally, although definitive performance comparisons are pending benchmark evaluations.

For data storage requirements, users can select from external memory capacities of 1.5 TB, 12 TB, or an extensive 1.2 Petabytes (1,200 TB). The CS-3 has the capacity to train AI models incorporating up to 24 trillion parameters – a substantial leap considering that most current AI models operate in the billions of parameters range, with projections suggesting that GPT-4 could reach around 1.8 trillion. Cerebras asserts that the CS-3 can effortlessly train a one-trillion-parameter model, a task that conventional GPU-based systems struggle with.

The scalable design of the WSE-3 chips’ wafer production process enables the CS-3 to be expanded, with the potential to cluster up to 2,048 units into an incomprehensibly powerful supercomputer. While the leading supercomputers today operate slightly above one exaFLOP, this configuration could support up to 256 exaFLOPS. This level of computational prowess, as per the company’s claims, would facilitate the training of a Llama 70B model from scratch within a single day.

AI models are progressing at an alarming pace, and advancements like this technology are poised to elevate the standards even further. Regardless of one’s stance, it appears inevitable that AI will permeate various aspects of both professional roles and personal interests at an accelerated rate.