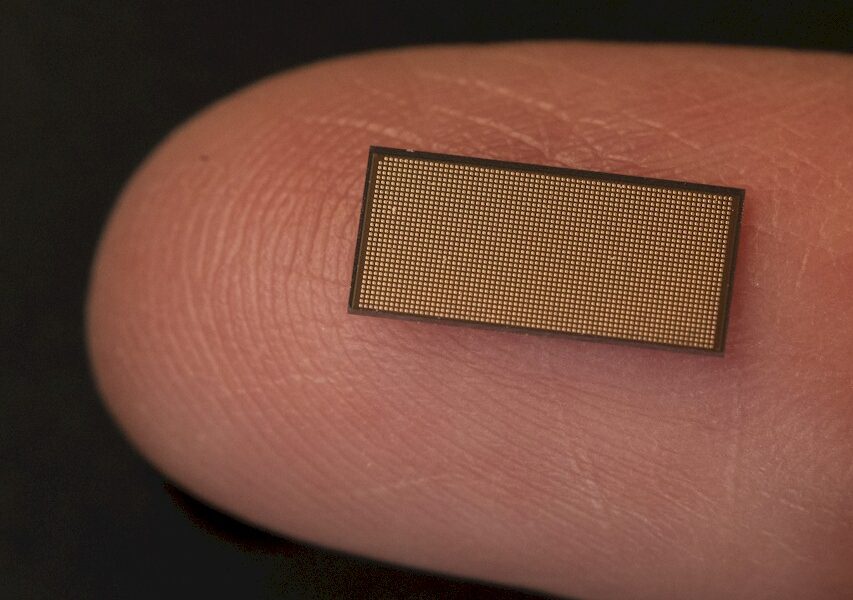

Not many devices in the datacenter have been etched with the Intel 4 process, which is the chip maker’s spin on 7 nanometer extreme ultraviolet immersion lithography. But Intel’s Loihi 2 neuromorphic processor is one of them, and Sandia National Laboratories is firing up a supercomputer with 1,152 of them interlinked to create what Intel is calling the largest neuromorphic system ever assembled.

With Nvidia’s top-end “Blackwell” GPU accelerators now pushing up to 1,200 watts in their peak configurations and requiring liquid cooling, and with other accelerators no doubt following as their sockets inevitably get bigger while Moore’s Law scaling for chip making slows, this is a good time to take a step back and see what can be done with a reasonably scaled neuromorphic system. Such a system not only has circuits that act more like the neurons in real brains, but also burns orders of magnitude less power than the XPUs commonly used in the datacenter for all kinds of compute.

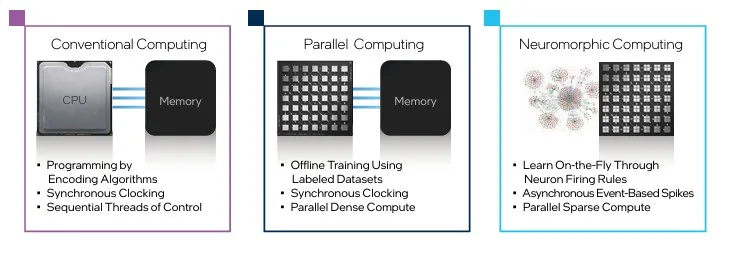

Next-generation computing architectures are something we obviously keep an eye on here at The Next Platform, just in case a particular flavor of dataflow engine, neuromorphic processor, or quantum computer advances sufficiently to work at scale on real-world workloads. We have been following the TrueNorth effort by IBM, which is derived from work that Big Blue did with the US Defense Advanced Research Projects Agency, as well as the Akida neuromorphic processor from BrainChip, the memristor-based and synaptic-inspired storage of Knowm, and of course the Loihi 1 and Loihi 2 family of chips from Intel.

It is the Loihi 2 neuromorphic processor that is at the heart of the new Hala Point system, which Sandia is putting through its paces to see how it can be applied to various artificial intelligence workloads and how it compares to AI approaches based on CPUs, GPUs, and other compute engines. Sandia likes to test out new architectures, given that this is one of the mandates of the national HPC labs around the world.

The first Loihi neuromorphic chip from Intel Labs, the research arm of the chip maker, was launched in September 2017, and in July 2019 it appeared in the Pohoiki Beach system, which linked 64 of these Loihi 1 processors to each other. At the time, Intel said that the Loihi 1 chip, which implements a spiking neural network architecture like the fatty tissue you carry around in your noggin, was roughly equivalent to 130,000 neurons and 128 million synapses. Intel eventually scaled up to a system called Pohoiki Springs, which linked 768 Loihi chips together and provided over 100 million neurons for AI models to make use of. (A human brain has about 100 billion neurons, or a factor of 1,000X more, just for context.)
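For readers who have not met a spiking neuron before, here is a minimal sketch of the textbook discrete-time leaky integrate-and-fire (LIF) model, the basic behavior a spiking neural network is built from. It is an illustration under stated assumptions, not the neuron model Intel implements in Loihi silicon; the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are hypothetical choices for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    Each step, the membrane potential decays by the factor `leak`, adds that
    step's input, and, if it reaches `threshold`, the neuron spikes and its
    potential returns to `reset`. Returns the list of time steps with a spike.
    """
    v = reset
    spike_times = []
    for t, current in enumerate(input_current):
        v = leak * v + current      # leak, then integrate this step's input
        if v >= threshold:          # fire once the threshold is crossed
            spike_times.append(t)
            v = reset               # reset the potential after the spike
    return spike_times

print(simulate_lif([0.3] * 10))  # [3, 7]
```

With a constant input of 0.3, the potential climbs for a few steps, crosses the threshold, fires, and resets, so the neuron communicates through the timing and frequency of discrete spikes rather than a continuous output value.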

The Pohoiki Beach and Pohoiki Springs machines were made available out of Intel Labs to hundreds of AI researchers but have not been commercialized. Neither will the Hala Point system, which is based on the second-generation Loihi 2 neuromorphic processor that Intel announced in September 2021. But it is at least going into Sandia, which has created a tool called Whetstone that can convert various kinds of convolutional neural networks running on CPUs and GPUs into spiking neural networks running on machines like those based on the Loihi and Loihi 2 chips.

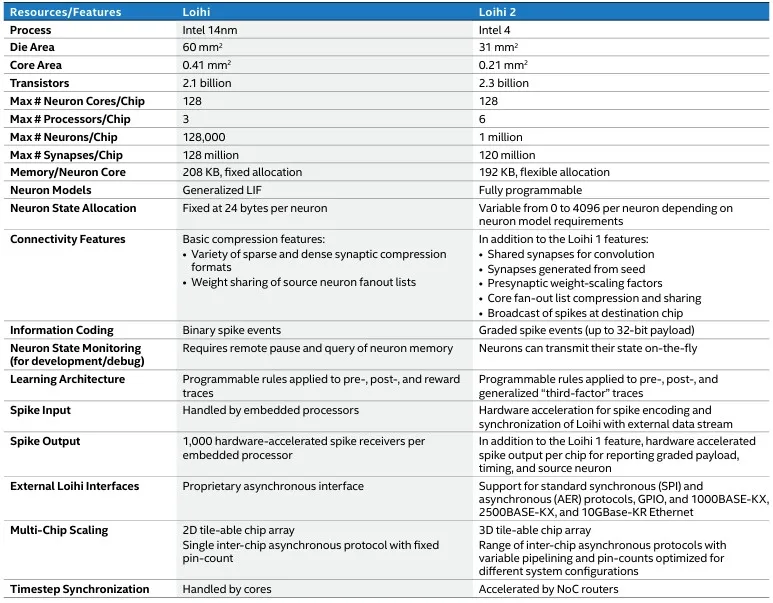

By shifting to the Intel 4 process, Intel made the Loihi 2 chip roughly half the area of the Loihi 1 while keeping the same number of neuron cores, packing in 8X more neurons at 1 million, and holding nearly the same number of synapses (123 million) as the Loihi 1. Here are the speeds and feeds of the two chips:

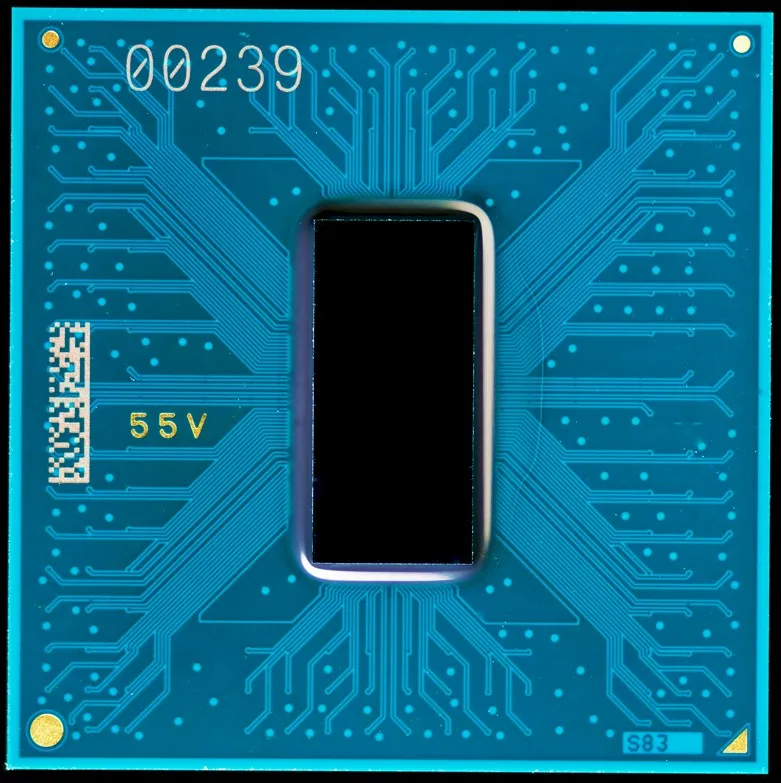

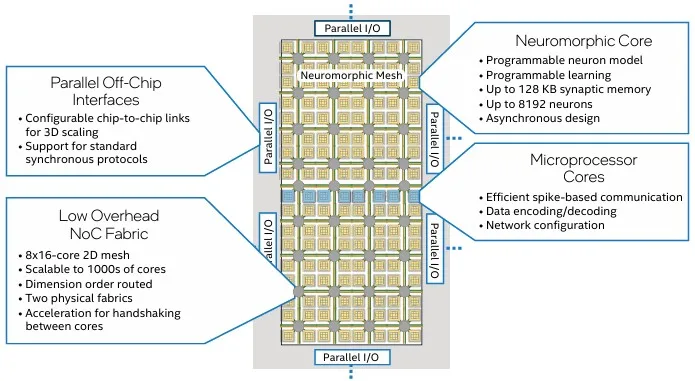

And here is a block diagram of the Loihi 2 chip for your review:

The number of embedded processor cores on the Loihi 2 device, which are programmed using C or Python and are used to encode and decode the data used by the spiking neural network software, doubled from three on the Loihi 1 to six.
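The article does not say which encoding scheme those embedded cores use, so the following is only a sketch of one common approach, rate coding, in which a value is carried by how often a neuron spikes. The functions `rate_encode` and `rate_decode` are hypothetical names for illustration, and nothing here reflects Intel's actual firmware.

```python
import random

def rate_encode(value: float, steps: int, rng: random.Random) -> list[int]:
    """Turn a value in [0, 1] into a spike train: spike with probability `value` per step."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    return [1 if rng.random() < value else 0 for _ in range(steps)]

def rate_decode(spike_train: list[int]) -> float:
    """Estimate the encoded value as the fraction of steps that carried a spike."""
    return sum(spike_train) / len(spike_train)

rng = random.Random(42)            # fixed seed so the run is repeatable
train = rate_encode(0.25, 1_000, rng)
print(rate_decode(train))          # close to 0.25, but not exactly equal
```

The decoded estimate tightens as `steps` grows, at the cost of spending more time steps on every value, which is the basic trade-off of rate coding.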

Which brings us to Hala Point. With 1,152 of these Loihi 2 chips, the cluster of machines now at Sandia has 140,544 neuron cores and 2,304 host X86 cores. Those neuron cores implement 1.15 billion neurons, which is about 1 percent of what the human brain has and roughly equivalent to the brain of an owl. The Hala Point machine has a total of 138.2 billion synapses.

That is roughly 10X more brain power than the Pohoiki Springs machine that researchers around the world have been playing with for the past two years.
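The neuron figures in the two paragraphs above are straight multiplication from the per-chip numbers. This sketch uses illustrative constant names and only the figures quoted in the text to reproduce the 1.15 billion neuron total, the roughly 1 percent share of a human brain, and the jump over Pohoiki Springs (which comes out at 11.5X against the round 100 million neuron figure).

```python
# Inputs taken from the figures quoted in the article.
HALA_POINT_CHIPS = 1_152
NEURONS_PER_LOIHI2 = 1_000_000          # Loihi 2 neurons per chip
HUMAN_BRAIN_NEURONS = 100_000_000_000   # about 100 billion
POHOIKI_SPRINGS_NEURONS = 100_000_000   # about 100 million

def neuron_totals(chips: int, neurons_per_chip: int) -> int:
    """Total neurons across a system of identical chips."""
    return chips * neurons_per_chip

total = neuron_totals(HALA_POINT_CHIPS, NEURONS_PER_LOIHI2)
pct_of_brain = 100 * total / HUMAN_BRAIN_NEURONS
vs_springs = total / POHOIKI_SPRINGS_NEURONS

print(f"{total:,} neurons")                      # 1,152,000,000 neurons
print(f"{pct_of_brain:.2f}% of a human brain")   # 1.15% of a human brain
print(f"{vs_springs:.1f}X Pohoiki Springs")      # 11.5X Pohoiki Springs
```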

There are a dozen cards in each Hala Point server node, and each card includes six Loihi 2 compute complexes with eight Loihi 2 chips each. That’s 48 Loihi 2 chips per card, which works out to 576 Loihi 2 chips in a 6U rack-mounted enclosure. So this supercomputer, which burns a mere 2,600 watts, fits in two of these enclosures, or 12U of a 42U rack.
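The packaging math above is a chain of multiplications. This short sketch, with illustrative constant names holding the figures from the text, checks that two such nodes hold all 1,152 chips in 12U.

```python
# Packaging figures from the article.
CARDS_PER_NODE = 12
COMPLEXES_PER_CARD = 6
CHIPS_PER_COMPLEX = 8
NODE_HEIGHT_U = 6
TOTAL_CHIPS = 1_152

chips_per_card = COMPLEXES_PER_CARD * CHIPS_PER_COMPLEX   # 48
chips_per_node = CARDS_PER_NODE * chips_per_card          # 576
nodes, leftover = divmod(TOTAL_CHIPS, chips_per_node)     # 2 nodes, 0 chips left over
rack_units = nodes * NODE_HEIGHT_U                        # 12U

print(chips_per_card, chips_per_node, nodes, leftover, rack_units)  # 48 576 2 0 12
```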

Hala Point is able to process 380 trillion synaptic operations per second and 240 trillion neuron operations per second, and it has an aggregate memory bandwidth of 16 PB per second. Running sparse deep neural networks at 8-bit data resolution, this 12U system can deliver the equivalent of 20 petaops of crunching at a power efficiency of 15 teraops per watt.

Why stop there? A mere 174 of these 6U enclosures, an 87X increase in computing power over the Hala Point system, would have the same number of “neurons” as the human brain.
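The 174-enclosure figure is a round-up division: 100 billion neurons divided by 576 million neurons per enclosure (576 Loihi 2 chips at 1 million neurons each) is about 173.6, and you cannot build a fraction of an enclosure. A quick sketch of that arithmetic, with illustrative constant names:

```python
import math

HUMAN_BRAIN_NEURONS = 100_000_000_000
NEURONS_PER_ENCLOSURE = 576 * 1_000_000   # 576 Loihi 2 chips x 1 million neurons
HALA_POINT_ENCLOSURES = 2

# Round up: a partial enclosure still has to be built.
enclosures = math.ceil(HUMAN_BRAIN_NEURONS / NEURONS_PER_ENCLOSURE)
scale_up = enclosures / HALA_POINT_ENCLOSURES

print(enclosures, scale_up)   # 174 87.0
```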

The fun bit is that the human brain burns about 20 watts, while those 174 enclosures would burn 226.2 kilowatts of juice, or about 11,310X that of the brain. Such a 100 billion neuron cluster of Loihi 2 chips would take up 25 racks, or 1.55 million cubic inches of space, compared to the 1,200 cubic inches of the average human brain. The brain is 128,900X more space efficient than such a cluster of Loihi 2 chips could be.
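The power and rack figures follow from the 174-enclosure count. This sketch reproduces them under one assumption the article does not spell out: each 6U enclosure draws half of Hala Point's 2,600 watts, or 1,300 watts. The constant names are illustrative.

```python
import math

ENCLOSURES = 174
WATTS_PER_ENCLOSURE = 2_600 / 2   # assumption: Hala Point's 2,600 W split evenly over two 6U enclosures
BRAIN_WATTS = 20
ENCLOSURE_U = 6
RACK_U = 42

cluster_watts = ENCLOSURES * WATTS_PER_ENCLOSURE        # 226,200 W
power_ratio = cluster_watts / BRAIN_WATTS               # 11,310X the brain
racks = math.ceil(ENCLOSURES * ENCLOSURE_U / RACK_U)    # 1,044U needs 25 racks

print(f"{cluster_watts / 1000:.1f} kW, {power_ratio:,.0f}X the brain, {racks} racks")
# 226.2 kW, 11,310X the brain, 25 racks
```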

Here’s the funner bit: If Moore’s Law could double chip density every two years without any effort, it would take only 34 years to match the brain on space efficiency and only 27 years to match it on energy efficiency. 3D stacking, which is absolutely possible with the Loihi chips, could perhaps shorten that time and make for a more reasonable compute complex.
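The Moore's Law arithmetic is a logarithm: if each two-year period halves the gap, closing a gap of R takes 2 × log2(R) years. This minimal sketch plugs in the two ratios from the text. It assumes, as the thought experiment does, that each doubling halves the energy gap as well as the space gap, which real process shrinks do not guarantee; `years_to_close_gap` is a hypothetical helper.

```python
import math

def years_to_close_gap(ratio: float, years_per_doubling: float = 2.0) -> float:
    """Years of idealized 2X-per-period scaling needed to shrink a gap of `ratio`."""
    return years_per_doubling * math.log2(ratio)

space_gap = 128_900   # brain vs. Loihi 2 cluster, space efficiency
energy_gap = 11_310   # brain vs. Loihi 2 cluster, energy efficiency

print(round(years_to_close_gap(space_gap)))    # 34
print(round(years_to_close_gap(energy_gap)))   # 27
```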

We have a more interesting question: What happens if you could make a simulated human brain with 1 trillion simulated neurons, or 10 trillion simulated neurons, instead of a real human brain with 100 billion real neurons? Would it be “smarter,” whatever that means?

If we survive this current extinction-level event, rest assured of one thing: Someone will try to answer those questions. And it may not take all that much money to do it; it certainly sounds a lot cheaper than trying with heaven only knows how many GPUs.