Music, often called the universal language, is a core element of every society and transcends cultural boundaries. Despite the vast diversity of cultural environments, people may share a common “musical instinct.”

A team of scientists at KAIST, supervised by Professor Hawoong Jung of the Physics Department, has used an artificial neural network model to show how musical instincts may emerge spontaneously in the human brain without explicit training.

Led by Dr. Gwangsu Kim of the Physics Department at KAIST (now at MIT’s Department of Brain and Cognitive Sciences) and Dr. Dong-Kyum Kim (now at IBS), the study was published in Nature Communications under the title “Spontaneous emergence of rudimentary music detectors in deep neural networks.”

Previous research has examined the similarities and differences in music across cultures in an effort to understand its universal origins. A study published in Science in 2019 found that music is present across ethnically diverse societies and shares similar rhythmic patterns and melodies. Neuroscientists have also identified the auditory cortex as the region of the human brain that processes musical information.

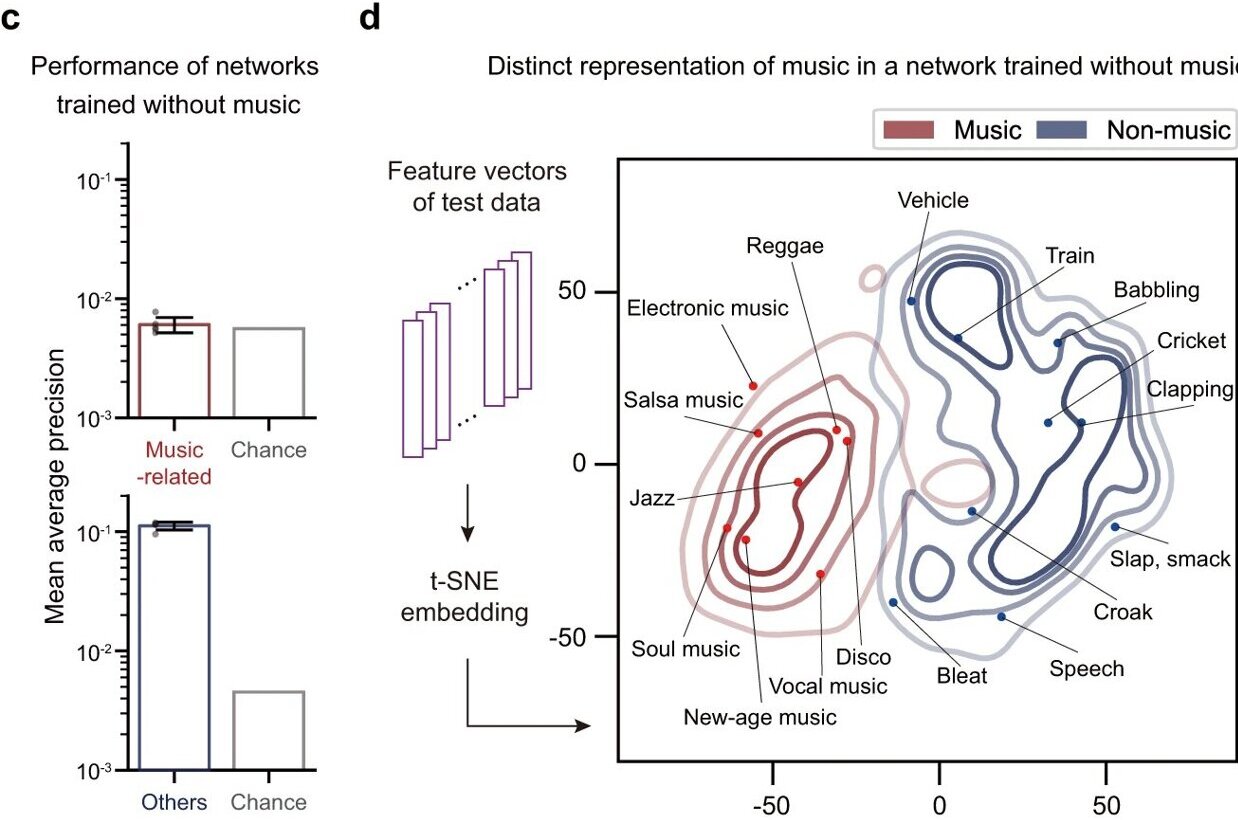

Professor Jung’s team used an artificial neural network model to show that cognitive functions for music can form spontaneously through the processing of natural sounds, without explicit musical training. Using AudioSet, a large-scale sound dataset provided by Google, the researchers trained the network to recognize a variety of sound types.

Interestingly, the researchers found that certain neurons in the network responded selectively to music. These neurons emerged spontaneously and showed minimal responses to non-musical sounds such as animal noises or mechanical sounds, but strong responses to a variety of music, both instrumental and vocal.
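To make the idea of a music-selective neuron concrete, the sketch below scores each unit by how much more strongly it responds to music than to other sounds and keeps the top-scoring units. It is a minimal illustration on synthetic data, not the study's actual procedure: the function names, the t-statistic-style index, and the 25% cutoff are all assumptions made for this example.

```python
import numpy as np

def selectivity_index(music_resp, other_resp):
    """Per-unit score: gap between mean responses, scaled by its standard error.

    Both inputs have shape (n_sounds, n_units). This index is an assumed,
    illustrative measure, not necessarily the one used in the study.
    """
    mean_gap = music_resp.mean(axis=0) - other_resp.mean(axis=0)
    spread = np.sqrt(
        music_resp.var(axis=0, ddof=1) / music_resp.shape[0]
        + other_resp.var(axis=0, ddof=1) / other_resp.shape[0]
    )
    return mean_gap / np.maximum(spread, 1e-12)

def top_selective_units(music_resp, other_resp, fraction):
    """Return indices of the most music-selective units (highest index first)."""
    index = selectivity_index(music_resp, other_resp)
    n_keep = max(1, int(round(fraction * index.size)))
    return np.argsort(index)[::-1][:n_keep]

# Synthetic responses: 200 sounds per category, 8 hypothetical units.
rng = np.random.default_rng(0)
music_resp = rng.normal(0.0, 1.0, size=(200, 8))
other_resp = rng.normal(0.0, 1.0, size=(200, 8))
music_resp[:, [2, 5]] += 3.0  # units 2 and 5 respond more strongly to music

selected = top_selective_units(music_resp, other_resp, fraction=0.25)
print(sorted(selected.tolist()))
```

In a real network, the two response matrices would come from a chosen layer's activations for music and non-music clips rather than from random numbers.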

These music-selective neurons showed response patterns similar to those observed in the auditory cortex of the human brain. Notably, they encoded the temporal structure of music: their responses decreased when music was cut into short segments and rearranged. This property held across genres, including classical, pop, rock, jazz, and electronic music.
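The rearrangement test can be pictured as cutting a waveform into equal-length segments and shuffling their order, which preserves the local sound content but destroys the longer temporal structure. The sketch below shows one way to do this with NumPy; the function name and segment length are hypothetical, and the study's exact scrambling procedure may differ.

```python
import numpy as np

def scramble_segments(signal, segment_len, rng):
    """Cut a 1-D signal into fixed-length segments and shuffle their order.

    Samples that do not fill a whole final segment are dropped.
    """
    n_segments = len(signal) // segment_len
    trimmed = signal[: n_segments * segment_len]
    segments = trimmed.reshape(n_segments, segment_len)
    order = rng.permutation(n_segments)  # random new order of the segments
    return segments[order].reshape(-1)

# A stand-in "waveform" of 12 samples, cut into 3 segments of 4 samples each.
rng = np.random.default_rng(0)
signal = np.arange(12)
scrambled = scramble_segments(signal, segment_len=4, rng=rng)
print(scrambled)
```

Shorter segments disrupt more of the temporal structure, so comparing a unit's response to the original and to progressively finer scrambles indicates how much that unit depends on the ordering of the sound over time.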

Additionally, suppressing the activity of these music-selective neurons significantly impaired the network’s ability to recognize other natural sounds. This implies that the neural function that processes music also helps process other sounds, suggesting that “musical ability” may be an instinct formed through evolutionary adaptation to better process natural sounds.
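Suppressing neurons in a model is commonly done by setting their activations to zero and re-measuring accuracy. The toy example below illustrates only that mechanic: the activations, the linear readout, and the choice of which units matter are all invented for the illustration and do not reproduce the study's network or results.

```python
import numpy as np

def ablate_units(activations, unit_ids):
    """Return a copy of the activations with the chosen units silenced."""
    silenced = activations.copy()
    silenced[:, unit_ids] = 0.0
    return silenced

def accuracy(activations, readout, labels):
    """Classification accuracy of a linear readout on the given activations."""
    predictions = (activations @ readout).argmax(axis=1)
    return float((predictions == labels).mean())

# Toy setup: 300 sounds, 3 classes, 6 hidden units.
rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=300)
activations = 0.1 * rng.normal(size=(300, 6))
activations[np.arange(300), labels] += 1.0  # units 0-2 carry the class signal

readout = np.zeros((6, 3))
readout[:3] = np.eye(3)  # the readout listens only to units 0-2

baseline = accuracy(activations, readout, labels)
ablated = accuracy(ablate_units(activations, [0, 1, 2]), readout, labels)
control = accuracy(ablate_units(activations, [3, 4, 5]), readout, labels)
print(baseline, ablated, control)
```

Silencing the informative units drops accuracy to roughly chance, while silencing the same number of uninformative units leaves it unchanged. Comparing against such a control group is what makes an ablation result interpretable.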

Professor Jung said, “Our findings indicate that evolutionary forces have contributed to establishing a universal framework for processing musical information across different cultures.” He added that the artificially built model, with its human-like musicality, could serve as a useful tool for applications such as AI music generation, music therapy, and research on musical cognition.

Acknowledging the study’s limitations, Professor Jung added, “This research does not delve into the subsequent developmental stages post-music learning, focusing instead on the foundational aspects of processing musical information during early development.”