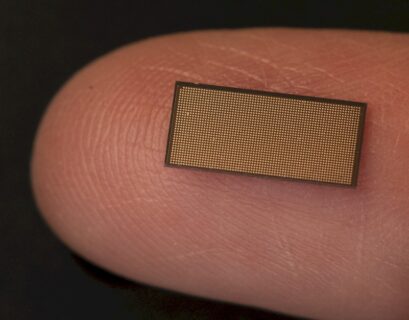

California-based AI company Groq has introduced the LPU Inference Engine, which outperformed competing inference providers in recent benchmarks of response speed. The engine is designed to address the compute density and memory bandwidth bottlenecks that limit demanding workloads such as Large Language Models (LLMs). Because it reduces the time needed to compute each word, it can generate sequences of text faster.

The Language Processing Unit (LPU), the core of Groq’s inference engine, is built to generate tokens at a high rate. In Groq’s internal testing, it achieved over 300 tokens per second per user with Meta AI’s Llama 2 (70B) LLM. In subsequent public benchmarking, Groq outperformed major cloud-based inference providers in both speed and efficiency.

Independent benchmarks by ArtificialAnalysis.ai measured Groq’s throughput at 241 tokens per second, well ahead of the other hosting services tested. According to Micah Hill-Smith, co-creator at ArtificialAnalysis.ai, this speed opens up new possibilities for large language models. Groq’s LPU Inference Engine also led on metrics such as total response time, throughput consistency, and latency vs. throughput, where the benchmark’s chart axes had to be extended to accommodate its results.
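To put the two throughput figures in perspective, the short sketch below converts tokens per second into the average time per token and the time to produce a full response. The 500-token response length is a hypothetical value chosen only for illustration, and the calculation assumes a steady generation rate and ignores time to first token, so it is a rough estimate rather than a measured result.

```python
def seconds_per_token(tokens_per_second: float) -> float:
    """Average time spent on one token at a given throughput."""
    return 1.0 / tokens_per_second


def generation_time(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady rate (time to first token ignored)."""
    return num_tokens / tokens_per_second


# Throughput figures quoted in the article, in tokens per second.
# The internal figure is reported as "over 300", so 300 is a lower bound.
REPORTED = {
    "Groq internal testing": 300.0,
    "ArtificialAnalysis.ai benchmark": 241.0,
}

# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 500

for label, tps in REPORTED.items():
    ms_per_token = seconds_per_token(tps) * 1000
    total_seconds = generation_time(RESPONSE_TOKENS, tps)
    print(
        f"{label}: {ms_per_token:.2f} ms/token, "
        f"{total_seconds:.2f} s for {RESPONSE_TOKENS} tokens"
    )
```

At these rates each token takes only a few milliseconds, which is why a response of several hundred tokens can finish in roughly two seconds.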

Jonathan Ross, CEO and founder of Groq, emphasized the company’s mission to democratize AI capabilities and empower the entire AI community. The benchmark validation of Groq’s LPU Inference Engine as the fastest option for running Large Language Models underscores its potential to accelerate development and innovation in AI applications. Approved users can get early access to the Groq API, which serves Llama 2 (70B), Mistral, and Falcon, to try the engine firsthand.