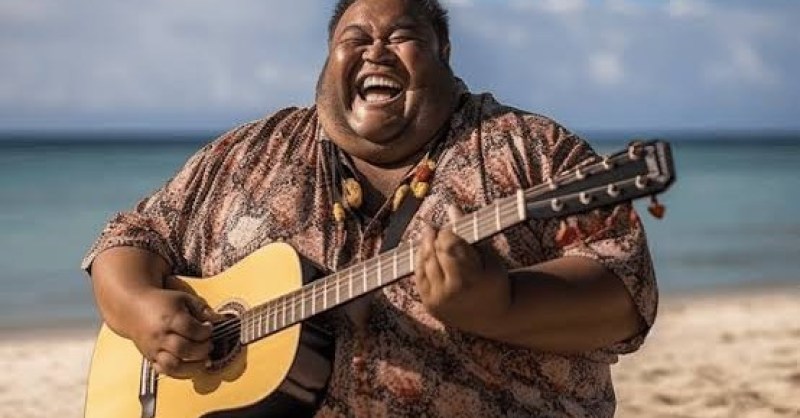

When you search for “Israel Kamakawiwoʻole” on Google, you won’t immediately come across one of the singer’s iconic album covers or a performance image with his ukulele. Instead, the top result displays a picture of a man seated on a beach, sporting a cheerful smile. This image is not a genuine photograph of the artist but an AI-generated fabrication. Clicking on it takes you to the Midjourney subreddit, where a series of such images was originally shared.

Ethan Mollick, a professor at Wharton specializing in AI research, first brought this discovery to light on X (formerly Twitter).

Upon closer examination, the artificial nature of the image becomes apparent. The simulated depth of field effect appears inconsistent, the shirt texture seems distorted, and notably, a finger is missing from the man’s left hand. Despite the advancements in AI-generated visuals, these discrepancies make such creations relatively easy to distinguish upon scrutiny.

The concern is that these fabricated images surface prominently in search results for a well-known individual without any watermark or other indicator of their AI origin. While Google does not explicitly vouch for the authenticity of its image search results, the lack of transparency in this instance is alarming.

Several plausible explanations exist for this specific occurrence. The Hawaiian singer, commonly referred to as Iz, passed away in 1997, and Google’s algorithm tends to prioritize serving up-to-date information to users. With limited recent coverage or discussion of Iz, it is understandable that the algorithm seized upon this newer content. Although the impact on Iz may seem negligible, the implications could be far more severe in other contexts.

Even if such occurrences are not widespread in search results, this incident underscores the need for Google to establish guidelines in this regard. At the very least, AI-generated images should be clearly identified to prevent misinformation. Giving users the option to filter out such content automatically would be a step in the right direction. Nevertheless, given Google’s vested interest in AI-generated content, there are apprehensions that it may choose to integrate such content without explicit labeling.