Games have a long history of serving as benchmarks for progress in artificial intelligence (AI). Methods based on game theory have shown promise in certain imperfect-information poker variants, while search-and-learning approaches have performed strongly in many perfect-information games. Collaborating with EquiLibre Technologies, Sony AI, Amii, and Midjourney, researchers from Google DeepMind have introduced “Student of Games” (SoG), a general-purpose algorithm that unifies these earlier lines of work. By combining guided search, self-play learning, and game-theoretic reasoning, SoG is a significant step towards general algorithms that adapt to arbitrary game environments, and it shows strong empirical performance in large perfect and imperfect information games. The researchers show that SoG is sound: it converges towards perfect play as available computation and approximation capacity increase. Reported results include strong performance in chess and Go, beating the strongest openly available agent in heads-up no-limit Texas hold’em poker, and defeating the state-of-the-art agent in Scotland Yard, an imperfect information game that illustrates the value of guided search, learning, and game-theoretic reasoning.

Machines have already been trained to master individual board games to the point of surpassing human players, a sign of how far artificial intelligence has progressed. This recent study is presented as a significant stride towards artificial general intelligence, in which computers take on tasks previously considered beyond their reach.

Unlike conventional game-playing programs tailored to a single game such as chess, the new system is designed to compete across several different types of games.

SoG: Unveiling the Power of “Student of Games”

SoG, or “Student of Games,” has practical value because it combines search, learning, and game-theoretic reasoning in a single algorithm. It pairs growing-tree counterfactual regret minimization (GT-CFR) search with counterfactual value-and-policy networks (CVPNs) trained through self-play, and it is a sound algorithm for both perfect and imperfect information games. As computational resources grow, SoG produces increasingly accurate approximations of minimax-optimal strategies. In Leduc poker, for example, additional search at test time yields better approximations, which sets SoG apart from pure RL systems that do not use search.
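To make the idea of approximating a minimax-optimal strategy more concrete, here is a minimal sketch of regret matching, the update rule at the heart of counterfactual regret minimization, applied to a tiny two-player zero-sum matrix game. This is a toy stand-in and not SoG itself: the payoff matrix `PAYOFF` and the helper names (`regret_matching`, `solve_matrix_game`, `exploitability`) are assumptions made for illustration, and there is no search tree or neural network involved.

```python
# Hypothetical 2x2 zero-sum game: entries are payoffs to the row player,
# and the column player receives the negative of each entry.
PAYOFF = [[2.0, -1.0],
          [-1.0, 1.0]]

def regret_matching(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [1.0 / len(regrets)] * len(regrets)  # no positive regret: uniform

def solve_matrix_game(payoff, iterations):
    """Self-play with regret matching; returns both average strategies."""
    rows, cols = len(payoff), len(payoff[0])
    row_regret, col_regret = [0.0] * rows, [0.0] * cols
    row_sum, col_sum = [0.0] * rows, [0.0] * cols
    for _ in range(iterations):
        row_strat = regret_matching(row_regret)
        col_strat = regret_matching(col_regret)
        # Expected payoff of each pure action against the opponent's current mix.
        row_vals = [sum(payoff[i][j] * col_strat[j] for j in range(cols))
                    for i in range(rows)]
        col_vals = [-sum(payoff[i][j] * row_strat[i] for i in range(rows))
                    for j in range(cols)]
        row_ev = sum(row_strat[i] * row_vals[i] for i in range(rows))
        col_ev = sum(col_strat[j] * col_vals[j] for j in range(cols))
        for i in range(rows):
            row_regret[i] += row_vals[i] - row_ev
            row_sum[i] += row_strat[i]
        for j in range(cols):
            col_regret[j] += col_vals[j] - col_ev
            col_sum[j] += col_strat[j]
    return ([s / iterations for s in row_sum],
            [s / iterations for s in col_sum])

def exploitability(payoff, row_avg, col_avg):
    """Total gain available to best responses; zero at a minimax solution."""
    rows, cols = len(payoff), len(payoff[0])
    best_row = max(sum(payoff[i][j] * col_avg[j] for j in range(cols))
                   for i in range(rows))
    best_col = max(-sum(payoff[i][j] * row_avg[i] for i in range(rows))
                   for j in range(cols))
    return best_row + best_col

if __name__ == "__main__":
    row_avg, col_avg = solve_matrix_game(PAYOFF, 20000)
    print(row_avg, col_avg, exploitability(PAYOFF, row_avg, col_avg))
```

For this particular matrix, the minimax solution has each player choosing the first action 40% of the time, and the average strategies drift towards that mix as the iteration count grows. That mirrors, on a very small scale, the claim that more computation yields a better approximation.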

Key Strengths of SoG

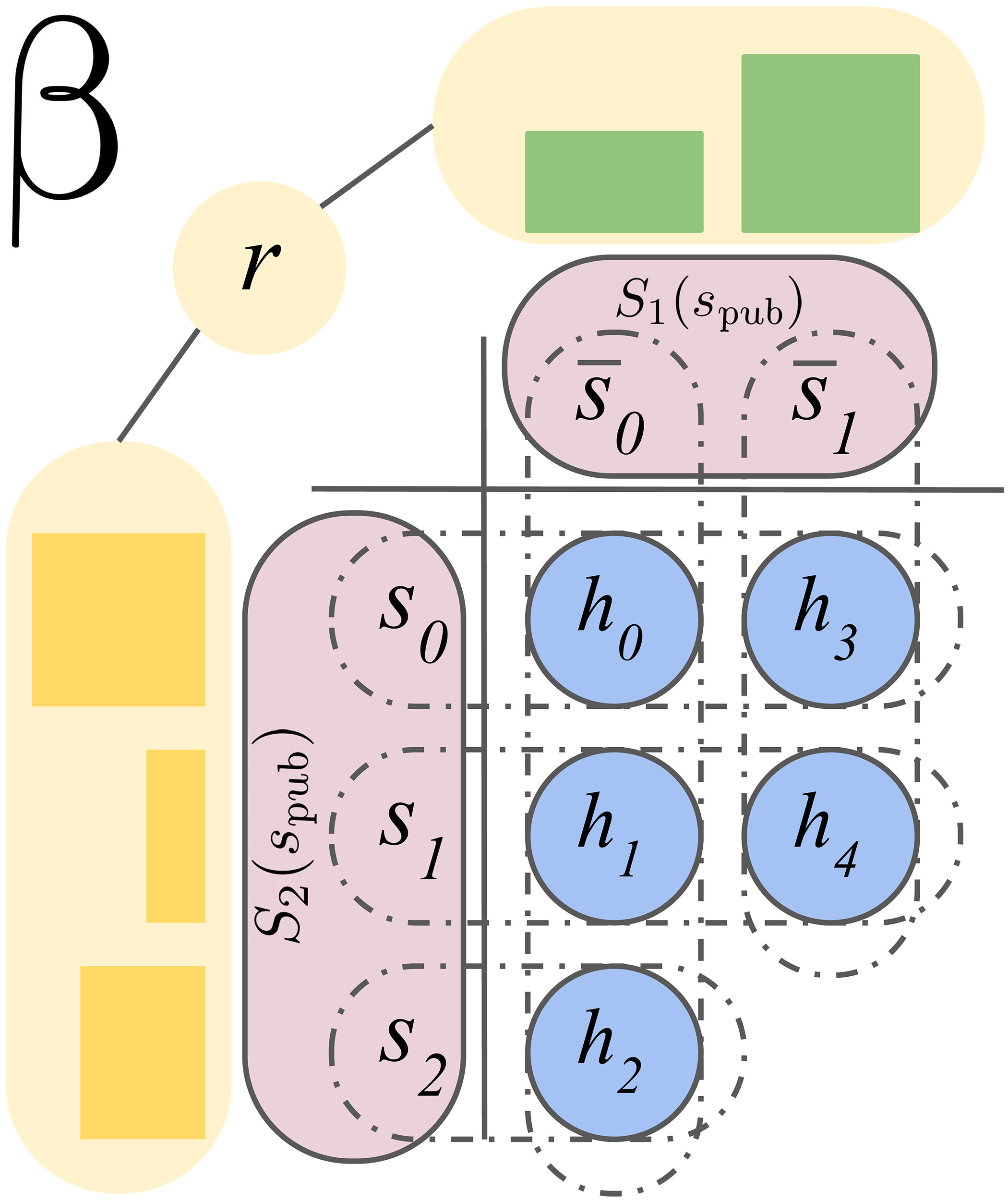

Using growing-tree counterfactual regret minimization (GT-CFR), SoG builds subgames non-uniformly, expanding the search tree towards the most relevant future states, an approach that applies across many kinds of games. In addition, sound self-play training, in which value-and-policy networks learn from both game outcomes and recursive sub-searches applied to situations that arose in earlier searches, equips SoG to perform well in domains with both perfect and imperfect information. This is an important step towards general techniques that can be applied in any setting, and it addresses challenges that are specific to imperfect information games.

Algorithmic Insights

SoG trains its agent through sound self-play. At each decision point, the agent runs a GT-CFR search backed by the CVPN to produce a policy for the current state, then samples an action at random from that policy. GT-CFR itself alternates between two phases, an expansion phase that adds nodes to the tree and a regret update phase that refines the strategies on the current tree, so the tree is progressively refined. Training data for the value and policy networks is generated during self-play from two sources, full-game trajectories and search queries, which provide the targets used to update the networks.
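The alternation between a regret update phase and an expansion phase can be pictured with a heavily simplified sketch. Everything here is an assumption made for illustration rather than the authors' implementation: the game is a toy perfect-information subtraction game (take one or two stones, whoever takes the last stone wins), `leaf_value` is a constant placeholder where SoG would query a CVPN, expansion adds all children of one leaf at a time, and the names `Node`, `regret_update`, `expansion`, and `grow_and_solve` are hypothetical.

```python
import random

ACTIONS = (1, 2)  # a move removes one or two stones

class Node:
    def __init__(self, stones, player):
        self.stones = stones      # stones left in the pile
        self.player = player      # player to move: 0 or 1
        self.children = {}        # action -> Node; empty until expanded
        self.regret = {}          # action -> cumulative regret
        self.strategy_sum = {}    # action -> reach-weighted strategy total

    def is_terminal(self):
        return self.stones == 0

    def expand(self):
        for a in ACTIONS:
            if a <= self.stones:
                self.children[a] = Node(self.stones - a, 1 - self.player)
                self.regret[a] = 0.0
                self.strategy_sum[a] = 0.0

def current_strategy(node):
    """Regret matching over the actions of an expanded node."""
    positive = {a: max(r, 0.0) for a, r in node.regret.items()}
    total = sum(positive.values())
    if total > 0:
        return {a: p / total for a, p in positive.items()}
    return {a: 1.0 / len(positive) for a in positive}

def leaf_value(node):
    # Placeholder for a learned value network (assumption: always 0.0).
    return 0.0

def regret_update(node, reach):
    """One CFR pass over the current tree; returns the value for player 0."""
    if node.is_terminal():
        # The player who took the last stone won, so the player to move lost.
        return -1.0 if node.player == 0 else 1.0
    if not node.children:
        return leaf_value(node)
    strategy = current_strategy(node)
    values = {}
    for a, child in node.children.items():
        child_reach = list(reach)
        child_reach[node.player] *= strategy[a]
        values[a] = regret_update(child, child_reach)
    node_value = sum(strategy[a] * values[a] for a in values)
    sign = 1.0 if node.player == 0 else -1.0   # player 1 minimizes
    opponent_reach = reach[1 - node.player]
    for a in values:
        node.regret[a] += opponent_reach * sign * (values[a] - node_value)
        node.strategy_sum[a] += reach[node.player] * strategy[a]
    return node_value

def expansion(root, rng):
    """Follow the current strategy to a leaf and add its children."""
    node = root
    while node.children:
        strategy = current_strategy(node)
        actions = list(strategy)
        weights = [strategy[a] for a in actions]
        node = node.children[rng.choices(actions, weights=weights)[0]]
    if not node.is_terminal():
        node.expand()

def grow_and_solve(stones, rounds, cfr_iters, seed=0):
    """Alternate regret updates and expansion; return the root average policy."""
    rng = random.Random(seed)
    root = Node(stones, 0)
    for _ in range(rounds):
        for _ in range(cfr_iters):
            regret_update(root, [1.0, 1.0])
        expansion(root, rng)
    total = sum(root.strategy_sum.values())
    return {a: s / total for a, s in root.strategy_sum.items()}

if __name__ == "__main__":
    print(grow_and_solve(stones=4, rounds=50, cfr_iters=10))
```

With four stones, the winning move for the first player is to take one stone and leave three, and the root's average policy in this sketch concentrates on that move as the tree grows. The real algorithm differs in important ways, notably in handling hidden information and in using a trained network both to evaluate leaves and to guide expansion.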

Limitations and Future Directions

- The betting abstractions used in poker could be replaced by a general action-reduction policy for large action spaces.

- The cost of SoG's current approach could be reduced by a generative model that samples world states, which may lower computational requirements.

- Whether comparable performance can be achieved with fewer resources remains an open question, given the substantial computation required in challenging domains.

The team’s optimism about SoG’s adaptability across different kinds of games is supported by its strong results in Go, chess, heads-up no-limit Texas hold’em poker, and Scotland Yard, which point to its potential for broader applications in games.