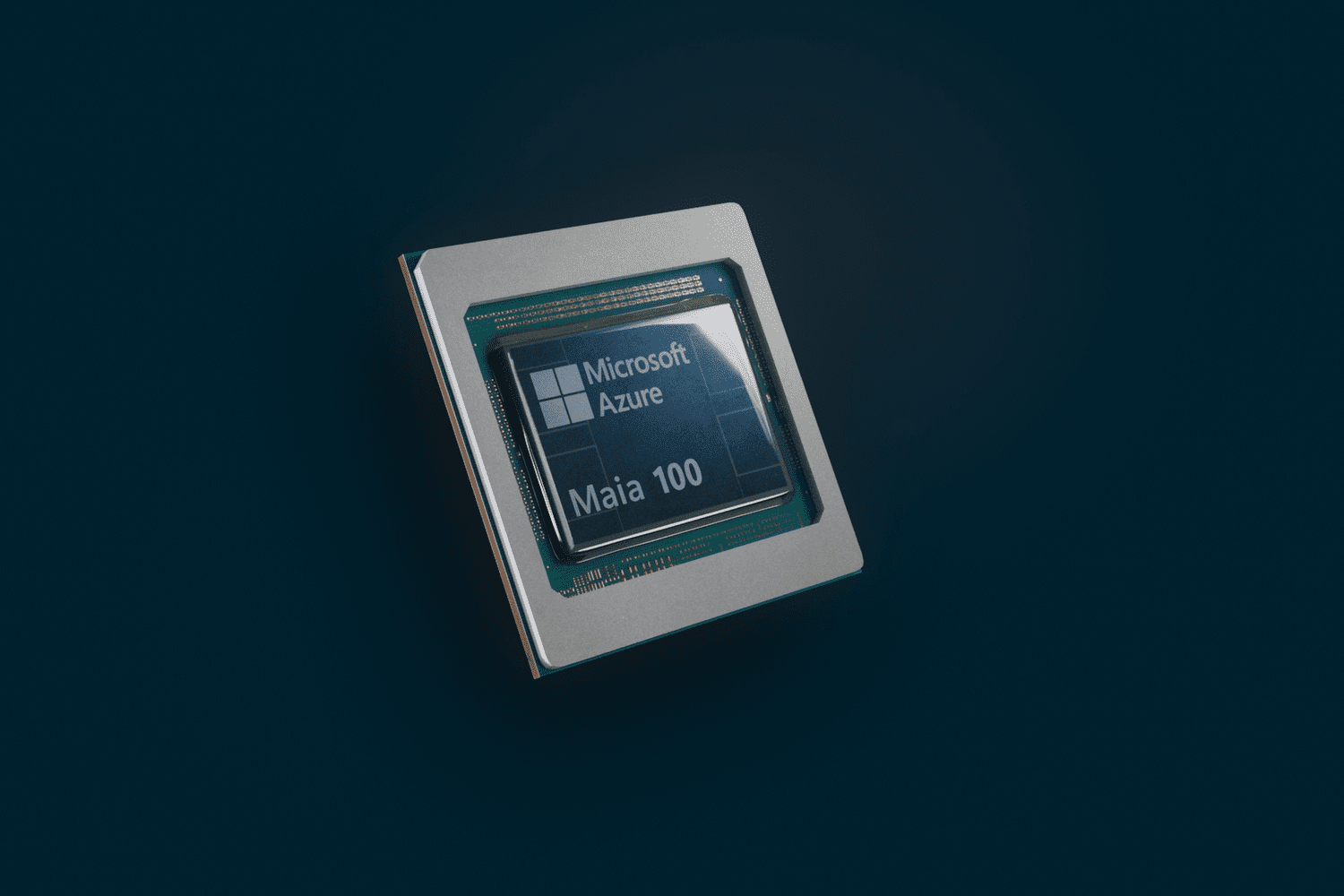

In response to surging demand for AI tools, Microsoft (MSFT) introduced the Azure Maia AI Accelerator, a specialized chip designed for artificial intelligence (AI) workloads, including generative AI (genAI).

At Microsoft Ignite, its annual developer conference, the company unveiled the Azure Maia AI Accelerator on Wednesday alongside other AI-related updates, including enhancements to its genAI application, Copilot, and its cloud platform, Azure.

Scott Guthrie, the executive vice president of Microsoft’s Cloud + AI Group, emphasized that Microsoft is establishing the necessary infrastructure to foster AI innovation. He highlighted that the Azure Maia AI Accelerator enables the company to expand its supply chain and offer users a wider range of system options.

The announcement follows closely on the heels of Nvidia’s (NVDA) introduction of the H200, its latest and most powerful graphics processing unit (GPU) for running AI systems, as competition in the AI technology sector heats up. Bank of America analysts described the H200 as a significant advance over the H100, previously Nvidia’s leading model. The H200 is slated to become available in the second quarter of 2024.

Nvidia holds a prominent position in the AI hardware market, with its technology powering AI systems at businesses including Amazon (AMZN), Google, and Microsoft itself. Microsoft also announced that it is expanding its industry partnerships, including its collaboration with Nvidia on cloud services. There is speculation that the Azure Maia AI Accelerator could eventually replace Nvidia chips in Microsoft’s cloud infrastructure.

Microsoft said it plans to add the new Nvidia H200 to its offerings next year to support large-scale inferencing. It also released a public preview of the new NC H100 v5 Virtual Machine Series, which is built for the H100.