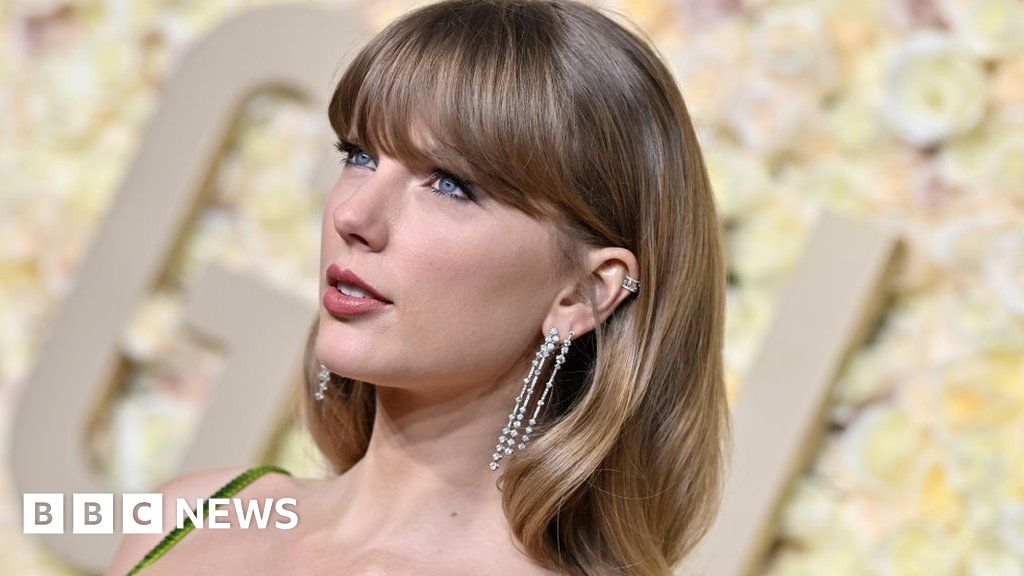

Social media platform X has blocked searches for Taylor Swift after explicit AI-generated images of the singer circulated on the platform.

According to X’s head of business operations, Joe Benarroch, the block is temporary and is intended to prioritize user safety. Users who search for Taylor Swift on the platform see an error message prompting them to reload the page.

The dissemination of fake graphic images of the singer gained significant traction earlier this week, garnering millions of views and raising concerns among both US officials and Swift’s fanbase.

In response, fans flagged posts and accounts sharing the fabricated images and flooded the platform with authentic photos and videos of Taylor Swift under the hashtag “protect Taylor Swift.”

X, previously known as Twitter, issued a statement on Friday saying that posting non-consensual nudity on its platform is strictly prohibited. The statement said the platform’s teams were working to remove all identified images and to take action against the accounts responsible.

It is unclear when X began blocking searches for Taylor Swift, or whether the platform has previously blocked searches for other public figures or terms.

Mr. Benarroch told the BBC that the precautionary measure reflects the platform’s commitment to prioritizing safety.

The matter also drew the attention of the White House, which expressed alarm over the spread of the AI-generated photos. The press secretary highlighted the disproportionate impact on women and girls and emphasized the need for legislation to address the misuse of AI technology on social media platforms.

US lawmakers have echoed these sentiments, calling for new legislation to criminalize the creation of deepfakes, which use AI to manipulate a person’s face or body in images or videos. The absence of federal laws specifically targeting the sharing or production of deepfake content underscores the urgency of regulatory action at both state and national levels.

In the UK, the Online Safety Act 2023 made the sharing of deepfake pornography illegal, signaling a proactive stance against the dissemination of digitally altered explicit content.