Mira, a student of global business studies at Artevelde University of Applied Sciences in Belgium, was recently taken aback when an AI detection tool flagged 40% of her paper as machine-generated. Perplexed, she turned to Twitter for help.

Mira, whose real identity was kept confidential, expressed her concerns to The Daily Beast, stating, “I was certain that I did not utilize any AI assistance, which made me anxious about the originality of my work.” Unsure how to prove that she had written the paper herself, she raised the problem with her instructor, who agreed to review the paper. While she waited for a response, Mira grappled with the situation.

The implications of this discovery weighed heavily on her, leading her to contemplate abandoning the assignment, and potentially even dropping the entire course, if the AI detector continued to flag a significant portion of her work. The experience has heightened Mira’s anxiety about writing future essays, a task that is already challenging for her as a non-native English speaker.

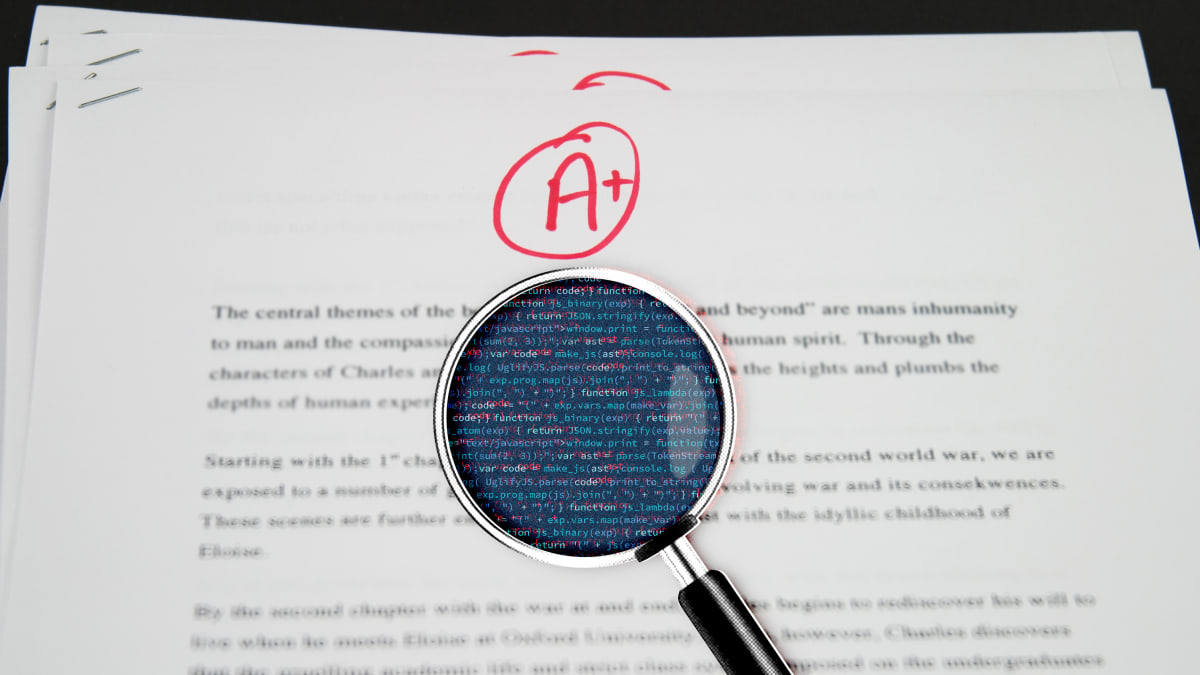

Mira’s encounter with AI detection is not an isolated incident. Following the emergence of ChatGPT, individuals have taken to social media to share their concerns and experiences of being wrongly accused of using AI to plagiarize their assignments. Some have recounted instances where false accusations of submitting AI-generated work not only impacted their grades but also took a toll on their mental well-being, prompting them to withdraw from academic pursuits.

Educators, institutions, and experts now face the task of addressing the shortcomings of AI detection tools and the implications of relying on such technology in education. The swift proliferation of generative AI models like ChatGPT has caught many off guard, leading to unforeseen challenges in academia. Vinu Sankar Sadasivan, a Ph.D. student in computer science at the University of Maryland, highlighted these concerns in a pre-print paper on the efficacy of AI detectors.

Sadasivan emphasized that the rapid rise of powerful language models, such as ChatGPT, has raised significant concerns within the academic community due to the potential risks of misuse, such as plagiarism. The hasty adoption of AI detectors without a comprehensive understanding of their functioning or reliability has resulted in instances where students have been wrongly accused of academic dishonesty, as illustrated in a widely circulated Twitter post.

The Evolving Educational Landscape

Janelle Shane, an AI researcher and author, offered a broader perspective on the issue based on her own encounters with detection tools. Shane noted a shift in her perception after witnessing instances of false positives generated by these detectors. While initially intrigued by their evaluative capabilities, she began to question their reliability, especially in high-stakes scenarios where accusations of plagiarism could have severe repercussions.

Shane remarked, “Even though I knew I authored my own book, I could identify false positives within it as well.” The implications of relying on detection tools, particularly in cases where academic integrity is paramount, have sparked widespread discussions and raised concerns among individuals sharing similar experiences.

According to Shane, the deterministic nature of tools like ChatGPT, which lack contextual memory between interactions, can lead to erroneous conclusions. In one instance, when a professor presented ChatGPT with an assignment, it struggled to tell whether the text had been written by a human.

As instructors begin working alongside ChatGPT, the rapid development and deployment of AI has introduced numerous unforeseen challenges, catching educators and students alike off guard.

For neurodivergent students and non-native English speakers like Mira, navigating these challenges becomes even more daunting. A Stanford study published in the journal Patterns in July 2023 revealed a clear bias in AI detection tools against texts written by non-native English speakers. Shane further noted a similar “bias” in the evaluation of works by neurodivergent artists.

Rua M. Williams, an associate professor of user experience design at Purdue University, shared an incident in which recipients assumed that AI had written Williams’s own messages, highlighting the prevalent misconceptions surrounding AI-generated content. Williams emphasized the need to address this “AI panic,” particularly among practitioners, which may unfairly target individuals with diverse linguistic backgrounds or communication styles.

Alain Goudey, an associate professor specializing in electrical engineering at NEOMA Business School, underscored how AI detectors assess texts based on their “perplexity,” a measure of how predictable a text is to a language model, which often leads to erroneous flags for non-native English speakers. Goudey explained that using common English words tends to lower the perplexity score, so the text is more likely to be misidentified as AI-generated. Conversely, more sophisticated vocabulary can raise the perplexity score, which detectors read as a sign of human authorship.
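To make the perplexity idea concrete, here is a minimal sketch in Python. It is not how any particular commercial detector works: real tools rely on large neural language models and usually combine several signals. The toy unigram model, the tiny reference corpus, and the names `train_unigram` and `perplexity` are all assumptions made for illustration. The sketch only shows the mechanism Goudey describes, namely that text built from words the model sees often scores a lower perplexity than text built from rarer words.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens):
    """Build a toy unigram model with add-one smoothing.

    Hypothetical helper for illustration only. Every unseen word shares
    one reserved "unknown" slot, so the probabilities still sum to 1.
    """
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 for the shared "unknown" slot

    def prob(token):
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """Perplexity = exp of the average negative log-probability per token."""
    log_sum = sum(math.log(prob(t)) for t in tokens)
    return math.exp(-log_sum / len(tokens))


# Tiny stand-in for the text a language model was trained on (assumption).
REFERENCE = (
    "the cat sat on the mat and the dog sat on the rug "
    "the cat and the dog are good the day is good"
).split()

prob = train_unigram(REFERENCE)

# Same idea, expressed with common words versus rarer words.
common_text = "the cat sat on the mat".split()
rare_text = "the feline reclined upon the tapestry".split()

ppl_common = perplexity(common_text, prob)
ppl_rare = perplexity(rare_text, prob)

# A detector that treats low perplexity as a sign of machine authorship
# would be more suspicious of the common-word sentence.
print(f"common words: {ppl_common:.1f}")
print(f"rarer words:  {ppl_rare:.1f}")
```

In this toy setup the sentence made of frequent words is the more "predictable" one, so a perplexity-based rule would be more likely to flag it, even though a person wrote both. That is the pattern behind the false positives for writers who lean on a smaller, more common vocabulary.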

Furthermore, Goudey highlighted the additional burden placed on non-native English speakers, who already face challenges in language acquisition, when their work is inaccurately flagged as AI-generated. This misclassification can be detrimental and discouraging for individuals striving to master a new language.

T. Wade Langer Jr., a professor at the University of Alabama Honors College, acknowledged these concerns and emphasized the importance of engaging in dialogue rather than hastily assigning blame based on AI detection results. Langer views AI tools as a starting point for discussions with students, prioritizing a collaborative approach over punitive measures.

Navigating issues of scientific integrity and academic misconduct can significantly impact individuals’ mental well-being, underscoring the need for open dialogue and understanding within educational settings. Rather than relying solely on automated assessments, educators should strive to foster a culture of integrity through meaningful conversations and nuanced evaluations.

Sam Altman, the CEO of OpenAI, announced plans to develop enhanced tools for ChatGPT customization during the company’s 2023 developer conference. While these advancements aim to facilitate student use of chatbots for academic assistance, concerns linger regarding the long-term impact on creativity and potential biases perpetuated by such technologies.

Experts like Sadasivan caution against overlooking the nuanced implications of AI tools and advocate for a reevaluation of their role in education. As technology outpaces traditional pedagogy, educators must adapt to these changes while safeguarding against potential pitfalls. A shift in perspective is needed to harness the benefits of AI tools without compromising academic integrity or stifling creativity.

Langer emphasized the importance of refraining from using AI tools as definitive measures of student performance, urging educators to prioritize dialogue and engagement over simplistic assessments. Upholding academic integrity requires a commitment to thorough evaluation and understanding, transcending quick fixes or rigid standards.