Artificial Intelligence (AI) is advancing rapidly, driven largely by the capabilities of Large Language Models (LLMs). These models, built on Natural Language Processing, Understanding, and Generation, have shown strong aptitude and potential across many industries.

Phind has introduced Phind-70B, a new AI model designed to improve the coding experience of developers worldwide. The team's goal is to close the code-quality gap with leading models such as GPT-4 Turbo while running considerably faster.

Phind-70B is based on the CodeLlama-70B model and is fine-tuned on an additional 50 billion tokens. It generates output at up to 80 tokens per second, which lets it give developers fast feedback and near-instant answers to technical questions.

Beyond speed, Phind-70B can generate complex code and reason over large amounts of context thanks to its 32K-token context window. This lets the model take more of a project into account when proposing a solution, which helps it produce more complete and relevant code.

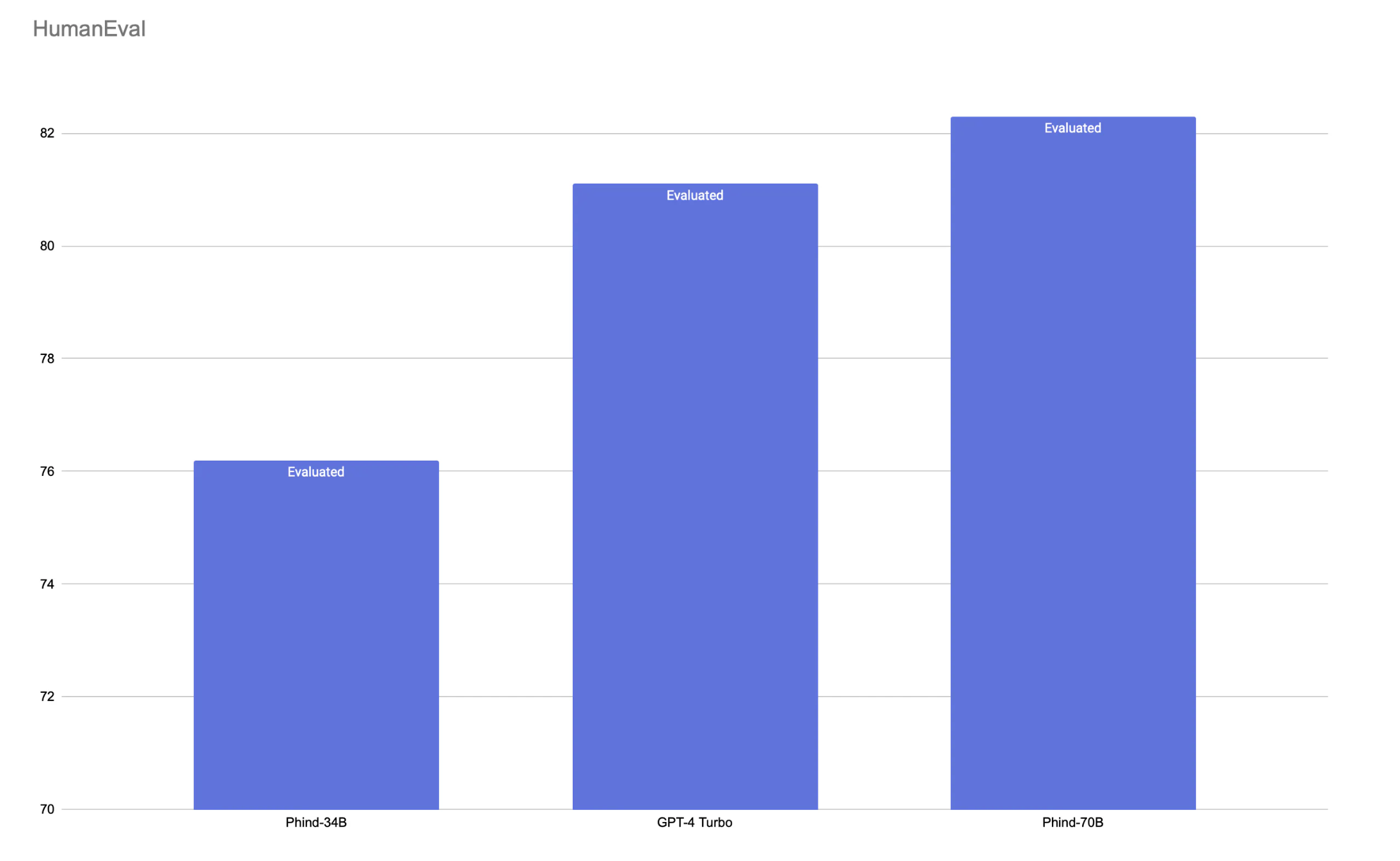

According to the team, Phind-70B outperforms GPT-4 Turbo on the HumanEval benchmark, scoring 82.3% versus GPT-4 Turbo's 81.1%. On Meta's CRUXEval dataset, however, Phind-70B scores 59% compared with GPT-4 Turbo's 62%. The team notes that these benchmarks may not fully reflect how well a model performs in practical coding work, and reports that in real-world use Phind-70B produces strong code and is proactive about writing detailed code examples.
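To put the HumanEval gap in perspective, the short calculation below converts the two percentages into numbers of problems solved. It is a sketch under stated assumptions: it assumes both scores are pass@1 over the full 164-problem HumanEval set and that the published figures are rounded to one decimal place. Under those assumptions, the 1.2-point difference amounts to roughly two problems.

```python
# Assumption: both scores are pass@1 over the full 164-problem HumanEval set,
# and the published percentages are rounded to one decimal place.
HUMANEVAL_PROBLEMS = 164


def problems_solved(score_percent: float, total: int = HUMANEVAL_PROBLEMS) -> int:
    """Return the whole number of problems closest to a rounded percentage score."""
    return round(score_percent / 100 * total)


phind = problems_solved(82.3)       # 0.823 * 164 is about 134.97, rounds to 135
gpt4_turbo = problems_solved(81.1)  # 0.811 * 164 is about 133.00, rounds to 133

if __name__ == "__main__":
    print(phind, gpt4_turbo, phind - gpt4_turbo)
```

Checking the result in reverse (135/164 is about 82.3% and 133/164 is about 81.1%) confirms that these counts are consistent with the reported scores.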

A key advantage of Phind-70B is its speed: the team reports that it runs four times faster than GPT-4 Turbo. Running NVIDIA's TensorRT-LLM library on the latest H100 GPUs contributes significantly to this efficiency and inference performance.
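To make the throughput figures concrete, here is a back-of-the-envelope sketch of how decoding speed translates into waiting time for a single answer. It assumes a constant generation rate and ignores time-to-first-token and network latency. The 80 tokens/s value is Phind's reported peak rate. The GPT-4 Turbo rate is only what "four times faster" implies, not a measured number, and the 1,000-token response size is a hypothetical example.

```python
# Back-of-the-envelope estimate of streaming time for one response.
# Assumptions: constant decode rate, no time-to-first-token or network latency.
PHIND_70B_TPS = 80.0                         # reported peak rate ("up to 80 tokens per second")
IMPLIED_GPT4_TURBO_TPS = PHIND_70B_TPS / 4   # 20 tokens/s, implied by "four times faster" (not measured)


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to stream num_tokens at a constant tokens_per_second rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    response_tokens = 1_000  # hypothetical length of a long code answer
    for name, tps in [
        ("Phind-70B", PHIND_70B_TPS),
        ("GPT-4 Turbo (implied)", IMPLIED_GPT4_TURBO_TPS),
    ]:
        print(f"{name}: {generation_seconds(response_tokens, tps):.1f} s")
```

Under these assumptions, a 1,000-token answer streams in about 12.5 seconds at 80 tokens/s versus about 50 seconds at the implied 20 tokens/s. That difference is what makes the speed noticeable in interactive use.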

The team worked with cloud partners SF Compute and AWS to secure the infrastructure needed to train and deploy Phind-70B. To make the model widely accessible, Phind-70B can be tried for free without logging in, and a Phind Pro subscription is available for users who want additional features and more extensive coding assistance.

Looking ahead, the Phind-70B team plans to release the weights for the Phind-34B model and eventually make the Phind-70B model weights public, fostering a culture of collaboration and innovation.

In essence, Phind-70B aims to improve the developer experience by combining fast inference with strong code quality. By making AI-assisted coding faster and more accessible, it represents a notable step forward in the field.


Tanya Malhotra, a final year undergraduate student at the University of Petroleum & Energy Studies, Dehradun, is pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, coupled with a keen interest in acquiring new competencies, leading teams, and managing tasks efficiently.
