Pop sensation Taylor Swift recently found herself at the center of a deepfake scandal, with AI-generated explicit images circulating widely on the social media platform X. The incident sparked immediate backlash from politicians and tech leaders, among others.

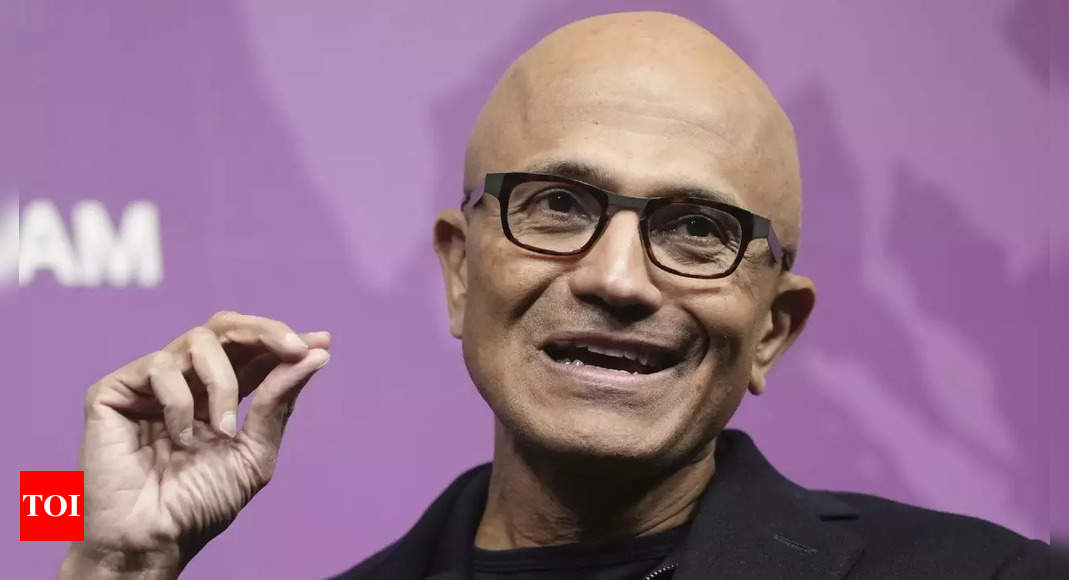

Among those condemning the deepfakes was Microsoft CEO Satya Nadella, who described the situation as “alarming and terrible” and emphasized the urgent need for action. Nadella stressed the need for stringent regulations to ensure safer online content and pointed to ongoing efforts in this direction. The incident underscores the critical issues surrounding online security and the ethical dilemmas posed by advances in AI technology.

In an interview with NBC, Nadella underscored the importance of establishing robust guardrails to govern technology and promote the production of safe content. He emphasized the need for a global consensus on norms and for collaboration among legal authorities, law enforcement agencies, and tech platforms to address such incidents.

The explicit deepfake images have been traced to a Telegram channel notorious for circulating AI-generated adult content. According to a report by 404 Media, the images of Taylor Swift were generated using a free Microsoft text-to-image AI tool.

While AI tools typically prohibit the creation of such content, previous studies have revealed vulnerabilities that allow carefully worded prompts to manipulate these systems into producing inappropriate material.

The circulation of these fake explicit images elicited strong reactions from Taylor Swift’s fans, as well as from politicians and the White House, with many expressing outrage over the violation of the pop star’s privacy. One particularly egregious image reportedly garnered 47 million views on X, underscoring how widely such content can spread.