With the recent passage of the Artificial Intelligence Act, the European Union is poised to implement some of the world’s most stringent AI regulations. The legislation designates certain AI applications as “unacceptable,” making them illegal except under defined conditions for specific purposes such as government use, law enforcement, and scientific research.

Like the General Data Protection Regulation (GDPR), the new law will impose obligations on every entity doing business in the 27 member states, not only those headquartered there. Its primary objective, according to the drafters, is to safeguard citizens’ rights and freedoms while promoting innovation and entrepreneurship. The 460-page Act, however, covers considerably more ground than that.

Businesses operating in Europe or serving European consumers need to understand the key implications of this legislation so they can adapt before significant changes take effect.

Commencement of the Act:

The European Parliament endorsed the Artificial Intelligence Act on March 13, 2024, with formal adoption by the Council of the European Union expected shortly afterward. Full enforcement may take up to 24 months, but certain provisions, such as the ban on specific practices, could apply within six months.

As with GDPR, this grace period gives organizations time to become compliant, after which non-compliance could result in substantial penalties. The fines are tiered: the most severe violations, those breaching the “unacceptable uses” prohibition, attract penalties of up to 35 million euros or seven percent of global annual turnover, whichever is greater.
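
As a rough illustration of the “whichever is greater” rule, the minimal Python sketch below computes the ceiling of a top-tier fine. The function name `max_fine_eur` and its parameters are illustrative assumptions, not anything defined by the Act. The defaults of 35 million euros and seven percent are the figures in the Act’s final text for prohibited practices; other tiers use lower values, and the fine actually imposed in any given case is set by regulators, up to this ceiling.

```python
def max_fine_eur(global_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> int:
    """Return the fine ceiling: the greater of a fixed cap and a share of turnover.

    All amounts are whole euros. The defaults are assumptions for the top tier;
    pass other values to model a different tier.
    """
    if global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    share_of_turnover = global_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, share_of_turnover)


# Turnover of 200 million euros: 7% is 14 million, so the fixed cap applies.
print(f"{max_fine_eur(200_000_000):,}")    # 35,000,000
# Turnover of 2 billion euros: 7% is 140 million, which exceeds the fixed cap.
print(f"{max_fine_eur(2_000_000_000):,}")  # 140,000,000
```

With these defaults, the percentage becomes the binding figure once global turnover exceeds 500 million euros.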

Moreover, reputational damage could prove even more costly for businesses found to be infringing the new rules. Trust is pivotal in AI, and companies that show themselves to be untrustworthy are likely to be punished by consumers.

Prohibited AI Applications:

The Act underscores that AI should be human-centric, serving as a tool to enhance human well-being. Accordingly, the EU has outlawed several potentially harmful applications, including behavior manipulation, biometric categorization that infers sensitive beliefs, discriminatory social scoring, and real-time remote biometric identification in public spaces.

Exceptions spell out when law enforcement agencies may use “unacceptable” AI, such as in counterterrorism and search operations, but how they will be interpreted remains uncertain. The Act’s emphasis on mitigating potential harm is a central feature of the new rules.

Nonetheless, some provisions are ambiguous, and their full implications will not be clear until the enforcement mechanisms are spelled out.

Classification of AI Risk Levels:

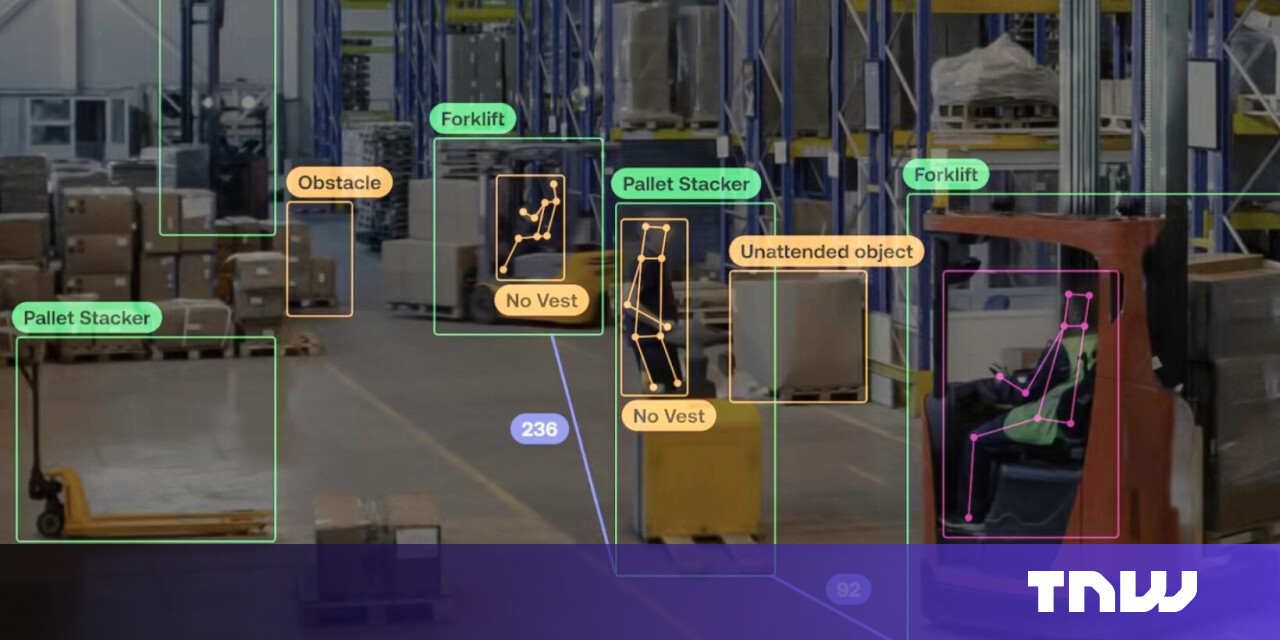

Beyond the prohibited applications, the Act sorts AI tools into high-, limited-, and minimal-risk categories. High-risk AI covers domains such as autonomous vehicles and medical technologies, and businesses in these sectors face stringent rules on data integrity and security.

Limited- and minimal-risk applications, such as those used in entertainment or creative processes, carry fewer obligations but must still be transparent and use intellectual property ethically.
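
For a business taking stock of its own AI tools, the tiers can be treated as a simple lookup. The Python sketch below is one hypothetical way to triage an internal inventory. The labels, the `EXAMPLE_TIERS` mapping, and the `triage` function are all assumptions made for illustration, drawn only from the examples named in this article. They are not a legal classification, and anything not listed is flagged for review rather than assumed safe.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical inventory labels mapped to tiers. The article does not say which
# of the two lower tiers each example belongs to; that split is an assumption.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "behavior_manipulation": RiskTier.UNACCEPTABLE,
    "autonomous_vehicle": RiskTier.HIGH,
    "medical_technology": RiskTier.HIGH,
    "creative_tool": RiskTier.LIMITED,   # assumption
    "entertainment": RiskTier.MINIMAL,   # assumption
}


def triage(inventory: list[str]) -> dict[str, list[str]]:
    """Group system labels by tier name; unknown labels go under 'unclassified'."""
    grouped: dict[str, list[str]] = {}
    for label in inventory:
        tier = EXAMPLE_TIERS.get(label)
        key = tier.value if tier is not None else "unclassified"
        grouped.setdefault(key, []).append(label)
    return grouped


print(triage(["medical_technology", "social_scoring", "support_chatbot"]))
```

Keeping an explicit “unclassified” bucket makes the gaps visible: those are the systems that need a closer look before any obligations can be mapped to them.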

Emphasis on Transparency:

Transparency is a cornerstone of the Act, although it raises questions of interpretation, particularly over how trade secrets will be protected. The legislation mandates clear labeling of AI-generated content to combat deception and disinformation.

It also imposes disclosure requirements, especially on developers of high-risk systems, covering functionality, data usage, and human oversight. Transparency is pivotal for building trust in AI, but how these provisions will be enforced in practice remains open to scrutiny.

Implications for Future AI Regulation:

The EU Artificial Intelligence Act represents a proactive approach to the regulatory challenges posed by AI. The legislation is a significant milestone, but the focus must now shift to establishing robust regulatory frameworks and enforcement mechanisms.

Anticipated regulations elsewhere, including in the USA and China, mean that businesses worldwide need to prepare for an evolving AI governance landscape. Understanding the risk profiles of AI tools, prioritizing transparency, and keeping up with regulatory developments are key to organizational readiness.

Ultimately, cultivating an ethical AI culture, built on clean data, explainable algorithms, and risk mitigation strategies, is the most effective way to navigate the evolving regulatory environment.