It was a moment akin to the iconic iPhone launch: in late 2022, OpenAI unveiled ChatGPT. The chatbot could draft content, answer personalized questions, and even crack jokes with humanlike fluency, sparking widespread fascination. ChatGPT, built on a foundation model (a machine learning model trained on vast datasets), thrust AI into the spotlight.

However, this new chapter in AI’s story quickly raised concerns that the technology could spread misinformation and generate false content through so-called “hallucinations.” Critics also warned that, in the hands of businesses, AI could contribute to problems ranging from data breaches to biased hiring and large-scale job losses.

Alexandra Reeve Givens, CEO of the Center for Democracy & Technology, emphasized that the breakthrough in foundation models is only part of the story: businesses across sectors must also grapple with the many use cases to which AI is being applied.

For the corporate world, the imperative is clear: any company that claims to be responsible must deploy AI in a way that avoids harm to society and risks to the business and its stakeholders. The crux is keeping AI applications trustworthy. Ken Chenault, former CEO of American Express and co-chair of the Data & Trust Alliance, stressed the distinct nature of AI and machine learning models and the need to continually scrutinize their underlying assumptions.

AI could have positive impacts, enabling advances in areas such as sustainable food production and broader access to healthcare and renewable energy. But it also poses challenges: the high energy consumption of training chatbots and generating content, together with concerns about transparency and ethical standards, could hinder progress toward the UN’s Sustainable Development Goals.

Despite these risks, AI could accelerate progress on climate goals, from making electric grids more efficient to using analytics for real-time monitoring of deforestation and carbon emissions. Mike Jackson of Earthshot Ventures underscored AI’s potential to speed up change on climate, which he saw as a significant boon.

For companies in every sector, navigating AI’s promise and pitfalls demands a careful balance between progress and caution. Rigorous testing of AI models, combined with policies to prevent unintended harm and inequity, is essential to avoid the loss of control that organizations fear.

In a notable 2023 incident, New York lawyer Steven Schwartz faced ridicule in court after submitting a legal brief that included fabricated case citations and opinions generated by ChatGPT. The episode highlighted the propensity of AI programs to make glaring errors and raised concerns about their use in critical industries such as nuclear power and aviation, where errors could be catastrophic.

Beyond threatening physical safety, AI can introduce bias into crucial decisions in hiring, law enforcement, and lending. In healthcare, concerns range from data breaches to reliance on models trained on datasets that underrepresent marginalized communities.

Maintaining public trust is a paramount concern for companies, and a KPMG survey underscored the importance of developing AI ethically. Carl Carande, US head of advisory at KPMG, emphasized the need for robust frameworks and safeguards to navigate the ethical terrain of AI applications.

A vendor in the loop

As companies strive to show that they deploy AI responsibly, their obligations extend beyond internal operations to external vendors. Governance and controls in AI procurement are pivotal, noted Alexandra Reeve Givens of the Center for Democracy & Technology, because lapses have downstream consequences for customers.

Yet only 11% of institutional investors in a CFA Institute survey identified reliance on third-party vendors as a risk in adopting AI and big data. Acknowledging this gap, the Data & Trust Alliance published a guide for evaluating how well human resources vendors can mitigate bias in their algorithmic systems.

Watchful eyes

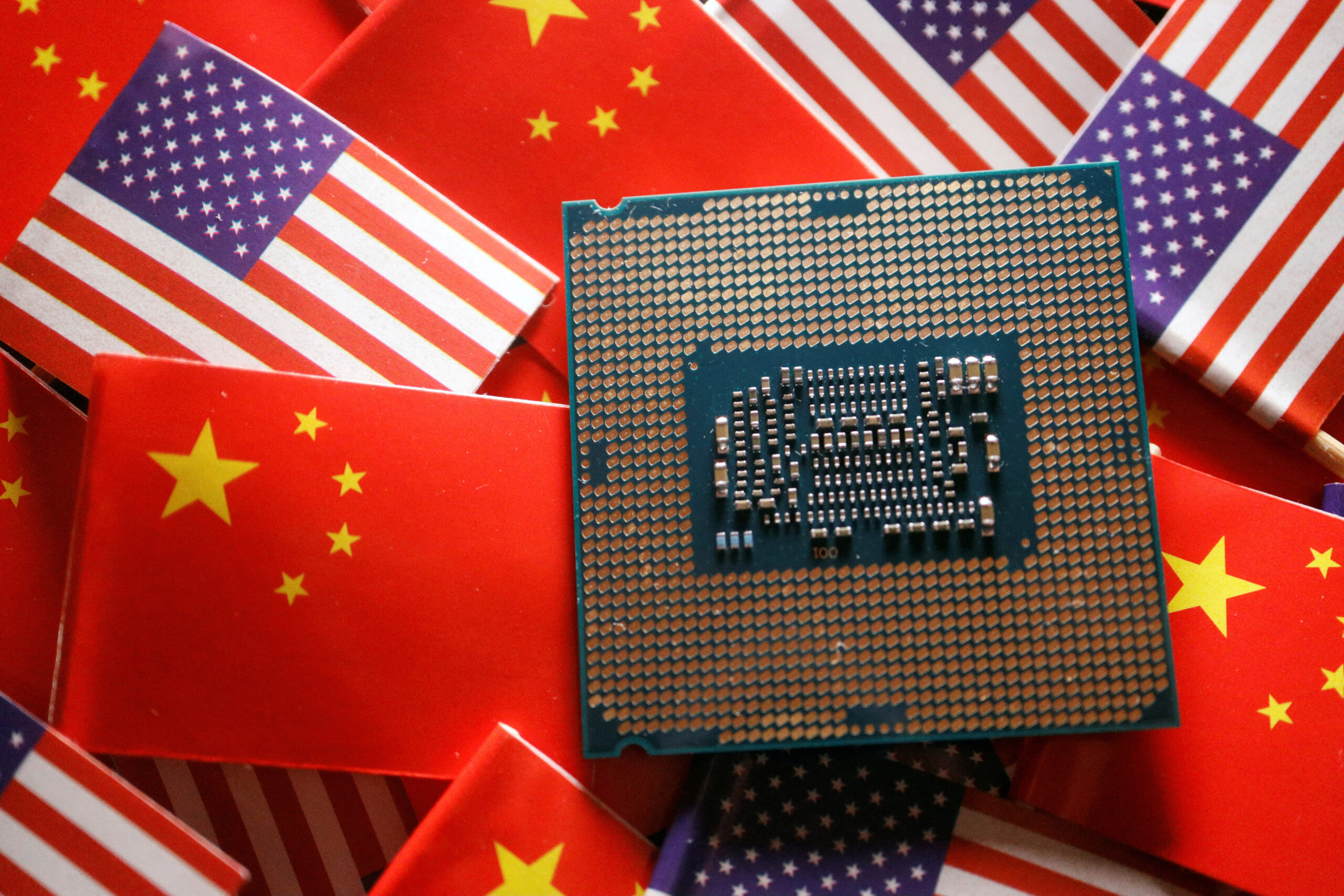

Governments are moving to regulate AI, with the EU leading the way through the stringent rules of its Artificial Intelligence Act. The US and China have also signaled willingness to cooperate on mitigating AI risks, reflecting a global recognition that regulatory frameworks are needed.

Despite these strides, gaps remain, especially in standards and certification. Given how complex it is to assess an AI system’s functionality and impact, a coordinated global dialogue on certification approaches is urgently needed.

Debate continues over how well regulation can balance innovation against risk, with policymakers stressing that governance is needed both to realize AI’s potential and to avert its pitfalls.

Investing with an AI lens

Investors are increasingly scrutinizing companies’ AI practices through an ESG lens, seeking to gauge the risks involved and to assess whether AI applications align with sustainability goals. Initiatives such as Investors for a Sustainable Digital Economy aim to foster collaboration among asset managers and asset owners as they navigate the evolving digital landscape.

Asset managers such as Axa Investment Managers are using AI tools to streamline ESG assessments, analyzing corporate documents to gauge companies’ contributions to the UN’s Sustainable Development Goals. Integrating AI into ESG decision-making promises to bring more diverse data points into a comprehensive evaluation of companies’ environmental and social impact.

Serving people and planet

AI is not only transforming impact-focused ventures but also driving positive change in sectors such as healthcare and clean technology. By harnessing AI’s analytical power, companies are improving operational efficiency, accelerating drug development, and optimizing resource use to support their sustainability efforts.

AI-powered solutions such as virtual clinics and waste-sorting robots show how AI can expand access to essential services and reduce environmental impact. These innovations illustrate how technology and sustainability can intersect to build a more equitable and efficient future.

As AI spreads across industries, the need for responsible and ethical deployment grows more pressing. To harness AI’s transformative potential while mitigating its risks, companies must balance innovation with caution and prioritize governance, transparency, and accountability. By aligning AI applications with societal and environmental priorities, businesses can drive positive change while building trust and sustainability in the digital age.