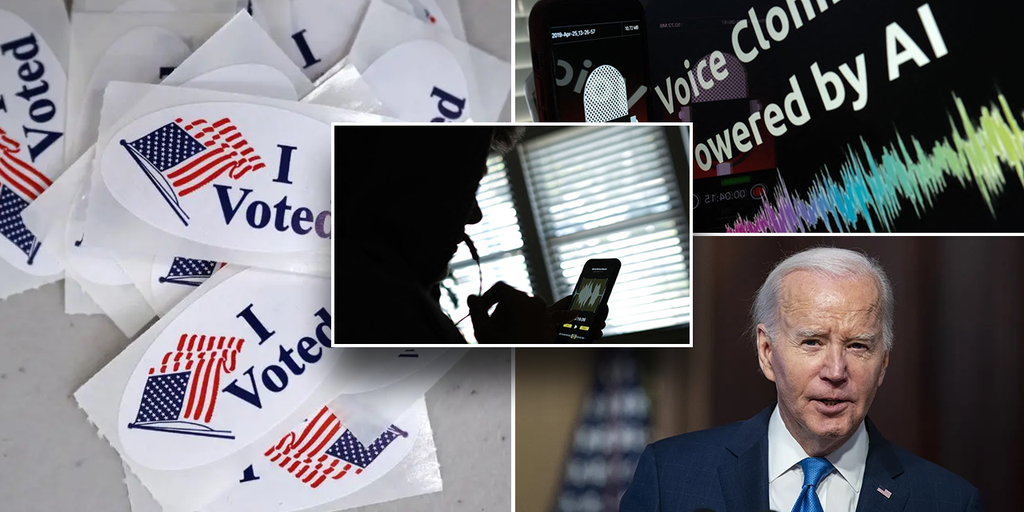

After a fraudulent robocall impersonating President Biden targeted New Hampshire residents, experts are warning that voters may be inundated with content generated by artificial intelligence (AI) with the potential to interfere with the 2024 primary and presidential elections.

While threat actors are using AI to overcome existing security measures and make attacks bigger, faster, and more covert, researchers are now leveraging AI tools to create new defensive capabilities. But Optiv Vice President of Cyber Risk, Strategy and Board Relations James Turgal told Fox News Digital that, make no mistake, generative AI poses a “significant threat.”

“I believe the greatest impact could be AI’s capacity to disrupt the security of party election offices, volunteers and state election systems,” he said.

Turgal, an FBI veteran, noted that a threat actor’s goals can include changing vote totals, undermining confidence in electoral outcomes or inciting violence. Even worse, threat actors can now do so on a massive scale.

Experts say that election offices and staffers need to be trained on social engineering and AI-generated threats for upcoming elections. (Al Drago/Bloomberg/Chip Somodevilla/Chris Delmas/AFP/Getty)

“In the end, the threat posed by AI to the American election system is no different than the use of malware and ransomware deployed by nation-states and organized crime groups against our personal and corporate networks on a daily basis,” he said. “The battle to mitigate these threats can and should be fought by both the United States government and the private sector.”

To mitigate the threat, Turgal suggested that election offices adopt policies to defend against social engineering attacks and that staff take part in deepfake video training covering attack vectors such as email, text and social media platforms, as well as in-person and telephone-based attempts.

He also stressed that private sector companies that create AI tools, including large language chatbots, have a responsibility to ensure that chatbots provide accurate information on elections. To do this, companies must confirm their AI models are trained to state their limitations to users and redirect them to authoritative sources, such as official election websites.

When asked by Fox News Digital whether voters may see a larger quantity of AI-generated voices that could potentially sway their decisions, NASA Jet Propulsion Laboratory (JPL) Chief Technology and Innovation Officer Chris Mattmann said, “The cat’s out of the bag.”

The AI-generated spoof call imitating Biden is under investigation by the New Hampshire attorney general’s office, and its origin is unknown. Experts said that because programs that can replicate voices are widely available as applications and online services, it is nearly impossible to determine which program created it.

Artificial Intelligence words are seen in this illustration taken on March 31, 2023. (REUTERS/Dado Ruvic/Illustration/File Photo)

The voice, which is a digital manipulation of Biden, told New Hampshire voters that casting their ballot on Tuesday, January 23, would only help Republicans on their “quest” to elect Trump once again.

The voice also claimed their vote would make a difference in November but not during the primary.

While federal law criminalizes knowing attempts to limit people’s ability to vote or sway their voting decisions, regulation of the deceptive use of AI has yet to be implemented.

When these audio clips reach such speed and authenticity and are added to AI-generated video trained on millions of hours of clips found in the public lexicon, Mattmann said the predicament gets even worse.

“It’s at that point when they’re literally indiscernible, even from computer programs, that we have a big problem when we start not being able to do some of these steps, like attribution and detection. That’s the moment that you hear everyone worrying about, including myself,” he said.

Years ago, spoof calls and spam calls were typically generated based on a combination of statistical methods and audio dubbing and clipping. Since public figures, such as Biden, are on record with a voice saying many words known to the public, the use of software and careful attention to things like voice tone and background can produce audio that is difficult to differentiate from authentic recordings.

Even on a weak computer, these AI voice models can fully replicate a human voice in around two to eight hours. However, Microsoft’s new text-to-speech algorithm, VALL-E, has shaved voice cloning down to a fraction of the time.

A touchscreen voting machine and printer are seen inside a voting booth, in Paulding, Ga.

“This can take three seconds of your voice and it can clone it. And when I say clone it, I mean clone it in the sense that words that Joe Biden has never said, it can actually make him say,” Mattmann said.

The rapid acceleration of voice cloning software is largely due to data collected by virtual voice assistants. Products like Amazon Alexa, Google Assistant, Apple’s Siri and more have been capturing snippets of people’s voices for years, all of which are used to train the AI and make them better at replicating speech.

In some cases, tech giants have even kept consumers’ voice recordings without the informed consent that the law requires. In May, Amazon was ordered to pay $25 million to settle a lawsuit after regulators said the company violated privacy laws when it kept children’s voice recordings “forever.”

“Basically, they’ve been listening to our data for years, whether we clicked yes or not,” Mattmann said.

AI-manipulated content is not exclusive to threat actors. Over the last year, several political groups and politicians have used the tech to target their opponents.

Florida Gov. Ron DeSantis was the first United States presidential hopeful to use the technology in a political attack advertisement. In June, DeSantis’ now-disbanded campaign posted AI images of former President Donald Trump and Dr. Anthony Fauci hugging. Twitter, responding to concerns that the images may manipulate voters, soon added a “context” bubble for readers.

“It was through the looking glass. It was the Alice in Wonderland moment. It’s like, gosh, you know, they could immediately the same day respond with this,” Mattmann said.

President Biden’s administration and members of Congress have attempted to adopt some policies related to artificial intelligence. (iStock)

In December, another political ad using AI was unveiled, this time by the House Republicans’ campaign arm. The advertisement featured AI-generated pictures depicting migrant camps across some of the most famous monuments and national parks throughout the United States.

Mattmann, an experienced AI expert, said the federal government has asked the Federal Election Commission and other organizations to experiment with labels that can be added to AI-generated campaign products to inform voters about how they were created. However, this rule has yet to be adopted.

While there are methodologies and computer programs to discern if content in the political space is AI-generated, he said many research groups do not have access to them and there are few, if any, campaigns that understand the technical aspect.

“In political campaigns and things like that too, this is going to take us into a realm audio-wise and just beyond that, audio, text, multimodal video, in which they’re going to have tools in not this election cycle but the next one that is ready for this. But the challenge is now,” Mattmann added.