It can be challenging to keep pace with the rapidly evolving AI industry. Until an AI can do the job for you, this roundup collects recent machine learning news and noteworthy research that may have slipped under your radar.

This week in AI, the spotlight falls on data labeling and annotation companies such as Scale AI, which is reportedly in talks to raise new funding at a $13 billion valuation. Flashy generative AI models like OpenAI’s Sora may get more attention than labeling and annotation platforms, but these foundational services are indispensable: without them, modern AI models arguably would not exist.

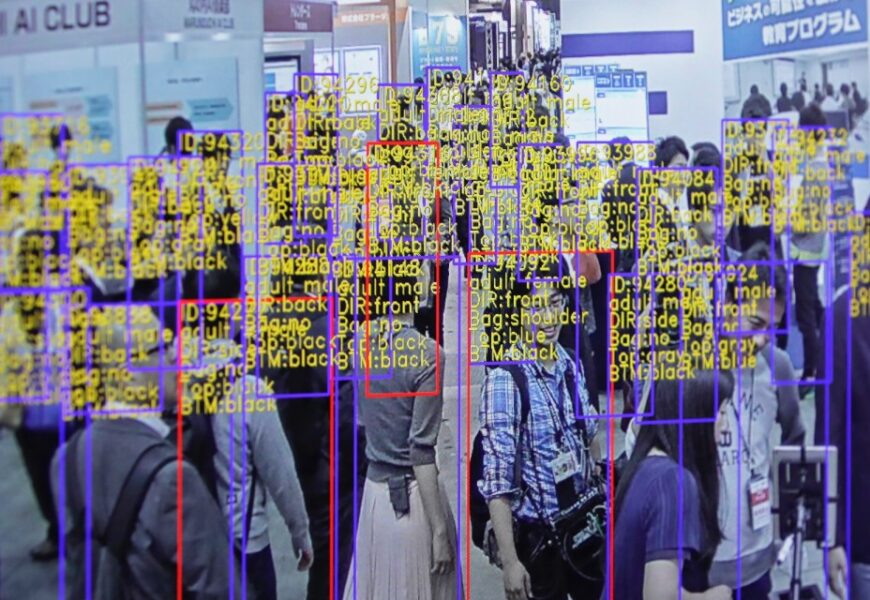

Many models can only be trained with labeled data. Why? Labels, or tags, help models understand and interpret the data they see during training. For example, the labels used to train an image recognition system might take the form of markings drawn around objects (“bounding boxes”) or captions naming each person, place, or object depicted in an image.

How well a trained model performs, and how reliable it is, depends heavily on the accuracy and quality of its annotations. Larger and more complex datasets can require anywhere from hundreds to millions of labels.
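To make the idea of a label more concrete, here is a minimal sketch of a bounding-box annotation, assuming a simple (x_min, y_min, x_max, y_max) pixel format. The class and field names are hypothetical and do not reflect Scale AI’s or any other platform’s schema. The sketch also computes intersection over union (IoU), one common way to measure how closely two annotators’ boxes for the same object agree, which is one proxy for annotation quality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp each side at zero so empty or inverted boxes have no area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass(frozen=True)
class Annotation:
    """One label: a class name attached to a region of an image (hypothetical)."""
    image_id: str
    label: str
    box: BoundingBox


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    # The overlap region is bounded by the inner edges of both boxes;
    # if the boxes do not intersect, area() clamps it to zero.
    overlap = BoundingBox(
        max(a.x_min, b.x_min),
        max(a.y_min, b.y_min),
        min(a.x_max, b.x_max),
        min(a.y_max, b.y_max),
    )
    inter = overlap.area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


# Two annotators label the same dog in the same image.
first = Annotation("img_001", "dog", BoundingBox(10, 10, 110, 110))
second = Annotation("img_001", "dog", BoundingBox(20, 20, 120, 120))
print(round(iou(first.box, second.box), 3))  # prints 0.681
```

A labeling pipeline might, for instance, send annotations back for review when two labelers’ boxes fall below some IoU threshold; where to set that threshold is a project-specific design choice, not a fixed standard.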

You might expect data annotators to be paid fairly and treated as well as the engineers who build the models. Often, the reality is the opposite, a product of the harsh working conditions that some annotation and labeling startups foster.

An exposé by NY Mag details Scale AI’s practices, which include sourcing annotators from as far away as Nairobi, Kenya. Some labelers are expected to work multiple eight-hour shifts without breaks and may be paid as little as $10 for their work. Workers are also at the mercy of the platform’s whims: labelers in countries such as Thailand, Vietnam, Poland, and Pakistan have faced long payment delays or been abruptly removed from the platform.

Some labeling platforms tout their commitment to ethical practices, but as MIT Tech Review’s Kate Kaye points out, there are no stringent regulations or industry standards defining what ethical labeling work means. That gap underscores the need for greater oversight and accountability in the sector.

Barring a major technological breakthrough, the need to annotate and label data for AI training is not going away. The platforms could regulate themselves, but the more realistic solution appears to be policymaking. That is a tricky path, but it is arguably the best chance to change things for the better, or at least to start.

Additional Recent AI Developments to Note:

- OpenAI’s Voice Cloner: OpenAI unveils Voice Engine, a novel AI tool allowing users to replicate a voice from a brief 15-second audio snippet. Despite its potential, OpenAI opts not to widely release the tool, citing concerns over misuse.

- Amazon Reinforces Anthropic: Amazon invests an additional $2.75 billion in Anthropic, completing the $4 billion investment it announced in 2023.

- Google’s Charitable Arm Launches Accelerator: Google’s philanthropic arm, Google.org, introduces a $20 million initiative to support nonprofits developing generative AI technologies.

- AI21 Labs Introduces Jamba: AI startup AI21 Labs debuts Jamba, a generative AI model leveraging state space models (SSMs) to enhance efficiency.

- Databricks Unveils DBRX: Databricks releases DBRX, a generative AI model akin to OpenAI’s GPT series and Google’s Gemini, which the company says outperforms existing open models on a range of popular AI benchmarks.

- EU Proposes Election Security Guidelines: The European Union releases draft guidelines on election security for digital platforms, including measures to counter generative AI-driven disinformation.

- X’s Grok Chatbot Upgrade: X’s Grok chatbot is getting an upgraded underlying model, Grok-1.5, and access to Grok is expanding to all X Premium subscribers.

- Adobe Expands Firefly Services: Adobe launches Firefly Services, a suite of more than 20 generative and creative APIs, tools, and services, along with Custom Models, which lets businesses fine-tune Firefly models on their own assets.

As AI continues to advance, research such as Google’s SEEDS, a diffusion-based system for generating weather forecast ensembles, and Fujitsu’s underwater image analysis illustrates the breadth of its applications, from weather forecasting to environmental monitoring and simulation.

Understanding the inner workings of AI models, addressing bias, and upholding ethical standards remain central to responsible AI development and deployment. The intersection of AI and search also warrants ongoing scrutiny to mitigate potential risks and keep practices ethical.