According to a firm working with the government’s AI Safety Institute, the UK should prioritize setting international standards for testing artificial intelligence rather than trying to carry out all of the evaluation itself.

Marc Warner, the CEO of Faculty AI, warned that the newly established institute could end up responsible for assessing a wide range of AI models, including the technology behind chatbots such as ChatGPT.

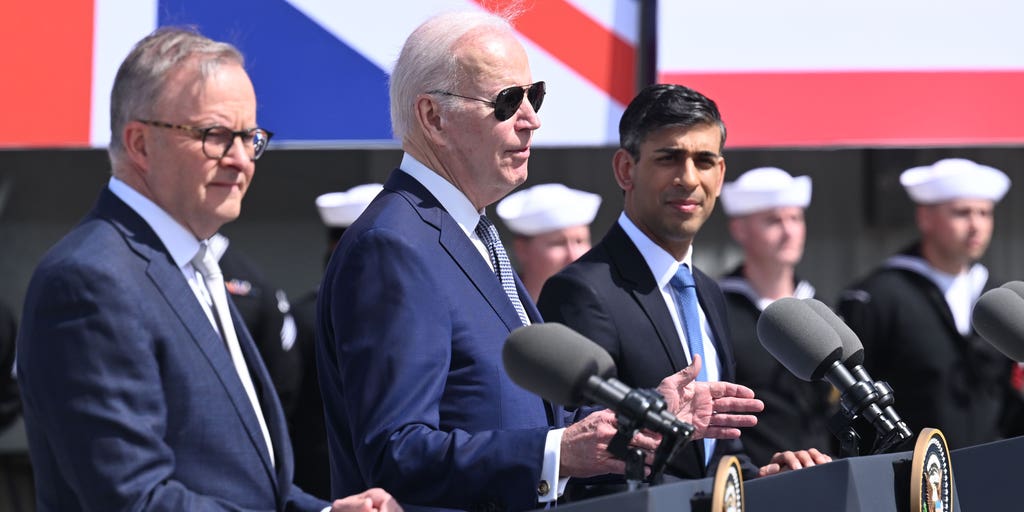

Rishi Sunak announced the AI Safety Institute (AISI) last year ahead of the global AI safety summit, at which major tech companies pledged to cooperate with the EU and 10 countries, including the UK, US, France, and Japan, in testing advanced AI models before and after deployment.

The UK plays a prominent role in this collaborative effort because of its advanced work on AI safety, underlined by the creation of the institute.

Warner, whose London-based company works with the UK institute to test AI models for compliance with health regulations, said the institute should become a global leader in setting testing standards.

Rather than managing everything itself, he said, the institute should set standards for the wider world.

Warner commended the institute for its promising start and remarked on how quickly it has moved for a government initiative, while noting that the technology is evolving fast as well.

He suggested that, rather than taking on the entire evaluation task itself, the institute could establish standards that governments and businesses worldwide could follow, such as ‘red teaming,’ a practice in which experts simulate misuse of an AI model.

Warner proposed that the institute set high standards for ‘red teaming’ AI models that other governments and companies could test against, offering a more scalable and sustainable approach to keeping these systems safe.

Speaking to the Guardian shortly before the institute published an update on its testing program, Warner acknowledged the limits on its capacity and indicated that AISI would focus on evaluating only the most advanced systems rather than every released model.

The Financial Times has reported that leading AI companies are urging the UK government to speed up its safety tests for AI systems. Signatories to the voluntary testing agreement include Google, OpenAI, Microsoft, and Meta.

The US has also announced an AI safety institute that will take part in the testing program unveiled at the Bletchley Park summit. The Biden administration recently announced a consortium to support the objectives of its executive order on AI safety, including the development of guidelines for watermarking AI-generated content. Members of the consortium, which sits under the US institute, include Meta, Google, Apple, and OpenAI.

The UK’s Department for Science, Innovation and Technology emphasized the key role that governments around the world must play in testing AI models. It said the UK’s AI Safety Institute, the world’s first AI safety institute, is driving that effort through evaluations, research, and information sharing that raise global understanding of AI safety.

The department added that the institute’s work will continue to help inform policymakers around the world on AI safety.