Can a machine tell that you are angry just by looking at your face? Can your writing reveal how anxious you are? Could a machine understand your emotions better than you do?

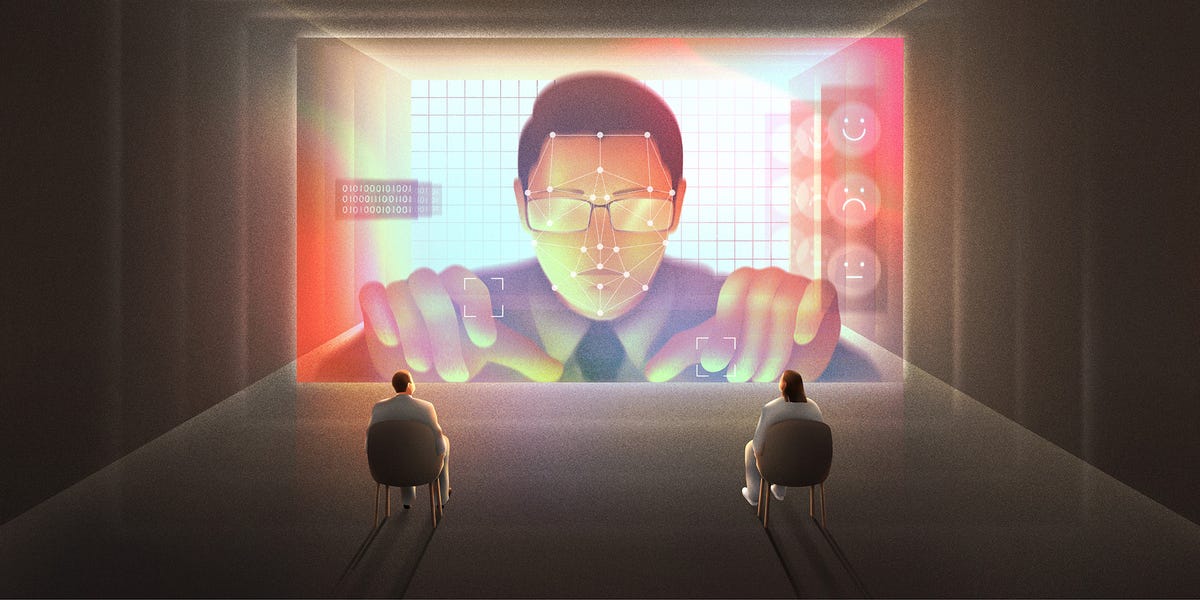

A wave of startups believes the answer to all of these questions is a resounding yes. Many companies are promoting the idea that emotion-aware artificial intelligence (EAI) can detect subtle facial movements that other people might miss, interpret those small movements as emotions, and turn private feelings into measurable data. Proponents claim that AI can distinguish between emotions such as joy, confusion, anger, and even nostalgia. In theory, this would let computers recognize our emotions, possibly even when we are not consciously aware of them.

For businesses, EAI could be lucrative. Knowing what consumers genuinely think and feel, and what they are like, would make products far easier to market. Imagine browsing a shopping app and coming across an item you dislike: with EAI, the app could detect your reaction immediately and show you content designed to lift your mood, making a purchase more likely. Some companies already use the technology in smart products, automation systems, vehicles, and empathetic AI chatbots. EAI could also be put to work in the workplace, where employers could gauge the psychological state of job candidates and current employees and use that information to make decisions aimed at boosting productivity and streamlining operations.

There is a catch, however: whether EAI actually works is still debated. Experts disagree about how reliable the technology is, and some critics argue that inferring human emotions from physical expressions alone is fundamentally flawed. That skepticism has not stopped businesses from using EAI to monitor their workforce, gauge employee sentiment, and inform hiring and firing decisions. The spread of AI into this corner of the workplace raises concerns about misuse and invasion of privacy: employees may be monitored without knowing it, and their thoughts and emotions could be used against them.

Functionality of Emotion-Aware Artificial Intelligence

Emotion AI has been in development since the late 1990s, but recent technological advances and a surge of interest have propelled the industry forward. With abundant training data now available, companies can deploy systems that analyze physiological signals such as body posture, breathing patterns, heart rate, perspiration, and changes in skin conductance. These systems are used for a wide range of purposes, including detecting insurance fraud, diagnosing depression, and monitoring student engagement in online classes. Some versions of EAI focus on speech, tracking subtle shifts in tone and pauses in conversation; others analyze facial expressions and gaze. Still others examine written text and produce detailed reports on the emotions a writer may have conveyed unintentionally.

One company, Smart Eye, has built a large database of emotion data by analyzing more than 14.5 million videos of the facial expressions of willing participants. According to Gabi Zijderveld, Smart Eye's chief marketing officer, the company's EAI technology excels at “understanding, supporting, and predicting human behavior” by examining facial expressions, body movements, and visual cues to infer people's mental and cognitive states.

For example, Smart Eye uses the technology in driver monitoring systems that combine eye tracking and visual analysis to detect driver fatigue. It also provides analytics to the marketing and entertainment industries, analyzing the facial expressions of paid viewers as they watch advertisements or movie trailers. Companies such as CBS and Disney then use this data to refine their products and marketing strategies based on audience reactions.

Employee Sentiment Monitoring

Using EAI in the workplace may seem harmless or even helpful, but it carries significant risks. Employees may not know the technology is being used on them, and this kind of monitoring can cause discomfort, emotional strain, or pressure to perform.

Utah-based HireVue introduced EAI facial analysis into its job interviews in 2014 to evaluate applicants' skills, cognitive abilities, psychological traits, and emotional intelligence. Although the stated goals were to reduce bias and identify the strongest candidates, critics questioned whether the technology actually worked. The Electronic Privacy Information Center filed a complaint alleging unfair practices and citing bias against women, people of color, and neurodivergent applicants in the hiring process.

After public backlash, HireVue announced in 2021 that it would stop using facial analysis and would instead analyze candidates' speech, intonation, and behavior during interviews. The company argued that language analysis alone could offer insight into candidates' mental states, but critics remain skeptical that the technology is neutral.

Another platform, Retorio, combines visual analysis, body-pose recognition, and voice analysis to build profiles of candidates' personality traits and soft skills for recruiters. The company says its approach eliminates discrimination and relies on applicant consent, yet concerns about bias and privacy persist.

Emotion-recognition technology is used beyond hiring, too. Call centers employ EAI to gauge customer sentiment and give customer service representatives real-time feedback. Representatives may be prompted to adjust their tone to match algorithm-defined standards, which some workers find confusing or unsettling.

Some companies are going further still, for instance by building biosensors into office furniture to track physiological indicators such as heart rate and stress levels. These initiatives are marketed as tools for improving employee well-being and productivity, but critics warn that such intrusive monitoring raises serious privacy and ethical problems.

Challenges and Ethical Concerns

Beyond the ethical dilemmas, whether emotion AI even works remains contested. Psychologist Paul Ekman's foundational research, which proposed that basic emotions are expressed through universal facial expressions, has drawn criticism in recent years. Studies questioning whether emotions can be reliably read from facial expressions alone point out that the way people express emotion varies widely across contexts and cultures.

EAI proponents say their algorithms account for cultural differences, but because the technology is proprietary, these claims are hard to verify independently. This lack of transparency raises concerns about the accuracy and reliability of emotion-recognition systems and calls into question whether their widespread adoption is justified.

The deployment of EAI in workplace settings underscores the growing trend of companies intruding into employees’ private emotions, raising profound ethical and privacy concerns. The potential misuse of emotion-recognition technologies in hiring, performance evaluation, and employee monitoring poses significant risks to individual autonomy and well-being. As businesses navigate the ethical and regulatory landscape surrounding emotion AI, the need for transparency, accountability, and safeguards to protect employees’ rights becomes increasingly critical.