According to a recent study on the darker side of the technology, tens of millions of people are using AI-powered "nudify" applications. In September alone, more than 24 million people visited AI undressing websites, which use deep learning algorithms to digitally alter photos, particularly of women, so that the subjects appear nude. Because the underlying models are trained on existing images of women, they can superimpose realistic nude body parts onto a photo regardless of what the subject is actually wearing. Promotion of these apps has also surged: advertising links for them across major platforms have increased by more than 2,000 percent since the beginning of 2023.

Social media platforms such as Google's YouTube and Reddit, along with various Telegram groups, have been implicated in promoting non-consensual intimate imagery (NCII) services, contributing to the growing popularity of nudify apps.

A Telegram spokesperson said the company actively moderates harmful content on its platform, including non-consensual pornography. According to the spokesperson, Telegram's moderators routinely monitor the platform's public areas and act on user reports to remove content that violates its terms of service. DailyMail.com has reached out to X and YouTube for comment.

Recently, AI-generated images of female students were used to target them at a high school in New Jersey, prompting a mother and her 14-year-old daughter to call for better protection against NCII content. In another incident, a boy reportedly used AI tools to create inappropriate images of female classmates at his high school in Seattle, Washington.

In September, an app called "Clothoff" was used to produce fake nude images of more than 20 women, underscoring the alarming spread of AI-generated nudity. A report by the social network analysis firm Graphika examined how NCII providers operate and how they market their AI nudity websites and apps. The report warns that the proliferation of these services could increase online harm, including the creation and distribution of non-consensual nude images, targeted harassment campaigns, sextortion, and child sexual exploitation.

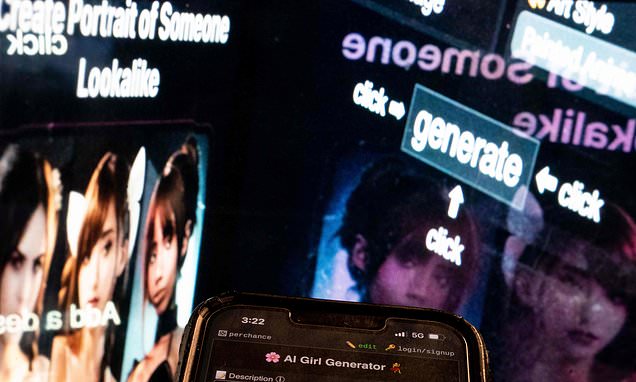

The report also finds that these applications operate on a "freemium" model, offering basic services for free while placing advanced features behind a paywall. To unlock premium features, users must buy additional "credits" or "tokens" priced between $1.99 and $299. Advertisements for NCII apps and websites are explicit about what they offer, promoting services such as "undressing" individuals or producing compromising images of them.

Tutorial videos on YouTube demonstrate how to use nudify apps, while ads on the platform promote similar services. Concerns about the potential harms of generative AI tools have prompted the Federal Trade Commission (FTC) to submit a comment to the U.S. Copyright Office, stressing the need to monitor how such technologies affect consumers.

The absence of specific laws prohibiting deepfake content raises concerns about the misuse of AI-generated materials. Psychotherapist Lisa Sanfilippo emphasizes the severe emotional impact of unauthorized creation and distribution of pornographic images, highlighting the violation of personal boundaries and the potential for lasting trauma.