Creators may have valid concerns about the future of generative AI (GenAI). While GenAI can enhance creativity, it can also be misused to deceive the public and disrupt traditional creative markets. Performers in particular fear that AI-generated images and music could replace human models, actors, or musicians in ways that mislead audiences.

Existing laws provide avenues for performers to tackle this issue. In the United States, most states acknowledge a “right of publicity,” granting individuals control over how their likeness is used commercially. While this right originally aimed to prevent false endorsements in advertisements, its scope has expanded to cover any content that evokes a person’s identity.

Moreover, state laws prohibit defamation, false representations, and unfair competition. These laws offer established mechanisms for addressing the economic and emotional harms of identity misappropriation, while leaving room for protected online expression.

However, some performers advocate for additional measures. They want a consistent right to control their image across all states, and they seek recourse against companies that offer generative AI tools or host deceptive AI-generated content. Conventional secondary-liability rules, such as those in copyright law, generally do not reach companies that merely provide expressive tools like Adobe Photoshop. Section 230 also shields intermediaries from liability for user-generated content, including potential publicity rights violations.

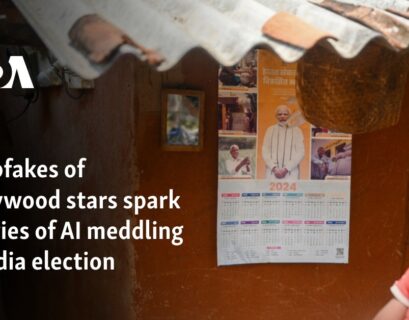

Balancing these interests is difficult, and legislative efforts like the NO FAKES Act have raised concerns. That proposed bill would create a transferable federal publicity right lasting 70 years after death, which could let record labels exploit AI-generated performances of deceased celebrities. Another proposal, the “No AI FRAUD Act,” aims to address AI misuse but has notable flaws.

First, the Act’s broad language covers a wide range of digital content beyond AI misuse. Second, by framing the new right as federal intellectual property, it exposes content hosts to legal risk, because Section 230 does not protect intermediaries against intellectual property claims. Third, although the right is nominally limited to ten years after death, it could extend further depending on how the heirs use it, creating uncertainty. Finally, although the Act claims to protect free expression, its provisions could restrict that expression by prioritizing intellectual property interests over First Amendment rights.

In conclusion, a more precise and balanced approach is necessary to safeguard performers and individuals from deceptive practices without impeding free expression, competition, and innovation. The No AI FRAUD Act, in its current form, falls short of achieving this goal.