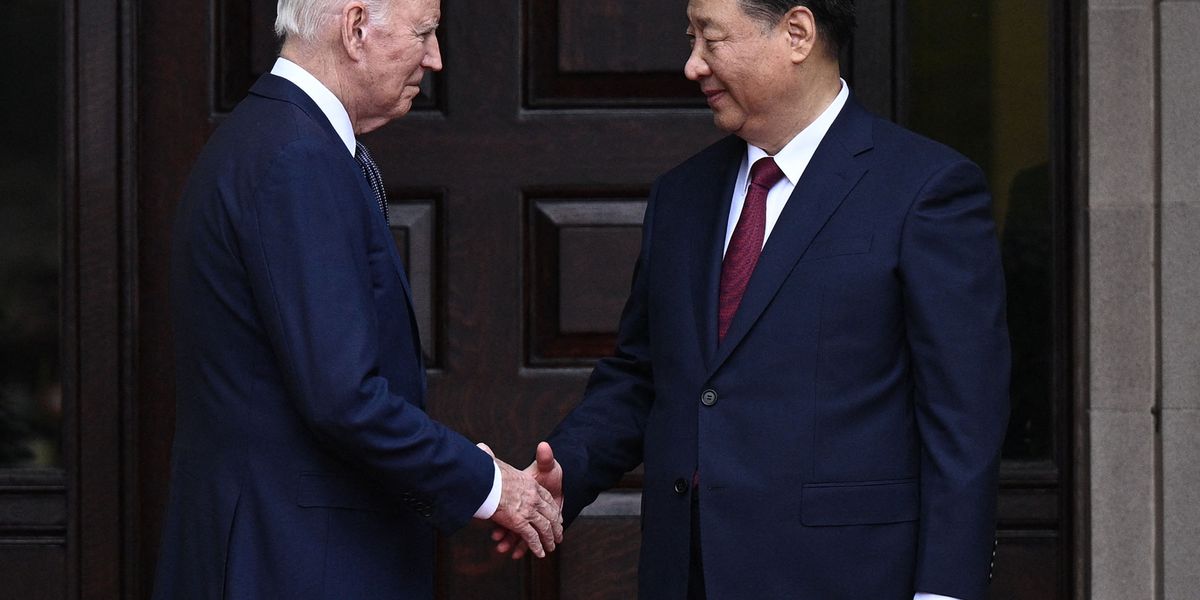

The summit between President Joe Biden and Chinese leader Xi Jinping was largely overshadowed by pandas. The meeting may one day be remembered mainly through a plaque at the San Diego Zoo, assuming people still visit zoos. Yet the summit also took up a weightier subject: artificial intelligence (AI) and its risks.

Before the summit, there was speculation that Biden and Xi might announce a ban on the use of AI in several areas, including the control of nuclear weapons. No such agreement materialized, but both the White House and the Chinese foreign ministry indicated that the US and China may hold future talks on AI risks and safety. Biden said he intends to assemble a panel of experts to address these concerns.

Neither US nor Chinese officials disclosed who would sit on the expert panel or what it would discuss. The conversations could nevertheless cover a broad range of AI risks, from AI systems whose behavior diverges from human values to the contentious issue of lethal autonomous weapons, commonly called “killer robots.” The potential use of AI in making and carrying out nuclear decisions might also be on the agenda.

Despite these concerns, an outright ban on AI is unlikely, for two reasons. First, the line between the AI applications already in everyday use and future advances is blurry, which makes it hard to define what should be restricted. Second, AI is becoming as indispensable to military operations as it is beneficial in civilian life, which makes a ban impractical. Competition among the US, China, and other nations to harness AI for military advantage points to a growing AI arms race.

Among the many AI-related risks, the combination of AI with nuclear capabilities stands out. The intelligence and speed of AI systems, and the central role they could come to play in decision-making, call for a careful examination of the consequences. The emphasis on “responsible” AI development, championed by the US State Department, underscores the need for human oversight of crucial decisions, particularly those involving life and death.

A “human-in-the-loop” approach aims to reduce the risks of autonomous AI systems, particularly in military settings, but it remains unclear how effective human control can be when AI shapes the decisions themselves. Relying on AI for decision support, much as drivers rely on navigation aids, raises questions about how much humans will actually intervene and how vulnerable they are to biased AI-generated data.

As countries like the US and China integrate AI into their military doctrines, the focus shifts toward nuclear postures that reduce dependence on AI-driven assessments. An approach that accepts the ubiquity of AI while safeguarding human agency in critical decisions becomes increasingly important as AI takes on an expanding role.

The rapid evolution of AI calls for strategic exchanges between nations to shape coherent nuclear policies suited to an AI-driven era. Talks between US and Chinese experts should concentrate on nuclear security strategies that recognize the pervasive impact of AI while upholding responsible decision-making.
