At SC23, NVIDIA announced that it has supercharged the world’s leading AI computing platform with the introduction of the NVIDIA HGX™ H200. Based on the NVIDIA Hopper™ architecture, the platform features the NVIDIA H200 Tensor Core GPU, whose advanced memory handles massive amounts of data for generative AI and high-performance computing (HPC) workloads.

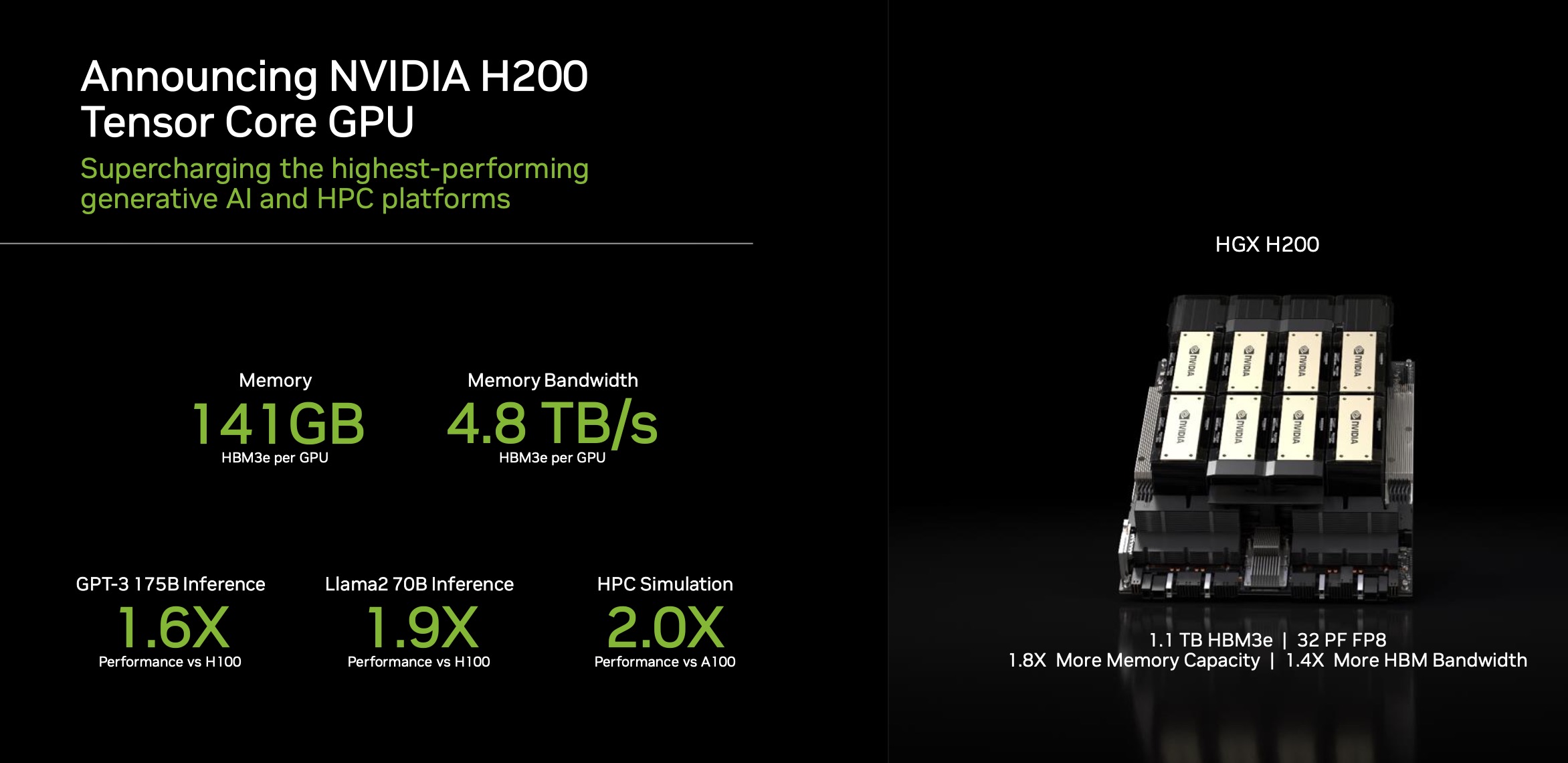

The NVIDIA H200 is the first GPU to offer HBM3e, faster and larger memory that accelerates generative AI and large language models while advancing scientific computing for HPC workloads. With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4 times the bandwidth of its predecessor, the NVIDIA A100.
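
The comparison with the A100 can be checked with simple arithmetic. The sketch below takes the H200 figures as 141 GB and 4.8 TB/s (terabytes per second); the A100 baseline of 80 GB and roughly 2.0 TB/s is an assumption, since the article does not state it, and the constant names are illustrative only.

```python
# H200 figures as quoted in this article.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# ASSUMPTION: A100 80GB baseline; these values are not given in the article.
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBPS = 2.0


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"capacity:  {capacity_ratio:.2f}x")
print(f"bandwidth: {bandwidth_ratio:.2f}x")
```

Under these assumed baseline values, the capacity ratio comes out a little under 2 and the bandwidth ratio at 2.4, which matches the "nearly double" and "2.4 times" wording.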

H200-powered systems from leading server manufacturers and cloud service providers are expected to begin shipping in the second quarter of 2024.

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA. “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

Sustained Performance Enhancements and Technological Advancements

The NVIDIA Hopper architecture delivers a significant performance leap over its predecessor and continues to raise the bar through ongoing software enhancements with the H100, including the recent release of powerful open-source libraries such as NVIDIA TensorRT™-LLM.

The introduction of the H200 is expected to bring further performance gains, including nearly doubling inference speed on Llama 2, a 70-billion-parameter LLM, compared with the H100. Future software updates are expected to deliver additional performance improvements with the H200.
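
To give a rough sense of why memory capacity matters for a model of this size, the sketch below estimates the memory needed just to hold the weights of a 70-billion-parameter model. It assumes 2 bytes per parameter for FP16 and 1 byte for FP8, uses decimal gigabytes, and ignores the KV cache, activations, and runtime overhead, so it is a lower bound rather than a sizing guide. The function name is hypothetical.

```python
def weight_footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory for model weights only, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


LLAMA2_70B_PARAMS = 70e9   # parameter count quoted in the article
H200_MEMORY_GB = 141       # per-GPU memory quoted in the article

# ASSUMPTION: 2 bytes per parameter (FP16) and 1 byte per parameter (FP8).
fp16_gb = weight_footprint_gb(LLAMA2_70B_PARAMS, 2)
fp8_gb = weight_footprint_gb(LLAMA2_70B_PARAMS, 1)

print(f"FP16 weights: {fp16_gb:.0f} GB")
print(f"FP8 weights:  {fp8_gb:.0f} GB")
print(f"FP16 weights fit in one H200's memory: {fp16_gb <= H200_MEMORY_GB}")
```

Under these assumptions the FP16 weights alone come to about 140 GB, which sits just under the 141 GB on a single H200 and leaves almost no room for anything else. Real deployments would need lower precision or several GPUs.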

Form Factors for NVIDIA H200

The NVIDIA H200 will be available in NVIDIA HGX H200 server boards in four- and eight-way configurations, which are compatible with both the hardware and software of HGX H100 systems. It is also available in the NVIDIA GH200 Grace Hopper™ Superchip with HBM3e, announced in August.

With these options, the H200 can be deployed in every type of data center, including on-premises, cloud, hybrid-cloud, and edge. NVIDIA’s global ecosystem of partner server makers, including ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron, and Wiwynn, can update their existing systems with an H200.

Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are expected to be among the first cloud service providers to deploy H200-based instances starting in 2024, in addition to CoreWeave, Lambda, and Vultr.

Powered by NVIDIA NVLink™ and NVSwitch™ high-speed interconnects, the HGX H200 delivers the highest performance on various application workloads, including LLM training and inference for the largest models beyond 175 billion parameters.

An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for the highest performance in generative AI and HPC applications.
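
The aggregate figures follow from the per-GPU numbers quoted earlier in the article. The sketch below is a minimal check, assuming decimal units (1 TB = 1000 GB) and treating "over 32 petaflops" as a lower bound of 32; the helper name is illustrative only.

```python
MEMORY_PER_GPU_GB = 141          # per-GPU HBM3e capacity quoted in the article
EIGHT_WAY_FP8_PETAFLOPS = 32     # "over 32 petaflops", used here as a lower bound


def aggregate_memory_tb(num_gpus: int, per_gpu_gb: float) -> float:
    """Total high-bandwidth memory across a board, in decimal TB."""
    return num_gpus * per_gpu_gb / 1000


eight_way_tb = aggregate_memory_tb(8, MEMORY_PER_GPU_GB)
four_way_tb = aggregate_memory_tb(4, MEMORY_PER_GPU_GB)

# Implied per-GPU FP8 throughput if the board total is split evenly.
per_gpu_fp8_petaflops = EIGHT_WAY_FP8_PETAFLOPS / 8

print(f"eight-way memory: {eight_way_tb:.1f} TB")
print(f"four-way memory:  {four_way_tb:.2f} TB")
print(f"per-GPU FP8 (lower bound): {per_gpu_fp8_petaflops:.0f} petaflops")
```

Eight GPUs at 141 GB each give 1,128 GB, which rounds to the 1.1TB stated above.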

When paired with NVIDIA Grace™ CPUs over an ultra-fast NVLink-C2C interconnect, the H200 forms the GH200 Grace Hopper Superchip with HBM3e, an integrated module designed to serve giant-scale HPC and AI applications.

Leveraging NVIDIA’s Full Stack Software for AI Acceleration

NVIDIA’s accelerated computing platform is supported by powerful software tools that enable developers and enterprises to build and accelerate production-ready applications from AI to HPC. This includes the NVIDIA AI Enterprise suite of software for workloads such as speech, recommender systems, and hyperscale inference.

Availability

The NVIDIA H200 will be available from global system manufacturers and cloud service providers starting in the second quarter of 2024.