AI has become a significant force in the software application development realm, with the deployment of Artificial Intelligence (AI) taking center stage. Industry experts, analysts, AI enthusiasts, and Chief AI Officers (CAIOs) are now integral parts of the tech leadership landscape.

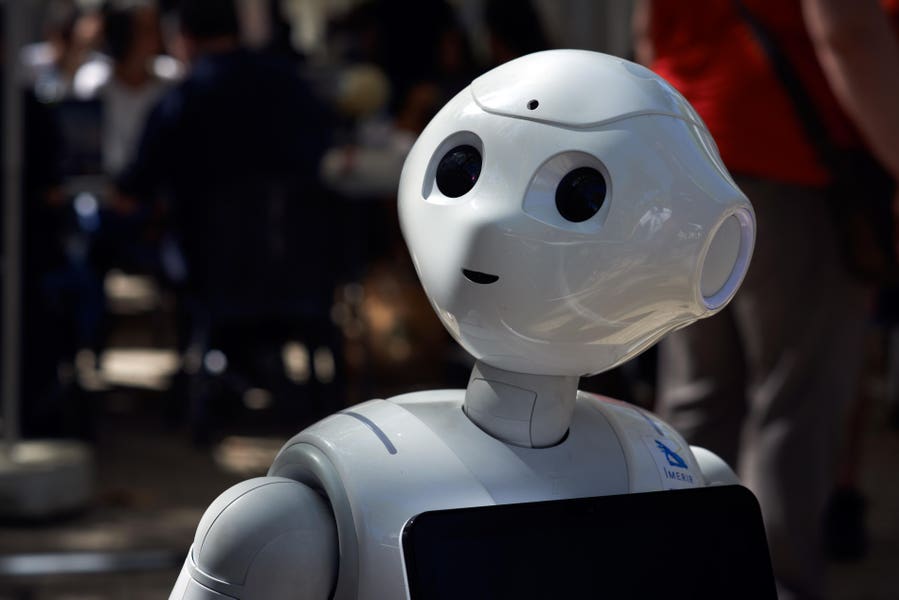

Discussions in this domain often revolve around the necessary backend infrastructure to support the computational requirements of AI, concerns about safeguarding Personally Identifiable Information (PII) in AI processes, and the evolution of AI applications themselves, including the emergence of AI bots with their social media presence.

As the integration of predictive, reactive, and generative AI technologies becomes more mainstream, many new services leverage Natural Language Processing (NLP) techniques powered by Large Language Models (LLMs) such as OpenAI’s GPT, Google AI’s PaLM, Anthropic’s Claude AI, Meta’s LLaMA, BLOOM, and Ernie Titan. Organizations commonly rely on commercially available LLMs as foundational components for developing tailored solutions, sparking conversations about the selection criteria for LLMs and their underlying architectures.

Ysanne Baxter, a solutions consultant at the low-code software platform and process automation company Appian, emphasizes the importance of aligning the choice of LLM with the specific business problem or opportunity at hand. Understanding the nuances of different LLM architectures, training data, strengths, and suitable applications is crucial for optimizing outcomes. Baxter highlights the significance of a robust and diverse dataset for training LLMs to yield optimal results.

In navigating the complexities of AI utilization, Baxter suggests that IT teams consider leveraging low-code development resources to streamline AI projects and facilitate cross-disciplinary collaboration. Low-code tools empower developers to harness existing AI models efficiently, accelerating the development process and enhancing productivity.

The debate between open-source and closed-source models, data, and systems adds another layer of complexity to AI implementation. While open-source projects offer transparency and flexibility, closed-source solutions may provide proprietary advantages but lack transparency. Strategies like Retrieval Augmented Generation (RAG) aim to enhance the reliability of generative language models by integrating external knowledge sources and implementing stringent data protection measures.

Furthermore, Baxter underscores the importance of safeguarding AI systems against vulnerabilities, especially in natural language interactions, to prevent unauthorized manipulations or injections that could compromise system integrity. Implementing robust security measures and user input validation is essential to fortify AI systems against potential attacks.

Looking ahead, the future of AI development is expected to pivot towards task- and industry-specific applications, catering to diverse business contexts and use cases. Collaboration between software developers, domain experts, and non-technical professionals will be pivotal in driving the evolution of AI technologies towards more targeted and impactful solutions.

In conclusion, the evolving landscape of AI necessitates a nuanced and collaborative approach to harnessing the full potential of these technologies, ensuring that advancements in AI integration are guided by real-world insights and domain expertise.