Greetings, comrades,

I'm Nabiha, revisiting the topic of AI and its reliance on data. The ongoing discussions about AI and rights have been illuminating. They show how our current ways of thinking about data and its governance limit our understanding of AI's impact on humanity and of the futures it could bring.

In our daily routines, data is silently collected as we read, browse, shop, and schedule appointments. We usually think about this data in terms of personal choice and consent: Do I want this information exposed? Should I click “accept all cookies”? Does this matter to me?

AI raises the stakes. It not only makes decisions about individuals but also draws sweeping conclusions about entire communities. The patterns extracted from our data shape automated systems that affect our fundamental rights, including decisions about loan eligibility, job prospects, and salaries. It is crucial to shift our focus from individual concerns to collective implications. Should I willingly provide data that could be used to profile people like me based on family ties, gender, or political beliefs?

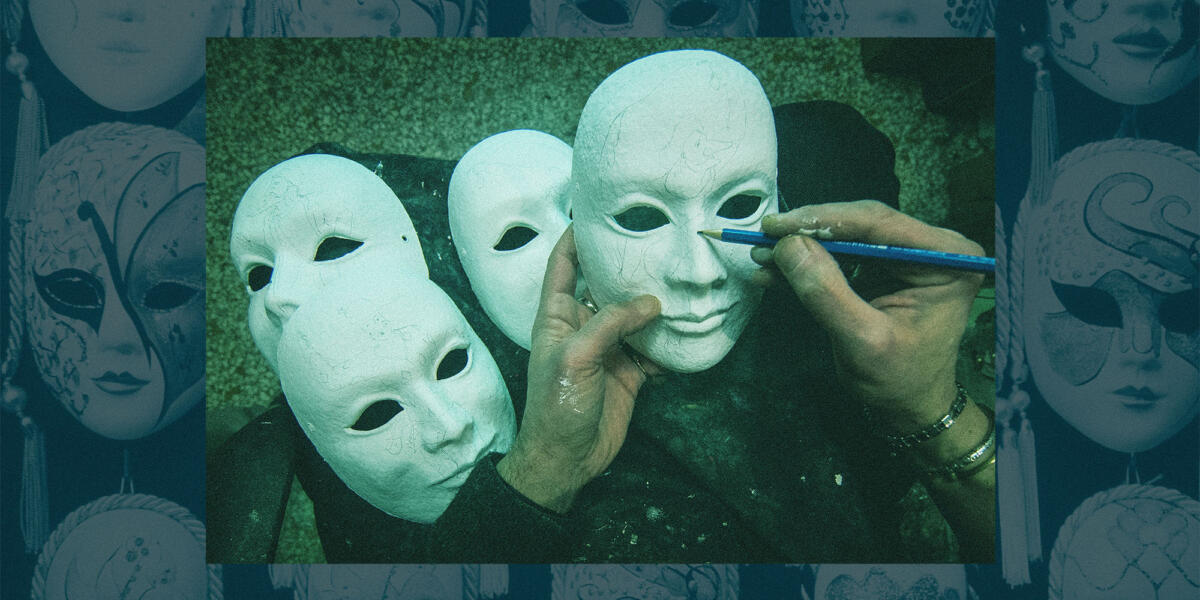

I deeply admire Dr. Joy Buolamwini's advocacy for ethical AI, which centers accountability and algorithmic justice. Her new book, “Unmasking AI: My Mission to Protect What Is Human in a World of Machines,” examines the risks posed by today's AI technologies. To forge a better path forward, we must think collectively, engage actively, and innovate differently. Like Buolamwini, we are pioneers in this evolving landscape.

Read on for our conversation about “the excoded,” facial recognition at airports, and what it means to be “living in the age of the last masters.”

(This interview has been condensed for clarity and conciseness.)

Joy Buolamwini, Ph.D., author. Photo: Morgan Lieberman/Getty Images

Syed: During my recent air travels, I was asked to scan my face before boarding several flights, and the process was chaotic. What is the rationale behind this practice?

Joy Buolamwini, Ph.D.: Despite mounting evidence of bias and privacy concerns, government entities continue to embrace facial recognition technology, often citing efficiency and customer service. The potential misuse of this data in law enforcement and military applications underscores my reservations about biometric surveillance technologies. Recently, I came across footage of quadruped robots armed with guns, possibly integrated with facial recognition and other biometric tools, which raises significant ethical dilemmas. What happens when civilians are mistaken for combatants? Who faces heightened scrutiny? As modern dehumanization tactics evolve, what new forms of warfare emerge? Could machine brutality compound police aggression? History records the dehumanizing use of dogs against enslaved people, a parallel not lost on me. The sight of these robots evokes images of activists confronting police dogs, underscoring the inherent dangers of such systems, compounded by the known biases in facial recognition technology.

As a researcher, I can foresee where expanding facial recognition at airports and integrating it into government services will lead. These contexts vary significantly, but normalizing facial recognition in the name of security raises serious privacy concerns. Facial scans may seem convenient, but they encroach on our biometric rights and data privacy. I strongly encourage people to opt out of facial scans, especially when traveling, to protect their privacy.

Syed: My airport encounters taught me a lot about facial recognition technology, and they also present opportunities for advocacy. They reflect the growing awareness of surveillance technologies since your seminal work, “Gender Shades.” What progress have we made, and what lies ahead?

Buolamwini: Since the publication of “Gender Shades,” co-authored with Dr. Timnit Gebru, and follow-up research like the “Actionable Auditing” paper with Deb Raji, corporate practices have changed. All of the U.S. companies we audited have stopped selling facial recognition technology to law enforcement in some capacity. More than a dozen jurisdictions have passed laws restricting police use of facial recognition. This progress is pivotal, because without such safeguards we get cases like the Detroit Police Department's wrongful arrests. The experiences of people like Porcha Woodruff, who belongs to the group I call “the excoded,” epitomize the nightmare of algorithmic discrimination and exploitation.

Porcha was wrongfully arrested while eight months pregnant after an AI-powered facial recognition system misidentified her, a stark example. Her ordeal is not unique: three years earlier, Robert Williams was wrongfully arrested in front of his young daughters. These cases underscore the prevalence of racial bias in facial recognition technologies. We must halt the reckless deployment of AI so that no one else endures the trauma Porcha, Robert, and their families faced.

People like Michael Oliver, Nijeer Parks, and Randal Reid, whose names may not have made headlines, have also been wrongfully arrested because of AI errors. No one is immune to AI-related harms; any of us can become excoded. AI-powered facial recognition is spreading across domains from employment to healthcare and transportation, creating risks of misidentification and laying the groundwork for algorithmic surveillance if it is widely adopted, for example by the Transportation Security Administration at U.S. airports.

How will people develop expert calluses if AI systems replace the earlier tasks once considered routine necessities?

Joy Buolamwini, Ph.D.

Since “Gender Shades,” advances in AI have broadened the use of biometric data beyond facial analysis. The emergence of AI systems that generate deepfakes from biometric data underscores the need for robust biometric rights that protect our identities from exploitative algorithms. Artists and authors have raised concerns about the unauthorized use of their original works, highlighting the ethical dilemmas of AI-driven content creation. By demanding fair compensation and control over their creations, artists can push back against algorithmic exploitation. The excoded include people across many professions who risk displacement as automation encroaches on their roles. Even public figures like Tom Hanks are not immune: his digital likeness was used in an advertisement without his consent.

A brief digression: I was recently featured in Rolling Stone alongside other AI critics, a testament to our long-standing efforts to raise awareness of AI risks. The photo shoot featured a golden electric guitar that rekindled my interest in playing, and when I returned to the instrument, I rediscovered my calluses. The experience made me reflect on how expertise could be lost as AI systems take over routine tasks, pointing toward a future in which mastery may become a dying art.

Syed: Many stakeholders in the business world advocate for ethical AI and responsible innovation. I understand you have spoken with technology leaders like Sam Altman, former CEO of OpenAI. How can businesses mitigate harm effectively?

Buolamwini: In my conversation with Sam, I emphasized that businesses claiming accountability must align their actions with ethical standards. As a new member of the Authors Guild and the National Association of Voice Actors, I am particularly attuned to protecting artistic integrity. Upholding the four C's (consent, compensation, control, and credit) can counter algorithmic exploitation. Artists deserve fair pay and autonomy over their creations from the outset, not as an afterthought. Data sets and AI training procedures must be scrutinized to prevent irresponsible practices. Venture capitalists and businesses should adopt stringent data governance policies that make the sourcing of AI training data transparent. Establishing robust data pipelines and trade protocols can pave the way for ethical AI development while reducing the economic costs of training AI models.

The phenomenon of context collapse, in which AI models designed for one use case are repurposed for another, poses risks for startups and venture capitalists considering AI integration. Consider the cautionary tale of a U.S. startup that set out to detect early signs of Alzheimer's disease using voice analysis. Despite its noble intentions, misdiagnoses occurred when a model trained on English-speaking Canadians was applied to French-speaking Canadians.

At the Algorithmic Justice League, we are developing a monitoring framework that serves as an early warning system for emerging AI risks. The initiative reflects the importance of post-deployment feedback mechanisms that identify and address AI-related harms proactively.

Syed: Initiatives like the U.K. AI Safety Summit, the U.S. executive order, and the EU's AI regulation discussions show how complex AI governance has become. In your view, where are institutions falling short, and where are they making strides?

Buolamwini: The EU AI Act's clear directives, such as its proposed ban on live facial recognition in public spaces, exemplify a proactive approach to AI governance. It is heartening to see the shift from indifference to active engagement on AI bias and computational fairness since my early work in 2015, which led to the founding of the Algorithmic Justice League. Making AI governance a national and global priority is essential. The White House's inclusion of protections against algorithmic discrimination in the Blueprint for an AI Bill of Rights is commendable. However, enforcement mechanisms mainly reach government bodies and well-resourced jurisdictions, so we need broader policy frameworks that go beyond voluntary corporate commitments. Restitution, a critical issue often overlooked in AI governance discussions, also demands attention. How do we address failures and redress past injustices that AI-related harms have made worse?

Syed: People's tendency to favor recommendations from automated systems, even when non-technological evidence contradicts them, is concerning. The “Homer Simpson problem,” in which passive compliance masquerades as genuine engagement, raises red flags. What concerns keep you awake at night?

Buolamwini: The tokenistic inclusion of humans at the last step of a decision loop is emblematic of a flawed system. The plight of the excoded and the risk of being marginalized by AI systems weigh heavily on my mind. People denied jobs or vital services because of algorithmic bias in healthcare and housing epitomize the structural injustices AI perpetuates. The prospect of AI systems deepening structural violence, degrading quality of life, and entrenching inequality under the guise of neutrality is deeply troubling. My sleep is haunted by armed robot dogs.

Syed: The fear that striving for progress may yield no tangible results is sobering. How can we move toward algorithmic justice?

Buolamwini: I firmly believe that diverse voices, including yours, are pivotal in shaping the conversation about AI and ensuring equitable technological progress. Achieving algorithmic justice means our narratives are shaped by our shared stories, not dictated by our data. The experiences of people like Porcha and Robert show why those stories matter. By questioning whether AI systems actually work in critical domains like healthcare, education, and employment, we move beyond mere rhetoric. Advocating for legislation that protects civil rights, creativity, and biometric data is essential to achieving algorithmic justice.

I extend my gratitude to Dr. Joy and other advocates striving for inclusive and ethical AI frameworks.

Thank you for your attention!

Nabiha Syed

Executive Director

The Markup

P.S. Hello World will be on hiatus next week for the Thanksgiving holiday. We'll be back on Saturday, Dec. 2.