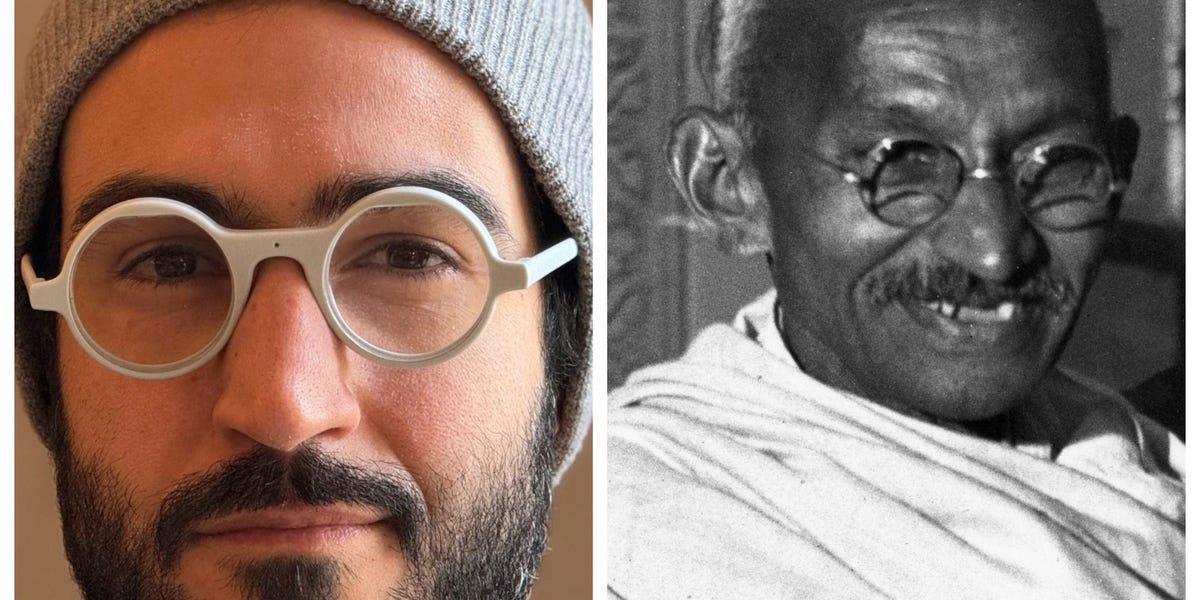

What If You Had a Digital Doppelgänger or an AI Replica?

Advances in artificial intelligence, voice cloning, and deepfakes have made it possible to mimic real people online, whether they are alive or deceased. When used with the person's consent and input, AI replicas have many promising applications, such as speeding up content creation, enhancing personal productivity, and building digital legacies. However, the same technology has also enabled new forms of exploitation, including scams and deceptive deepfakes that use AI-generated replicas of people without their authorization.

Recently, a company lost $25 million after an employee was deceived by a deepfake video conference that impersonated the company's chief financial officer and other colleagues. Taylor Swift's fans took to social media to demand the removal of explicit AI-generated images that used her likeness. In 2022, the FBI reported cases of deepfakes being used in fraudulent applications for remote jobs.

Many terms are used interchangeably for AI replicas, including AI duplicates, avatars, digital twins, entities, personas, figures, and digital beings. There is not yet a single term for AI replicas of living people, but terms such as thanabots, griefbots, deadbots, deathbots, and ghostbots have been coined for replicas of deceased people. When an AI-altered or AI-generated image or video is misused to deceive people or spread false information, it is commonly called a "deepfake."

In my production Elixir: Digital Immortality, which centers on a fictional tech startup that sells AI replicas, I have spoken with many people since 2019 about their views on digital twins and AI replicas. The most common audience reactions have been curiosity, concern, and caution. Recent research suggests that having an AI replica can raise issues such as "doppelgänger-phobia," identity disassociation, and the formation of false memories.

Potential Adverse Psychological Effects of AI Replicas

AI replicas can have several negative psychological effects, particularly when they are stolen, manipulated, or misused.

1. Anxiety and apprehension about the use of AI replicas, including deepfakes

Key concerns center on the security and privacy of AI replicas and the data used to build them. Existing podcasts, videos, photos, books, and articles already contain much of the data needed to replicate a person's voice or likeness. Research on identity theft and algorithmic misuse suggests that the unauthorized use of one's AI replica can cause anxiety, stress, and feelings of violation and powerlessness. The term "doppelgänger-phobia" describes the fear of having an AI replica.

2. Erosion of trust and growing uncertainty as the line between reality and fiction blurs

Without careful scrutiny, it can be difficult to tell authentic videos, images, or voices from AI-generated ones. Research indicates that AI-generated faces can appear more lifelike than real human faces. As a result, the public may need to rely on external verification to determine whether media is authentic. Because people tend to believe negative online content more readily than positive content, deepfakes are likely to reinforce existing biases. Some public figures have exploited this uncertainty by dismissing authentic media as fake, a tactic known as "the liar's dividend."

3. Concerns regarding the integrity of AI replicas and identity fragmentation

Digital identities can let people present more polished personas, but they can also contribute to identity fragmentation. How having an AI replica affects a person's identity will likely depend on the context, the application, and how much control the person has. Ideally, people should be able to directly control how their AI replicas behave and what traits they display. For example, adjusting a model's "temperature" setting is one way to regulate how random or predictable its output is.
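To make the idea of "temperature" more concrete, here is a minimal Python sketch of temperature-scaled softmax, a common way language models convert raw scores into probabilities before choosing the next word. The candidate words, scores, and function names are hypothetical and chosen only for illustration; this is not how any particular AI replica product implements the setting.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw model scores (logits) into probabilities.

    A temperature below 1 sharpens the distribution (more predictable output);
    a temperature above 1 flattens it (more random output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the largest value to avoid overflow in exp()
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, logits, temperature, rng):
    """Pick one word at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidate next words and scores, for illustration only.
words = ["hello", "hi", "greetings"]
logits = [2.0, 1.0, 0.5]

rng = random.Random(0)  # fixed seed so the example is repeatable
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: top-word probability={probs[0]:.2f}, "
          f"sample={sample_next_word(words, logits, t, rng)!r}")
```

Lower temperatures concentrate probability on the highest-scoring word, so a replica would tend to respond more consistently; higher temperatures spread probability across more options, producing more varied and less predictable responses.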

4. Fabrication of false memories

Studies of deepfakes show that interacting with a digital replica can shape people's perceptions even when they know the replica is artificial. This can create false memories about the people depicted, potentially damaging their reputations if they are misrepresented. Even positive false memories may have unexpected and complicated personal consequences. Interacting with one's own AI replica may also lead to false memories.

5. Interference with the grieving process

The psychological impact on loved ones of griefbots, thanabots, and other AI replicas of deceased people remains largely unexplored. Some grief therapy approaches involve an "imaginal dialogue" with the deceased person, typically as a final step after several sessions under professional supervision. Platforms offering griefbots or thanabots may need to involve qualified therapists to support responsible and ethical use.

6. Heightened reliance on verification mechanisms

The growing use of deepfakes to commit fraud and bypass biometric authentication calls for stronger verification protocols. One projection estimates that by 2026, 30% of businesses may lose confidence in existing biometric authentication solutions. As a result, consumers may face additional authentication requirements.

Potential Solutions: Education, Deepfake Detection, and Advanced Authentication

Potential strategies for curbing the misuse of AI replicas and deepfakes include raising public awareness, requiring disclosure of AI-generated content, deploying deepfake detection tools, and advancing authentication technologies. Educating the public about deepfakes and AI replicas can help people recognize and report suspicious online interactions.

Platforms could require users to disclose when uploaded media is AI-generated or manipulated, although enforcement may be difficult. YouTube, for example, recently introduced a policy requiring creators to disclose AI-altered content when uploading it. Building deepfake detection into video conferencing and messaging platforms could alert users to manipulated media in real time. Finally, developing new identification technologies that go beyond face and voice biometrics will be essential to protect consumers and combat fraud.

Marlynn Wei, J.D., Copyright 2024