At the Consumer Electronics Show (CES), I watched a keynote presentation about the Rabbit R1, an AI device designed to act as a personal assistant, and it left me with a sense of unease.

My discomfort did not stem solely from Rabbit CEO Jesse Lyu exuding the aura of a Kirkland-brand Steve Jobs. It was not even the cringeworthy demonstration in which Rabbit's camera recognized a photo of Rick Astley and Rickrolled the owner, a segment that was physically painful to watch.

The real apprehension arose when Lyu showed Rabbit ordering a pizza. He simply said, “the most-ordered option is fine,” leaving the choice of dinner to the Pizza Hut website. Next, he had Rabbit plan an entire trip to London, pulling sights to see from an internet top-10 list that was likely AI-generated itself.

Rabbit's functions overlap with those of existing voice-activated products like Amazon Alexa, but its distinguishing feature is that it creates a “digital twin” of the user. This twin can access all of the user's apps directly, so the user no longer has to interact with them. Rabbit can also use Midjourney to generate AI images, further reducing human involvement and blurring the line between what is real and what is artificial.

Despite the hype, questions remain about how Rabbit actually interacts with different apps and how it secures user data. Even so, the first 10,000 preorder units sold out instantly at CES, making it the most talked-about product at the event. Early adopters are eager to hand over more control to this advanced chatbot, and that eagerness only deepened my unease.

Shortly after the keynote, I attended a panel on deepfakes and “synthetic information” featuring Bartley Richardson, an AI infrastructure manager at NVIDIA. Bartley praised Microsoft's AI assistant, Copilot, as a digital companion that saves time and effort. Other tech executives echoed this idea of accelerating human progress through AI assistance, hinting at a future where AI companions like Bartley's Copilot could become commonplace.

Amid the enthusiasm for AI, however, concerns were raised about whether consumers actually trust AI-driven decisions. Research indicates that only a quarter of customers trust choices made by AI over those made by humans. That skepticism poses a dilemma for executives like Bartley, who champion AI even as they acknowledge that consumer trust in AI-dependent brands is eroding.

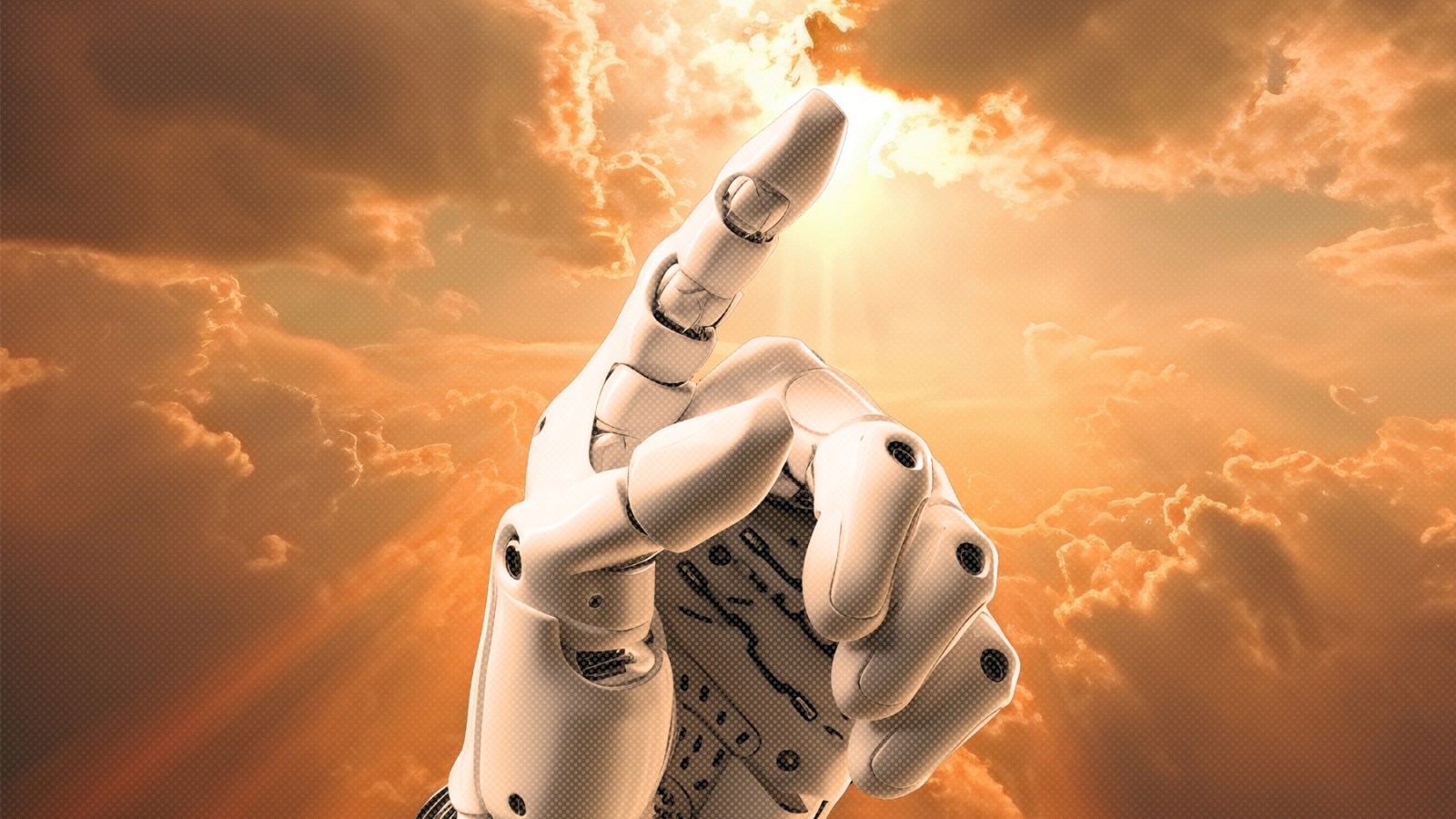

As the tech industry pushes forward with AI with an almost cult-like fervor, it is hard not to draw parallels to the concept of “voluntary self-surrender,” in which individuals cede decision-making power to a higher authority. The allure of AI as a transformative force across many sectors is palpable, yet the prospect of embracing it blindly, without scrutiny or regulation, remains unsettling.

AI holds immense potential in fields such as scientific research and data analysis, but blind faith in AI as a panacea for every societal challenge raises ethical and practical concerns. The fervor surrounding AI development, akin to religious zeal, calls for a critical examination of its effects on human agency and societal values.

In the end, the intersection of AI innovation and our growing reliance on technology forces us to weigh technological advancement against ethical considerations. As the tech industry marches toward an AI-driven future, it must proceed with caution, so that the pursuit of progress does not come at the cost of fundamental human values and autonomy.