It seems nothing counts as a real problem until it happens to Taylor Swift.

Houston, we have a problem with AI-generated content. Many people ignored this troubling social phenomenon until it directly affected America's Sweetheart.

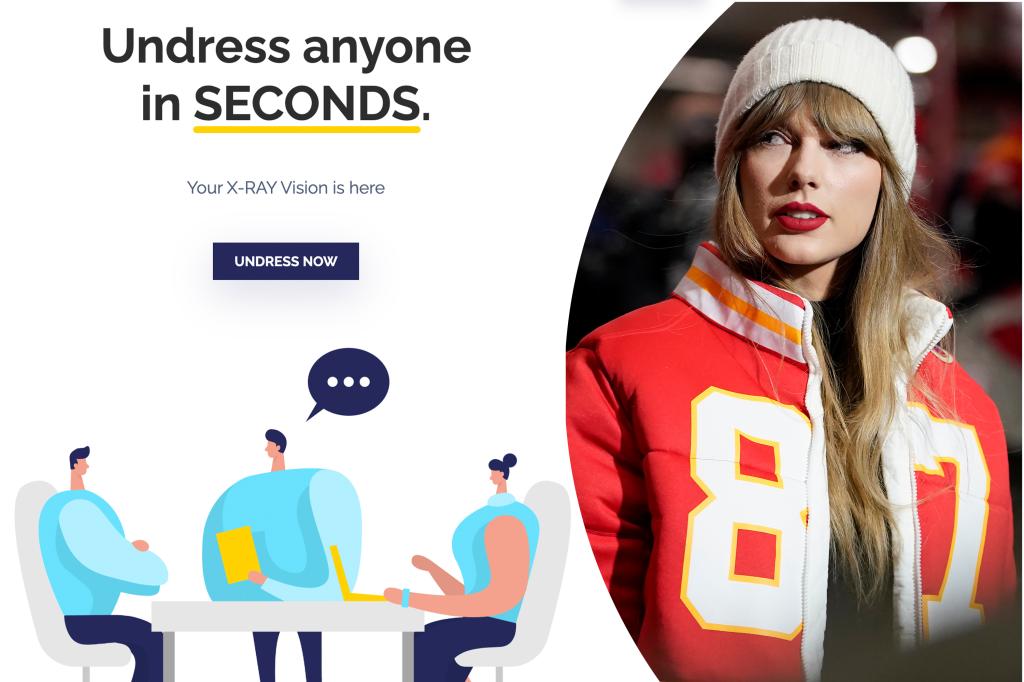

This week, manipulated images known as deepfakes, showing the singer in sexually explicit scenarios, began circulating online, much to the dismay of her devoted fans, the Swifties.

Swifties responded quickly, assembling like Voltron to back the singer against the fake content and demand that it be scrubbed from the internet.

Many Swifties also asked why no law prevents people from creating and distributing fake pornographic material that uses real people's likenesses.

The issue has caught lawmakers' attention: last week, US Representatives Joseph Morelle (D-NY) and Tom Kean (R-NJ) reintroduced the Preventing Deepfakes of Intimate Images Act, which was referred to the House Judiciary Committee.

Under the proposed bill, sharing digitally altered pornographic content without consent could bring federal penalties of imprisonment, fines, or both, and victims could sue the people responsible.

As Morelle pointed out in a tweet calling for federal action against this form of sexual abuse, the spread of AI-generated explicit images of Taylor Swift is alarming, but the same thing happens to women around the world every day.

Despite its seriousness, the issue has received little attention. Public discussion has focused on ChatGPT and its effect on hiring rather than on the harm AI does to people's reputations, their mental health, and our shared sense of what is real.

Celeb Jihad, a notorious website that Swift previously admonished for posting fake nude images of her, has once again surfaced as a possible source of these deepfakes, along with other leaked explicit content of celebrities.

Accountability remains elusive. Celeb Jihad tries to shrug off responsibility with a ludicrous disclaimer on its website describing its content as a humorous blend of rumor, fiction, and fact.

This modern nightmare reaches well beyond celebrities to teenagers, most often girls and many of them minors. In a recent scandal at Westfield High School in New Jersey, AI-generated explicit images of female students circulated among their classmates.

The fallout from such incidents is severe, leaving victims and their families afraid and uncertain about the long-term consequences for their personal, academic, and social lives.

Media coverage may briefly shine a light on these events, but the underlying problem persists, threatening not only celebrities like Swift but also young people who suffer the same violations of privacy and dignity.

Lawmakers are tackling the problem at both the state and federal levels, and the Preventing Deepfakes of Intimate Images Act is one federal attempt to address the growing threat of AI-generated content.

Francesca Mani, a student targeted in the Westfield High School scandal, has spoken about the emotional toll and lasting trauma such violations cause, underscoring the urgent need to protect people, especially children and teenagers, from these malicious acts.

Addressing the damage that AI-generated content does to people's lives, privacy, and mental health is urgent, and it will take a concerted effort from legislators, law enforcement, and society as a whole to counter this pervasive threat.