Global feature effect methods, such as Partial Dependence Plots (PDP) and SHAP Dependence Plots, are commonly used to explain black-box models by showing the average effect of each feature on the model's output. However, these methods fall short when the model contains feature interactions or heterogeneous local effects. Averaging over instances whose individual effects differ hides that variation. This aggregation bias can lead to misleading interpretations. To meet the demand for interpretable AI, particularly in high-stakes sectors such as healthcare and finance, a group of researchers has introduced Effector.
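A small example shows the aggregation-bias problem. The following is a minimal NumPy sketch of a brute-force PDP, not Effector's code. The toy model and the helper `partial_dependence` are hypothetical. They are built so that the effect of `x[0]` is +1 when `x[1] > 0` and -1 otherwise. Averaging the two opposite slopes produces a flat PDP, even though the feature clearly matters.

```python
import numpy as np

def model(X):
    # Hypothetical black box with a pure interaction:
    # the effect of x[0] flips sign depending on x[1].
    return np.where(X[:, 1] > 0, X[:, 0], -X[:, 0])

def partial_dependence(f, X, feature, grid):
    """Average model output when `feature` is fixed to each grid value."""
    pd_values = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value
        pd_values.append(f(X_mod).mean())
    return np.array(pd_values)

rng = np.random.default_rng(0)
X_half = rng.uniform(-1, 1, size=(500, 2))
# Mirror x[1] so exactly half of the points have x[1] > 0 (keeps the demo deterministic).
X = np.vstack([X_half, X_half * np.array([1.0, -1.0])])
grid = np.linspace(-1, 1, 5)

pdp = partial_dependence(model, X, feature=0, grid=grid)
print(pdp)  # flat: the +1 and -1 slopes cancel out in the average
```

Every individual prediction changes with `x[0]`, but the global PDP is zero across the grid. This is the kind of misleading summary that regional methods aim to avoid.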

Effector is a Python library that aims to overcome these limitations by introducing regional feature effect methods. These methods partition the input space into subspaces and provide a separate explanation within each one. This gives a clearer picture of how the model behaves in different regions of the input space. With this approach, Effector aims to reduce aggregation bias, improve interpretability, and increase trust in machine learning models.
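Here is a minimal sketch of the regional idea. It assumes a hypothetical toy model in which the effect of `x[0]` flips sign with `x[1]`, and it uses a single hand-picked split at `x[1] > 0`. Computing the PDP separately inside each subspace recovers the two opposite slopes that a global PDP averages away. The helper names (`partial_dependence`, `regional_pdp`) are illustrative only and are not Effector's API.

```python
import numpy as np

def model(X):
    # Hypothetical black box: the effect of x[0] is +1 if x[1] > 0, else -1.
    return np.where(X[:, 1] > 0, X[:, 0], -X[:, 0])

def partial_dependence(f, X, feature, grid):
    """Average model output when `feature` is fixed to each grid value."""
    out = []
    for value in grid:
        X_mod = X.copy()
        X_mod[:, feature] = value
        out.append(f(X_mod).mean())
    return np.array(out)

def regional_pdp(f, X, feature, split_feature, threshold, grid):
    """PDP computed separately in the two subspaces defined by one split."""
    mask = X[:, split_feature] > threshold
    above = partial_dependence(f, X[mask], feature, grid)
    below = partial_dependence(f, X[~mask], feature, grid)
    return above, below

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(1000, 2))
grid = np.linspace(-1, 1, 5)

above, below = regional_pdp(model, X, feature=0, split_feature=1, threshold=0.0, grid=grid)
print(above)  # slope +1 in the subspace x[1] > 0
print(below)  # slope -1 in the subspace x[1] <= 0
```

In this sketch the split is chosen by hand to keep the example short. Effector instead looks for such subspaces automatically.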

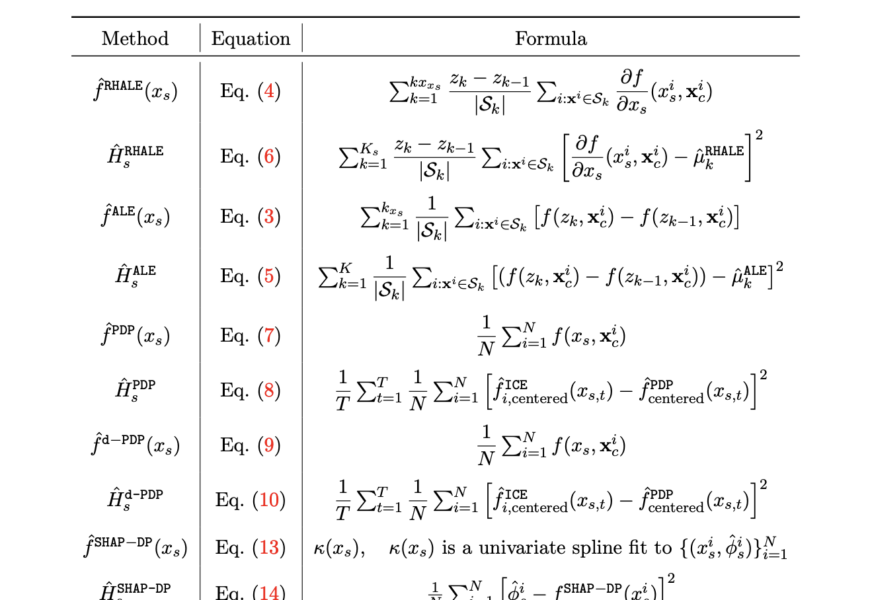

Effector offers a wide range of global and regional effect methods, including PDP, derivative-PDP, Accumulated Local Effects (ALE), Robust and Heterogeneity-aware ALE (RHALE), and SHAP Dependence Plots. All of these methods share a uniform API, which makes it easy for users to compare them and select the most appropriate one for a given use case. Effector's modular architecture allows new methods to be integrated easily, so the library can keep pace with ongoing research in eXplainable AI (XAI).

The authors evaluate Effector on both synthetic and real-world datasets. On the Bike-Sharing dataset, for instance, Effector reveals patterns in bike-rental behavior that global effect methods alone obscure. Effector automatically identifies subspaces of the data in which the regional effects are less heterogeneous. This yields more precise and understandable explanations of the model's behavior.
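The automatic subspace search can be illustrated with a deliberately simplified, hypothetical version. In this sketch, heterogeneity is measured as the average variance of centered ICE (Individual Conditional Expectation) curves. The search tries one split per candidate feature and threshold and keeps the split that most lowers the size-weighted heterogeneity. It is a sketch under stated assumptions for a PDP-style effect, not Effector's actual algorithm. Effector's heterogeneity measures, split criteria, and search depth may differ. The toy model and all helper names are illustrative.

```python
import numpy as np

def model(X):
    # Hypothetical black box: the effect of x[0] depends on x[1];
    # x[2] only adds a separate, non-interacting term.
    return np.where(X[:, 1] > 0, X[:, 0], -X[:, 0]) + X[:, 2]

def ice_curves(f, X, feature, grid):
    """One curve per instance: model output as `feature` sweeps over `grid`."""
    curves = np.empty((X.shape[0], grid.size))
    for j, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value
        curves[:, j] = f(X_mod)
    return curves

def heterogeneity(curves):
    # Center each curve so purely additive offsets (e.g. from x[2]) are ignored,
    # then average the across-instance variance over the grid points.
    centered = curves - curves.mean(axis=1, keepdims=True)
    return centered.var(axis=0).mean()

def best_split(f, X, feature, grid, n_candidates=9):
    """Greedy single split that minimises size-weighted heterogeneity."""
    curves = ice_curves(f, X, feature, grid)
    best = (None, None, heterogeneity(curves))  # (split feature, threshold, score)
    for s in range(X.shape[1]):
        if s == feature:
            continue
        # Candidate thresholds: evenly spaced quantiles of the split feature.
        for t in np.quantile(X[:, s], np.linspace(0.1, 0.9, n_candidates)):
            mask = X[:, s] > t
            if mask.all() or not mask.any():
                continue
            w = mask.mean()
            h = w * heterogeneity(curves[mask]) + (1 - w) * heterogeneity(curves[~mask])
            if h < best[2]:
                best = (s, t, h)
    return best

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(2000, 3))
grid = np.linspace(-1, 1, 21)

split_feature, threshold, h = best_split(model, X, feature=0, grid=grid)
print(split_feature, round(threshold, 3), round(h, 4))
```

The search should pick `x[1]` with a threshold near 0, where the effect of `x[0]` changes sign. Splitting on `x[2]` does not help, because `x[2]` only shifts the curves and the centering step removes that shift.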

Effector's user-friendly interface and accessibility make it a valuable tool for researchers and practitioners in machine learning. Users can start with basic commands that generate global or regional plots and move on to more advanced features as needed. Effector's extensible design also encourages collaboration and innovation, because researchers can implement new methods and compare them against existing ones.

In summary, Effector offers a promising approach to the interpretability challenges of machine learning models. Its regional explanations account for heterogeneity and feature interactions, which makes black-box models easier to understand and more reliable. In turn, this can help speed up the deployment and use of AI systems in practice.