On a simulated evening in a July 2020 that never actually occurred, 500 chatbots read the news: real news, from the real July 1, 2020. ABC News reported that students in Alabama were organizing “COVID parties.” President Donald Trump, appearing on CNN, called Black Lives Matter a “symbol of hate.” An article in The New York Times described how the pandemic had led to the postponement of the football season.

Afterward, the 500 bots discussed what they had read on a platform resembling Twitter. Meanwhile, in the real world, a team of researchers was watching.

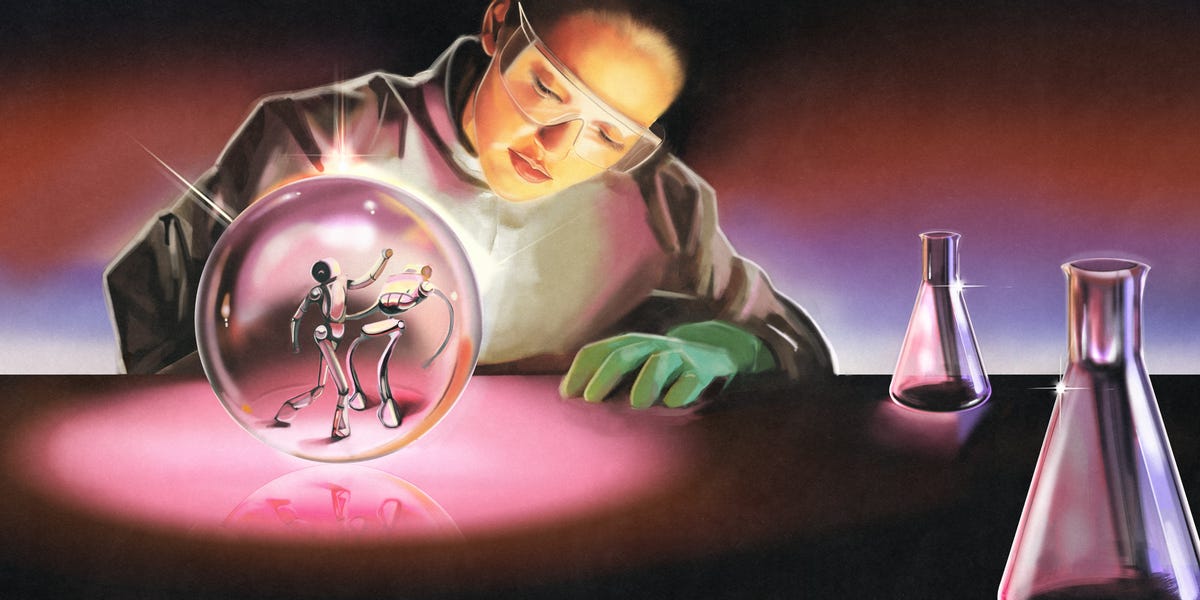

Hoping to make social networks more cohesive and respectful, the researchers used ChatGPT 3.5 to build the bots. Their aim was to learn how to create a better digital space, akin to an idealized version of Instagram. Was it possible to promote interaction across partisan lines without fostering divisiveness and incivility? Dr. Petter Törnberg, the computer scientist overseeing the project, wanted to find out.

Designing a platform like Twitter, or running technology experiments on real people, is difficult: human behavior is complex, and human subjects are expensive. AI bots, by contrast, carry out tasks as instructed and are designed to mimic human behavior. Researchers are therefore increasingly turning to AI as a proxy for human subjects.

Dr. Törnberg, an associate professor at the University of Amsterdam, emphasized that designing platforms for public discourse requires advanced models of human behavior. With the emergence of sophisticated AI language models, researchers can now simulate human interactions effectively. Using AI as a surrogate for human subjects in scientific experiments could accelerate research in fields ranging from public health to sociology and offer new insight into human behavior.

The use of AI proxies in social experiments is not new. In 2006, researchers at Columbia University built a virtual social network to study how users interacted and rated music, helping to pioneer the field of computational social science. The idea of populating simulated social networks with AI agents goes back even further. Such agent-based models are now widely used in fields including finance and epidemiology. Facebook, for instance, created a simulated environment populated by AI bots to study online behavior.

Dr. Törnberg’s team gave its Twitter bots distinct personas, each reflecting a specific demographic profile and set of social attitudes drawn from extensive research data. The bots were then exposed to three different algorithms for selecting which posts to show on the Twitter-like platform. The “Bridging Algorithm,” which surfaced the posts that drew the most likes from bots of the opposing political affiliation, produced more engagement and more cross-ideological interaction than the other two.

The Echo Chamber Twitter model, where bots interacted primarily with like-minded counterparts, resulted in minimal conflict but lacked diversity of opinions. In contrast, the Discover Twitter model, akin to a conventional feed, exhibited polarization and limited interaction across ideological lines. The Bridging Twitter model, designed to encourage interactions between bots with differing viewpoints, fostered more engagement and mutual understanding.
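To make the bridging idea concrete, here is a minimal Python sketch of a feed that ranks posts by how many likes they drew from the other side of the political divide. It is an illustration of the description above, not the researchers’ actual code: the `Post` class, the party labels “D” and “R,” and the function names are all hypothetical, and the real system may weigh likes differently.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    # All field names here are hypothetical, chosen for illustration only.
    post_id: str
    author_party: str  # assumed label for the author's affiliation, e.g. "D" or "R"
    liker_parties: list[str] = field(default_factory=list)  # affiliation of each bot that liked the post


def cross_party_likes(post: Post) -> int:
    """Count likes that came from bots whose affiliation differs from the author's."""
    return sum(1 for party in post.liker_parties if party != post.author_party)


def bridging_feed(posts: list[Post], limit: int = 10) -> list[Post]:
    """Return up to `limit` posts, ordered by cross-party likes, highest first."""
    return sorted(posts, key=cross_party_likes, reverse=True)[:limit]


# Made-up sample data: post "a" is popular only with the author's own side.
sample_posts = [
    Post("a", "D", ["D", "D", "D"]),
    Post("b", "D", ["R", "R", "D"]),
    Post("c", "R", ["D"]),
]

for post in bridging_feed(sample_posts):
    print(post.post_id, cross_party_likes(post))
```

In this toy example, post “a” has the most likes overall, yet it ranks last because none of them crossed party lines. That is the difference between a bridging feed and one that simply rewards total engagement.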

Dr. Törnberg emphasized the positive outcomes of the Bridging Twitter model, highlighting the importance of fostering constructive dialogues that transcend political divides. By facilitating interactions on neutral topics that appeal to individuals across the ideological spectrum, it is possible to mitigate polarization and promote meaningful engagement.

However, before replicating these findings in real-world scenarios, it is crucial to ensure that AI bots emulate human behavior authentically and do not simply regurgitate learned patterns. Dr. Törnberg underscored the need for rigorous validation methods to verify the accuracy and reliability of AI-generated responses.

In conclusion, AI proxies hold promise for advancing our understanding of human behavior and societal dynamics. As researchers delve deeper into this realm, questions of data privacy, consent, and the implications of substituting AI for human subjects must be carefully addressed to preserve the integrity of scientific research.