In a joint offering, VAST Data has teamed up with AI workload orchestrator Run:AI to provide storage and data infrastructure services that complement Run:AI's GPU orchestration and AI workload management stack.

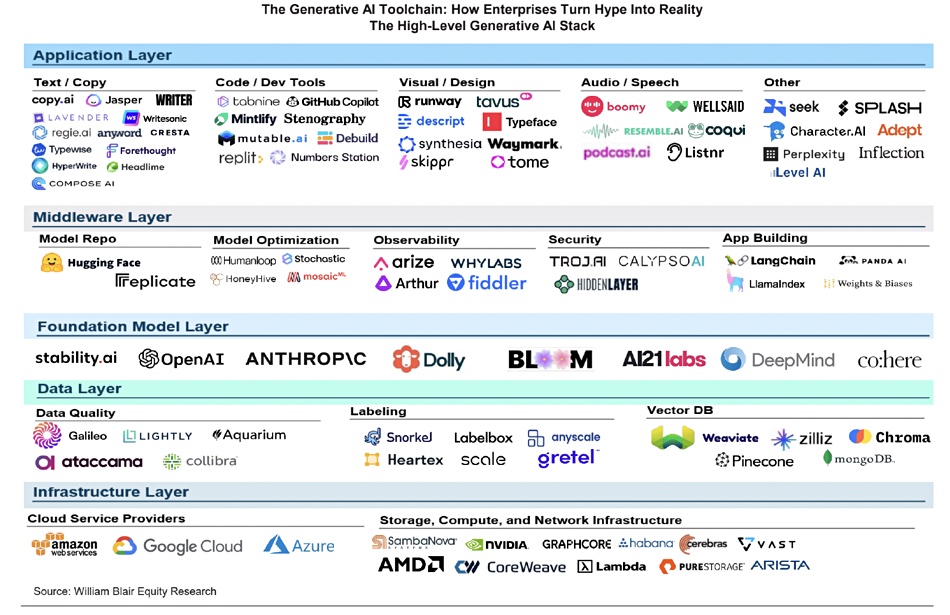

Training AI models and running inference involves more than simply feeding a vast dataset to GPUs. William Blair analyst Jason Ader has illustrated the complexity of this process with a diagram of AI workload components spanning storage, data layers, foundation models, middleware, and application layers.

Each layer contains numerous components, many of which Run:AI manages. Renen Hallak, CEO and co-founder of VAST, emphasized that AI operations need a holistic approach because customers want complete solutions. He said the collaboration with Run:AI brings together all the elements needed for an efficient AI cloud, going beyond traditional point solutions. According to VAST, the announcement will help data-intensive organizations worldwide significantly improve their AI operations and deliver more powerful, efficient, and innovative results at scale.

Run:AI was founded in Tel Aviv, Israel, in 2018 by CEO Omri Geller and CTO Ronen Dar, and has raised $118 million to date. Its platform provides AI optimization and orchestration services that help customers build and train AI models, with flexible, tailored access to AI compute resources. For example, it offers fractional GPUs, which let multiple AI workloads share the same physical GPU through virtualization. This is similar to how server virtualization lets several virtual machines (VMs) share a single physical server.

Geller noted that giving diverse data science teams fair access to compute resources is a significant challenge in the market. The partnership with VAST aims to maximize efficiency within complex AI infrastructures, improve visibility, and strengthen data management across the entire AI pipeline.

The joint VAST and Run:AI offering is set to include:

- Full-stack observability for resource and data management, covering compute, networking, storage, and workload management across AI operations.

- Cloud service provider-ready infrastructure that applies a Zero Trust approach to compute and data isolation, so AI cloud environments can be deployed and managed efficiently on shared infrastructure.

- End-to-end AI pipeline optimization, from multi-protocol ingest through data processing, model training, and inference, using the Nvidia RAPIDS Accelerator for Apache Spark. The VAST Data platform handles file pre-processing.

- Multi-GPU distributed training, AI deployment, and infrastructure management with fair-share scheduling, so users can share GPU clusters without running into memory overflows or processing conflicts. The VAST Data platform provides global data access across multiple cloud environments, backed by encryption, access controls, and other data security measures.

The partnership should encourage Run:AI customers to consider VAST for their data storage needs, and VAST customers to consider Run:AI for GPU orchestration. It also gives cloud service providers (CSPs) an attractive package for delivering AI training and inference services.

At booth 1424 at Nvidia GTC 2024, attendees can pick up blueprints, solution briefs, and previews from VAST and Run:AI.