Millions of people use chatbots every day, powered by NVIDIA GPU-based cloud servers. These tools are now coming to Windows PCs equipped with NVIDIA RTX for fast, local, custom generative AI.

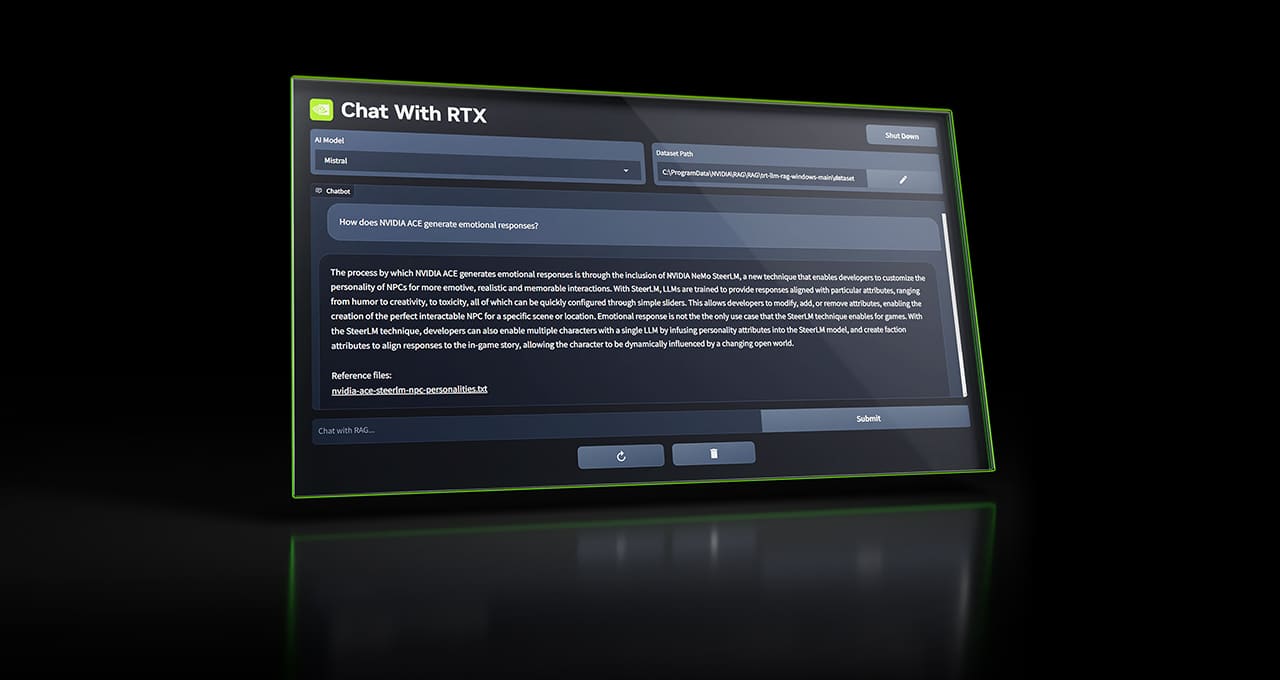

Chat with RTX uses retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration to bring generative AI capabilities to local, GeForce-powered Windows PCs. Users can connect local files on a PC as a dataset to an open-source large language model such as Mistral or Llama 2, then query it for quick, contextually relevant answers.

Rather than searching through notes or saved content, users can simply type a query. For example, a user could ask which restaurant was recommended during a previous trip to Las Vegas. Chat with RTX then scans the local files the user has pointed it to and provides the answer with context.
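The retrieval step behind this kind of query can be illustrated with a minimal sketch. This is not how Chat with RTX is implemented: it assumes a toy bag-of-words similarity in place of a real embedding model, and the note contents, file names, and function names are all hypothetical. It only shows the general RAG pattern of finding the most relevant local text and placing it in the prompt sent to the language model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the names of the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), name)
              for name, text in documents.items()]
    scored.sort(reverse=True)
    return [name for score, name in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=1):
    """Prepend the retrieved text to the question, ready to send to an LLM."""
    names = retrieve(query, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical local notes, standing in for files on the user's PC.
notes = {
    "vegas_trip.txt": "My partner recommended the restaurant Example Bistro "
                      "while we were in Las Vegas.",
    "groceries.txt": "Buy milk, eggs, and coffee beans.",
}

question = "What restaurant did my partner recommend in Las Vegas?"
print(build_prompt(question, notes))
```

A production system would typically replace the word-count vectors with learned embeddings and a vector index, but the flow of retrieve, then augment the prompt, then generate stays the same.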

The tool supports several file formats, including .txt, .pdf, .doc, .docx, and .xml. Pointing Chat with RTX at the folder that contains these files quickly loads them into the tool's library.
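As a rough illustration of what "pointing at a folder" involves, the sketch below collects every file with one of the listed extensions. The function name is hypothetical, and searching subfolders recursively is an assumption; the article does not say how Chat with RTX actually walks the folder.

```python
from pathlib import Path

# Extensions listed in the article as supported by Chat with RTX.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder):
    """Return a sorted list of files under `folder` with a supported extension.

    Subfolders are searched recursively (an assumption for this sketch),
    and extensions are matched case-insensitively.
    """
    root = Path(folder)
    return sorted(
        path for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

Calling `find_supported_files` on a notes folder would return `Path` objects for the matching documents, which could then be read and indexed.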

Users can also include information from YouTube videos. Adding a video URL to Chat with RTX lets users draw on that content for contextual queries. For example, they can ask for travel recommendations based on influencer videos, or get quick tutorials and how-tos from educational resources.

Because Chat with RTX runs locally on Windows RTX PCs and workstations, results are fast and the user's data stays on the device. Rather than relying on cloud-based services, users can process sensitive data on their own PC without sharing it with a third party.

Chat with RTX requires Windows 10 or 11, the latest NVIDIA GPU drivers, and a GeForce RTX 30 Series GPU or higher with at least 8GB of VRAM.
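The requirements above can be expressed as a simple check. This is only a sketch: the `SystemInfo` type and its fields are hypothetical, the values must be supplied by the caller, and driver freshness is not checked because the article gives no specific driver version.

```python
from dataclasses import dataclass


@dataclass
class SystemInfo:
    """Hypothetical description of a PC, filled in by the caller."""
    windows_version: int  # for example 10 or 11
    geforce_series: int   # for example 30 for a GeForce RTX 30 Series GPU
    vram_gb: float        # video memory in gigabytes


def meets_requirements(info):
    """Check the OS, GPU series, and VRAM minimums stated in the article."""
    return (
        info.windows_version in (10, 11)
        and info.geforce_series >= 30
        and info.vram_gb >= 8
    )
```

For instance, a Windows 11 machine with a 40 Series GPU and 12GB of VRAM would pass, while a 30 Series GPU with 6GB of VRAM would not.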

Leveraging RTX for LLM-based Applications

Chat with RTX shows the potential of accelerating large language models (LLMs) with RTX GPUs. The app is built from the TensorRT-LLM RAG developer reference project, available on GitHub. Developers can use this reference project to build and deploy their own RAG-based applications for RTX, accelerated by TensorRT-LLM. Learn more about building LLM-based applications.

Developers can enter a generative AI-powered Windows app or plug-in in NVIDIA's developer contest, which runs through Friday, February 23, for a chance to win prizes such as a GeForce RTX 4090 GPU and a full, in-person conference pass to NVIDIA GTC.

Learn more about Chat with RTX.