Princeton Researchers Rethink AI Chip Design with DARPA Support

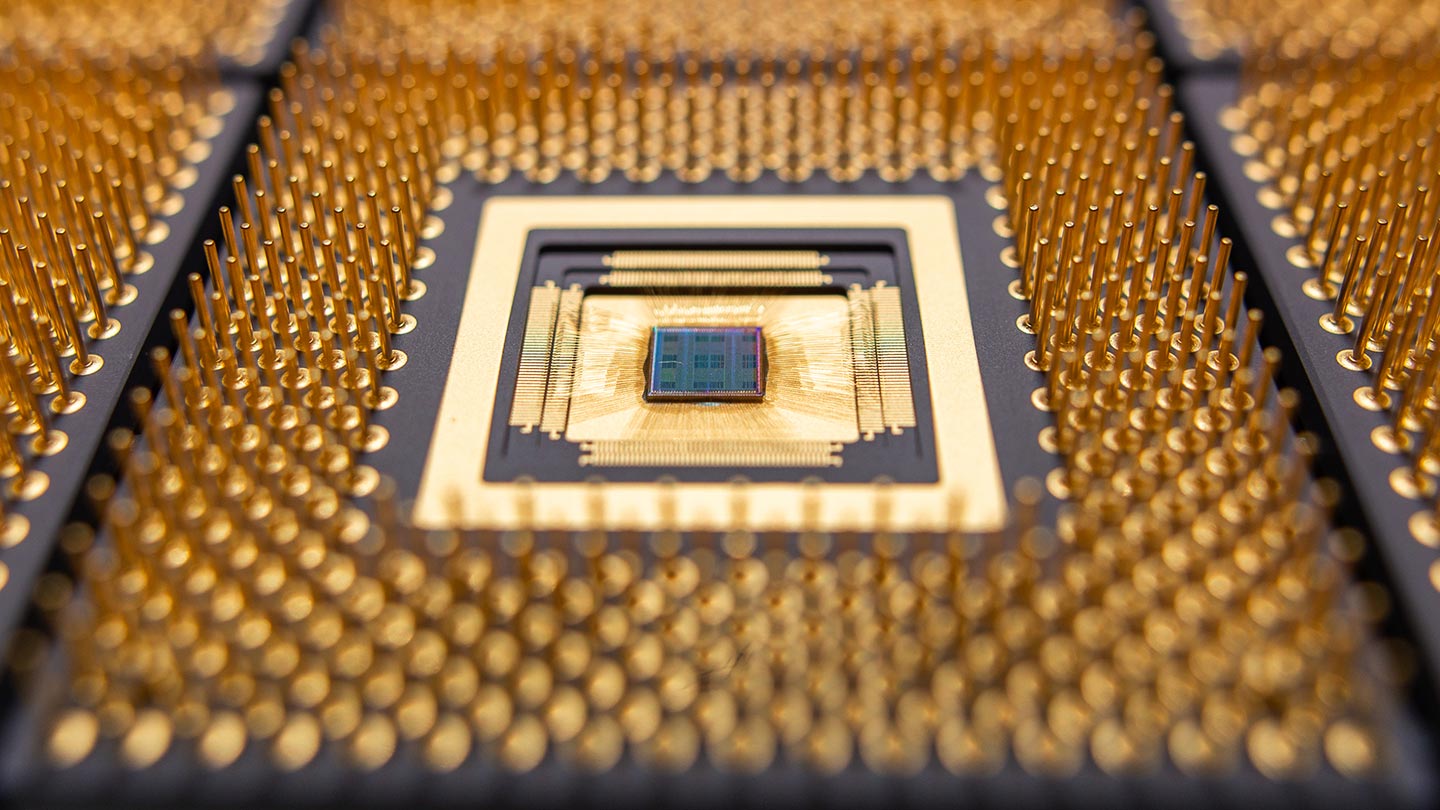

Princeton researchers are developing a new chip designed for modern AI workloads. With substantial backing from the U.S. government, the project will study how fast, compact, and energy-efficient the chip can be made. An early prototype is shown above. Image Credit: Hongyang Jia/Princeton University

Advanced AI Chip Project at Princeton, Supported by DARPA

Founded in 1958 as ARPA, the Defense Advanced Research Projects Agency (DARPA) operates under the United States Department of Defense and develops breakthrough technologies for military applications. It works with academic and industry partners on a broad range of research and development that often reaches well beyond immediate military needs.

DARPA, the Defense Department’s leading research agency, is partnering with a Princeton-led team and the company EnCharge AI to develop microchips designed specifically for artificial intelligence. The goal is to make AI more widely accessible and capable by improving both energy efficiency and computing power.

The new hardware architecture redesigns AI chips around modern workloads, aiming to let powerful AI systems run on far less energy than today’s semiconductor technologies require. The project, led by Professor Naveen Verma, tackles three central challenges in AI chip development: size, efficiency, and scalability.

Transforming the AI Deployment Landscape

Energy-efficient chips could let AI run in many more places, from personal devices such as laptops and smartphones to demanding settings such as hospitals, transportation systems, and even space missions. Today’s bulky, power-hungry chips largely confine AI to servers; the envisioned chips could be deployed across a much wider range of applications.

DARPA is supporting Verma’s work with an $18.6 million grant, which will fund further research into improving the chip’s speed, size, and power efficiency.

Verma highlighted the potential of moving AI out of centralized data centers, envisioning a future in which AI runs in diverse environments, from handheld devices to industrial settings.

Innovating AI Chip Technology

To meet the growing demands of modern AI workloads in power- and space-constrained environments, the research team rethought the physics of computing from the ground up. Their approach is to design and package hardware that can be built with existing fabrication techniques and that works alongside established computing technologies such as central processing units (CPUs).

Verma emphasized that AI chips must do two things efficiently: perform mathematical computations and move and manage data. The team’s strategy rests on three key ideas:

- In-Memory Computing: Instead of shuttling data back and forth between separate memory and processing units, as traditional chips do, the team performs computation directly inside the memory cells where the data is stored. Because moving data often costs more energy than computing on it, this approach can reduce energy consumption and speed up processing.

- Analog Computation: Rather than relying solely on digital logic, the team uses analog computation, exploiting the physics of the devices themselves to carry out arithmetic. An analog signal can take a continuous range of values instead of only 1s and 0s, so each signal can carry more information, making data processing and storage more efficient.

- Capacitor-Based Computation: Analog computation is usually sensitive to noise and device variation. The team addresses this by computing with capacitors, whose behavior depends mainly on their geometry, which fabrication can control precisely, rather than on variable conditions such as temperature or electron mobility. This keeps the computation highly accurate and consistent, and makes the design practical to manufacture at scale.
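To make the three ideas above more concrete, here is a deliberately simplified Python model of charge-domain computation. It is an illustrative sketch under stated assumptions, not the Princeton team’s or EnCharge AI’s actual circuit. It assumes binary (+1/-1) inputs and weights, one capacitor per stored weight bit, and ideal charge sharing; every function name and parameter (such as `cap_f` and `mismatch`) is hypothetical. Each cell compares the input bit with its stored weight bit (computing in memory), charges its capacitor only if they match (an analog quantity), and then all capacitors are connected to a shared line, where conservation of charge produces a voltage proportional to the number of matches (capacitor-based computation).

```python
import random


def xnor(a: int, b: int) -> int:
    """Return 1 if two bits match, else 0 (a +1/-1 product encoded as a bit)."""
    return 1 if a == b else 0


def charge_share(charged, caps, vdd=1.0):
    """Idealized charge sharing onto one shared line.

    Capacitor i is precharged to vdd if charged[i] is 1, else to 0 V; then all
    capacitors are connected. Conservation of charge gives
    V = sum(C_i * V_i) / sum(C_i).
    """
    total_charge = sum(c * vdd * q for c, q in zip(caps, charged))
    return total_charge / sum(caps)


def analog_binary_dot(x_bits, w_bits, cap_f=1e-15, mismatch=0.0, vdd=1.0, seed=0):
    """Estimate the +/-1 dot product of x and w in the charge domain.

    Hypothetical model: one capacitor per stored weight bit, with nominal value
    cap_f and a random relative spread `mismatch` to mimic fabrication variation.
    """
    rng = random.Random(seed)
    n = len(x_bits)
    caps = [cap_f * (1 + rng.gauss(0, mismatch)) for _ in range(n)]
    # "In memory": each cell compares the input bit with its own stored weight bit.
    matches = [xnor(x, w) for x, w in zip(x_bits, w_bits)]
    # "Analog" + "capacitor-based": with equal capacitors, V = vdd * matches / n.
    v = charge_share(matches, caps, vdd)
    # dot = matches - mismatches = 2 * matches - n, recovered from the voltage.
    return n * (2 * v / vdd - 1)


def digital_binary_dot(x_bits, w_bits):
    """Digital reference: map bits {0, 1} to {-1, +1} and sum the products."""
    return sum((2 * x - 1) * (2 * w - 1) for x, w in zip(x_bits, w_bits))


if __name__ == "__main__":
    x = [1, 0, 1, 1, 0, 1, 0, 0]
    w = [1, 1, 1, 0, 0, 1, 0, 1]
    print(digital_binary_dot(x, w))                # 2
    print(round(analog_binary_dot(x, w), 6))       # 2.0
    print(analog_binary_dot(x, w, mismatch=0.01))  # close to 2, not exact
```

In this model the output voltage depends only on the ratios of the capacitances, so a uniform shift in every capacitor’s value cancels out; only relative mismatch between capacitors introduces error, which the hypothetical `mismatch` parameter lets you explore.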

Through these advances, the Princeton-led project, working with EnCharge AI, aims to produce energy-efficient, high-performance AI chips suited to a wide range of applications, potentially changing how and where AI technology is deployed.

Image Credit: Sameer A. Khan/Fotobuddy