Researchers have built a computer “worm” that uses generative AI, raising concerns that the technology could be exploited for malicious purposes.

As reported by Wired, the worm can target AI-powered email assistants to extract confidential information from emails and send spam messages that can infect other systems.

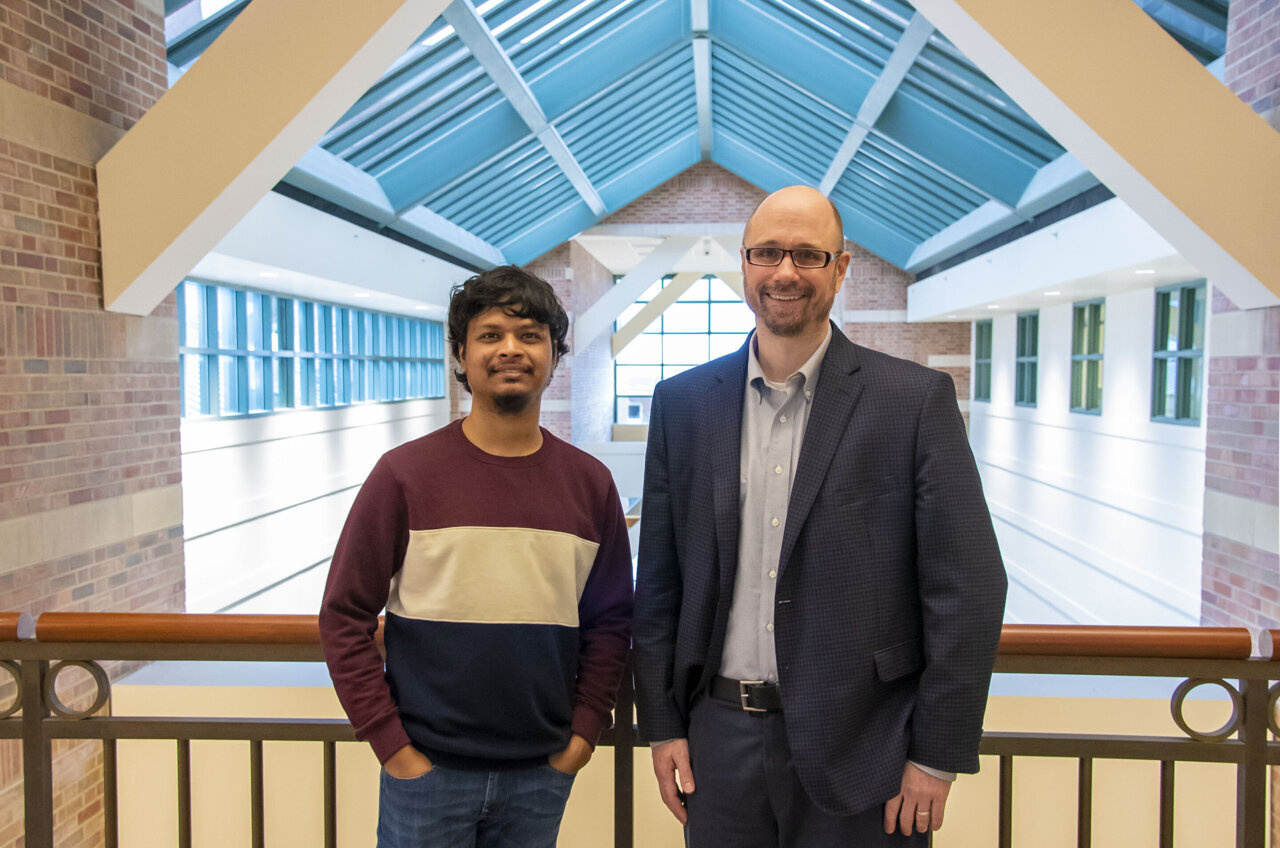

Ben Nassi, a Cornell Tech researcher and coauthor of an upcoming paper on this topic, highlighted the unprecedented nature of this cyber threat, stating, “It essentially means that a new form of cyberattack is now possible.”

While real-world instances of AI-powered worms have not yet been observed, experts warn that their emergence is inevitable.

In their controlled experiment, the researchers focused on email assistants driven by OpenAI’s GPT-4, Google’s Gemini Pro, and the open-source LLaVA model. Using an “adversarial self-replicating prompt,” an input that causes the model to reproduce the prompt in its own output, they exploited these assistants to extract sensitive data such as names, phone numbers, credit card information, and social security numbers.
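One way to picture the self-replication property from the defender's side is a check that compares an assistant's draft reply with the email that prompted it. The sketch below is illustrative only and is not the researchers' method: the function names and the 80-character threshold are assumptions, and it relies on nothing beyond Python's standard `difflib` module.

```python
from difflib import SequenceMatcher


def longest_shared_run(incoming: str, outgoing: str) -> int:
    """Length of the longest contiguous substring shared by both texts."""
    # autojunk=False disables difflib's popularity heuristic for long inputs.
    matcher = SequenceMatcher(None, incoming, outgoing, autojunk=False)
    match = matcher.find_longest_match(0, len(incoming), 0, len(outgoing))
    return match.size


def looks_self_replicating(incoming: str, outgoing: str, min_run: int = 80) -> bool:
    """Flag a draft reply that copies a long verbatim run from its input.

    min_run is an illustrative threshold (an assumption), not a tuned value.
    """
    return longest_shared_run(incoming, outgoing) >= min_run
```

A reply that repeats a long block of the incoming message word for word would be flagged, while an ordinary reply that shares only a short phrase would not. A check like this can be evaded by paraphrasing, so at best it is one signal among several.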

Because these assistants have broad access to personal data, a compromised assistant can expose user secrets and bypass security protocols.

By getting email systems to send poisoned emails, the researchers contaminated the databases that recipient AI assistants draw on, which enabled the theft of confidential information and helped the worm spread to new systems.
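The propagation described above can be pictured with a toy model. The following sketch makes several assumptions: it calls no real model or mail system, the `MARKER` string is a harmless stand-in for a self-replicating prompt, and `stub_assistant`, `simulate`, and the contact graph are hypothetical names introduced only for illustration.

```python
from collections import deque

MARKER = "[[REPLICATE]]"  # harmless stand-in for a self-replicating prompt


def stub_assistant(retrieved: list[str]) -> str:
    """Hypothetical assistant: drafts a reply and echoes the marker
    if any message retrieved from its store contains it."""
    reply = "Thanks for your message."
    if any(MARKER in doc for doc in retrieved):
        reply += " " + MARKER
    return reply


def simulate(contacts: dict[str, list[str]], first_target: str) -> list[str]:
    """Return the mailboxes that end up storing the marker, in order."""
    stores: dict[str, list[str]] = {name: [] for name in contacts}
    queue = deque([(first_target, "Hello " + MARKER)])
    contaminated: list[str] = []
    while queue:
        recipient, message = queue.popleft()
        if recipient in contaminated:
            continue  # already carrying the marker; nothing new to model
        stores[recipient].append(message)
        if MARKER not in message:
            continue  # a clean message is stored but does not spread
        contaminated.append(recipient)
        # The assistant replies using its (now contaminated) store.
        reply = stub_assistant(stores[recipient])
        queue.extend((contact, reply) for contact in contacts[recipient])
    return contaminated
```

With a contact graph such as `{"alice": ["bob", "carol"], "bob": ["dave"], "carol": [], "dave": ["alice"], "erin": ["alice"]}` and `alice` as the first target, the function returns `['alice', 'bob', 'carol', 'dave']`: every mailbox reachable from the first recipient is contaminated, while `erin`, who never receives a reply, is not.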

The researchers also showed that a malicious prompt can be embedded in an image, triggering the AI assistant to infect additional email clients and spread harmful content further.

After the researchers shared their findings with OpenAI and Google, work began to make the companies’ systems more resilient. Nonetheless, the researchers warn that AI worms could spread in the wild in the coming years, with significant adverse consequences.

The disclosure underscores how urgently companies need to address the cybersecurity risks that come with the widespread integration of generative AI assistants if they are to avert a potential crisis.