The U.S. National Institute of Standards and Technology (NIST) is drawing attention to the privacy and security risks that come with the growing deployment of artificial intelligence (AI) systems.

NIST has identified several security and privacy threats associated with this trend. They include malicious manipulation or alteration of a system, and unauthorized access to sensitive information about the people represented in the data, about the system itself, or about proprietary business data. They also include the exploitation of model weaknesses to significantly degrade the performance of AI systems.

As AI systems become increasingly integrated into online services, driven in part by the rise of generative AI platforms such as OpenAI ChatGPT and Google Bard, the technology faces numerous challenges at different stages of machine learning operations.

These challenges include biased training data, security vulnerabilities in software components, data and model manipulation, weaknesses in the supply chain, and privacy breaches resulting from prompt injection attacks.

Apostol Vassilev, a computer scientist at NIST, notes that software developers typically want more people to use their products so that the products can improve with exposure. There is no guarantee, however, that the exposure will be good: a chatbot can produce harmful or erroneous information when prompted with carefully crafted language.

Attacks on AI systems, which pose significant threats to integrity, confidentiality, and privacy, can be broadly classified as follows:

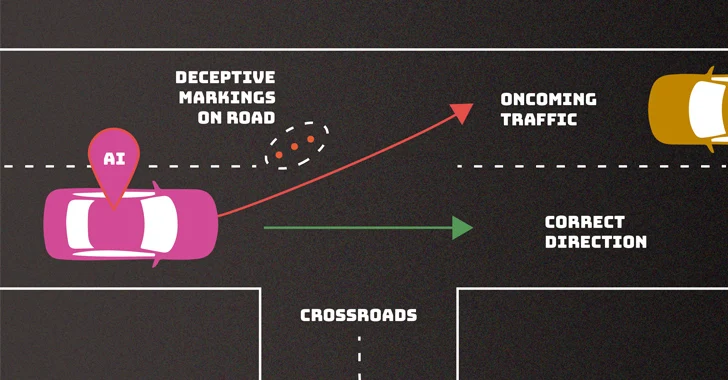

- Evasion attacks aim to produce adversarial output after a model has been deployed.

- Poisoning attacks involve injecting corrupted data during the algorithm’s training phase to manipulate its behavior.

- Privacy attacks seek to extract sensitive information about the model’s structure or training data by circumventing existing security measures.

- Abuse attacks (sometimes described as misuse) compromise legitimate sources of information, such as a web page seeded with false information, in order to repurpose the system for unintended uses.
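The difference between the first two categories can be illustrated with a minimal sketch. It is not taken from NIST's material: the nearest-centroid classifier, the single "suspiciousness score" feature, and all data values are hypothetical and chosen only to keep the example small. In the evasion case the trained model is left untouched and only the input changes; in the poisoning case the input stays the same and the training data is corrupted.

```python
from statistics import mean


def train_centroids(samples):
    """Fit a toy nearest-centroid classifier: one mean value per label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Return the label whose centroid is closest to the input value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Hypothetical training set: (suspiciousness score, label).
clean_data = [
    (1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
    (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious"),
]
model = train_centroids(clean_data)

# Baseline: an input the clean model flags as malicious.
original_input = 6.0
baseline_label = predict(model, original_input)

# Evasion: the deployed model is unchanged; the attacker nudges the
# input at inference time until it lands on the benign side.
evasive_input = original_input - 1.5
evasion_label = predict(model, evasive_input)

# Poisoning: the attacker slips mislabeled points into the training
# data, which drags the "benign" centroid toward malicious inputs.
poison = [(10.0, "benign")] * 3
poisoned_model = train_centroids(clean_data + poison)
poisoning_label = predict(poisoned_model, original_input)

print("clean model, original input:   ", baseline_label)
print("clean model, perturbed input:  ", evasion_label)
print("poisoned model, original input:", poisoning_label)
```

Real attacks target far larger models and feature spaces, but the split is the same: evasion acts at inference time, poisoning at training time.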

NIST notes that threat actors may carry out these attacks with varying levels of knowledge about the AI system, ranging from full knowledge (white-box) to minimal knowledge (black-box), with partial knowledge of some aspects (gray-box) in between.
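As a rough illustration of the two extremes, the sketch below contrasts an attacker who can read a model parameter directly with one who can only submit inputs and observe the returned labels. The threshold classifier, the function names, and the secret value are all hypothetical; the point is only that a black-box attacker can often recover useful information through repeated queries.

```python
def make_deployed_model(threshold):
    """Return a query-only classifier; the threshold stays hidden in the closure."""
    def query(score):
        return "malicious" if score >= threshold else "benign"
    return query


def white_box_boundary(threshold):
    """White-box: the attacker reads the model parameter directly."""
    return threshold


def black_box_boundary(query, low, high, tolerance=1e-3):
    """Black-box: bisect on query responses alone to locate the boundary.

    Assumes query(low) is "benign" and query(high) is "malicious".
    Returns the estimated boundary and the number of queries used.
    """
    queries = 0
    while high - low > tolerance:
        mid = (low + high) / 2
        queries += 1
        if query(mid) == "malicious":
            high = mid
        else:
            low = mid
    return high, queries


SECRET_THRESHOLD = 5.0  # hypothetical value, known only to the model owner
deployed = make_deployed_model(SECRET_THRESHOLD)

exact = white_box_boundary(SECRET_THRESHOLD)
estimate, queries_used = black_box_boundary(deployed, 0.0, 10.0)

print("white-box boundary:", exact)
print("black-box estimate:", estimate, "after", queries_used, "queries")
```

A gray-box attacker would sit between the two, for example knowing that the model is a threshold classifier but not the threshold itself.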

In response to the inadequacy of current risk mitigation strategies, NIST urges the broader technical community to devise more robust defenses against these threats.

NIST's warning follows the recent release of guidelines for secure AI system development by the United Kingdom, the United States, and 16 other international partners. Despite the advances in AI and machine learning, Vassilev warns, these technologies remain susceptible to attacks that could lead to catastrophic failures with severe consequences. He stresses that fundamental problems in securing AI algorithms have not yet been solved and cautions against overestimating the level of protection currently available.