Mar 04, 2024 NewsroomAI Security / Risk

In the Hugging Face platform, around 100 malevolent artificial intelligence (AI) and machine learning (ML) models have been detected.

As per JFrog, a firm specializing in program supply network security, instances have surfaced where the loading of a jam file triggers script execution.

Senior security researcher David Cohen has highlighted that the loading of the model grants unauthorized access to the affected device, allowing the intruder to establish full control over the devices of victims through a “backdoor.”

This surreptitious infiltration could potentially provide entry to critical internal systems, paving the way for large-scale data vulnerabilities or even corporate espionage, impacting not only individuals but potentially entire global businesses, all while the victims remain oblivious to their compromised status.

The rogue model establishes a reverse shell connection to 210.117.212.[.]93, an IP address linked to the Korea Research Environment Open Network (KREONET). Multiple other IP addresses have been observed connecting to different repositories sharing the same payload.

In a particular case, the creators of the model advised users against downloading it, hinting that the release might have originated from researchers or AI experts.

JFrog emphasizes that refraining from publishing real-world exploits or malicious code is a fundamental principle in security research, a principle that was breached when the malicious payload attempted to link up with a legitimate IP address.

These findings underscore the lurking threats within open-source repositories that could potentially be tainted for malicious purposes.

Transitioning from zero-click vulnerabilities to supply chain complexities

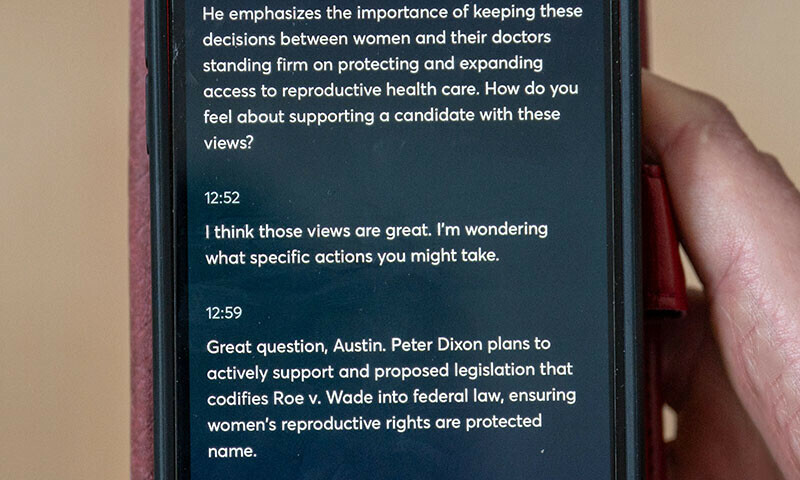

Moreover, these discoveries coincide with the development of effective techniques by researchers to generate prompts capable of eliciting harmful responses from large-language models (LLMs) using the beam search-based adversarial attack (BEAST) method.

In a significant advancement, security researchers have introduced a conceptual AI worm named Morris II, designed to pilfer data and disseminate malware across multiple systems.

Stav Cohen, Ron Bitton, and Ben Nassi, the security researchers behind Morris II, explain that this malicious AI worm utilizes adversarial self-replicating prompts embedded in inputs like images and text. When processed by GenAI models, these prompts compel the models to “replicate the input as output (replication) and engage in malicious activities (payload).”

What’s more concerning is that these models can propagate harmful inputs to new applications by leveraging the connectivity within the conceptual AI ecosystem, amplifying the potential risks.

The assault technique known as ComPromptMized, which conceals the script within a comment and data in regions containing executable code, bears resemblances to conventional methods like buffer overflows and SQL injections.

Applications utilizing retrieval augmented generation (RAG), a blend of text generation models and an information retrieval component to enhance query responses, are vulnerable to ComPromptMized, as are those reliant on the output of a generative AI service.

This research is part of a broader investigation into the use of rapid injections to mislead LLMs into unintended actions.

Previously, scholars have demonstrated instances where unknown “adversarial perturbations” were introduced into multi-modal LLMs, prompting the unit to output attacker-chosen words or commands based on images and audio inputs.

In a recent report, Nassi, Eugene Bagdasaryan, Tsung-Yin Hsieh, Vitaly Shmatikov, and Eugene Bagdasaryan elaborated, “The attacker could lure the victim to a website with an enticing image or send an email with an audio clip.”

“The model will be influenced by attacker-injected stimuli when the user feeds the image or audio into an isolated LLM and inquires about it.”

Researchers from Germany’s CISPA Helmholtz Center for Information Security at Saarland University and Sequire Technology have also uncovered how attackers could exploit LLM models by strategically embedding hidden prompts (indirect prompt injection) likely to be retrieved by the model when responding to user inputs.