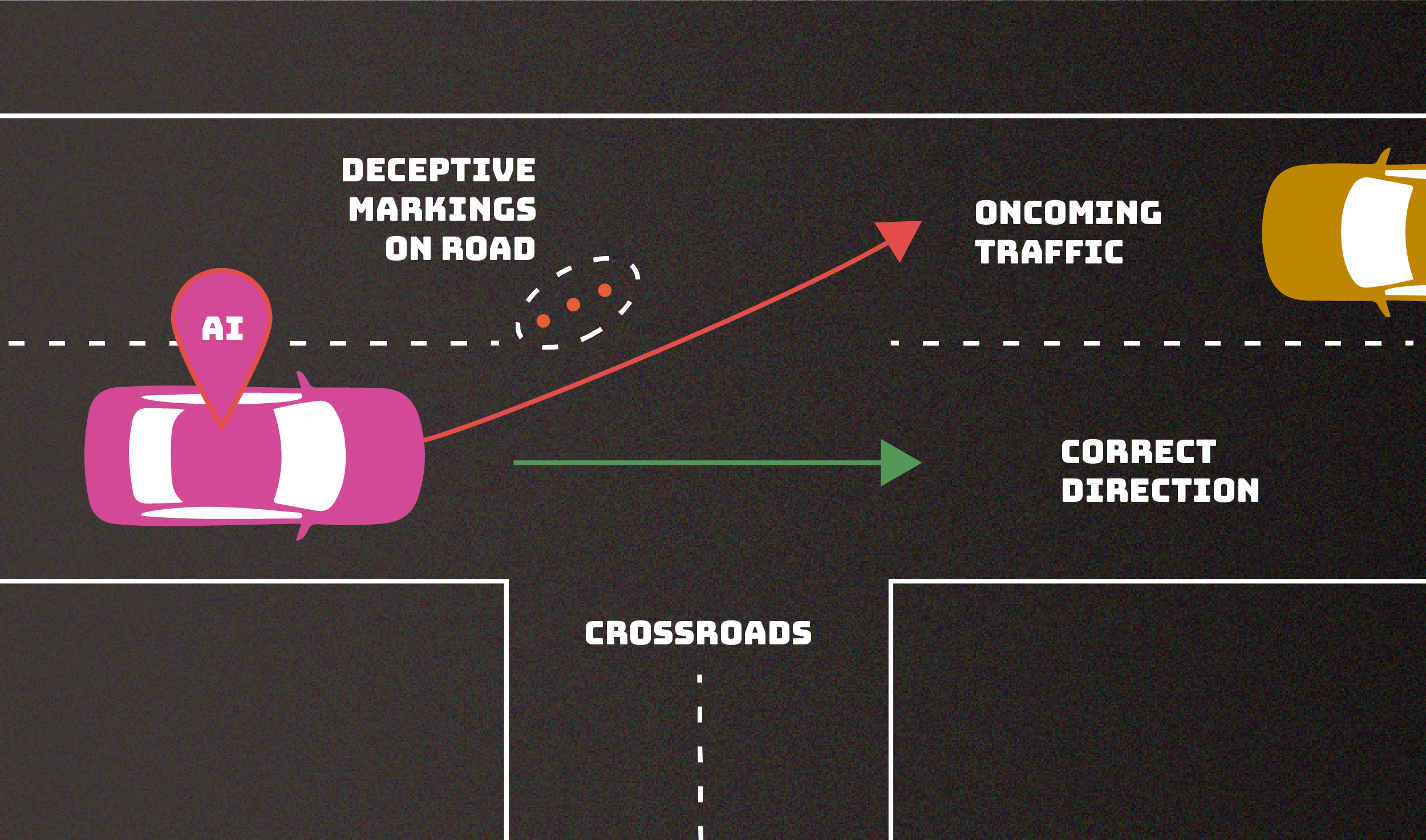

An AI system can malfunction if an adversary finds a way to confuse its decision-making. For example, misleading road markings could cause an autonomous vehicle to veer off course and into oncoming traffic. This “evasion” attack is one of many adversarial tactics described in a recent NIST publication.

Adversaries can deliberately confuse or even “poison” artificial intelligence (AI) systems to make them malfunction, and their developers have no foolproof defense against it. In a recent report, computer scientists from the National Institute of Standards and Technology (NIST) and their collaborators identify these and other vulnerabilities of AI and machine learning (ML) systems.

Titled “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations” (NIST AI 100-2), the publication is part of NIST’s broader effort to support the development of trustworthy AI. It is intended to help put NIST’s AI Risk Management Framework into practice by describing the attacks that developers and users can expect and approaches to mitigating them, while acknowledging that no defense is foolproof.

According to NIST computer scientist Apostol Vassilev, one of the publication’s authors, the report gives an overview of attack techniques and methodologies that covers all types of AI systems. It also describes the mitigation strategies currently reported in the literature, but notes that these defenses lack robust assurances that they fully mitigate the risks, and it encourages the community to develop stronger ones.

AI systems are now ubiquitous in society: they drive vehicles, help doctors diagnose illnesses, and interact with customers as online chatbots. To learn these tasks, they are trained on vast quantities of data. For instance, a chatbot powered by a large language model (LLM) may be trained on records of online conversations, while an autonomous vehicle learns from images of roads and signs so that it can make informed decisions in different scenarios.

However, the trustworthiness of this data is a significant problem, because much of it is sourced from online forums and public interactions. Malicious actors can manipulate the data both while an AI system is being developed and after it is deployed, leading to undesirable outcomes. For example, chatbots may learn to produce offensive responses if exposed to carefully crafted malicious inputs.

Application developers often need more people to use their product so that it can improve with exposure, but there is no guarantee that the exposure will be benign. A chatbot prompted with carefully designed inputs, for example, can end up disseminating harmful or misleading information.

Protecting AI systems from manipulation remains a daunting task, partly because the datasets used to train them are far too large for humans to monitor and filter effectively. To support the engineering community, the report describes four major categories of attack (evasion, poisoning, privacy, and abuse attacks) and recommends mitigation strategies for each.

Evasion attacks occur after deployment and alter an input to change how the system responds to it, for example by adding misleading street markings to misguide an autonomous vehicle. Poisoning attacks occur during training, when an adversary injects corrupted data. For example, slipping many instances of inappropriate language into the training examples can lead a chatbot to treat that language as normal and use it in its own responses.

Privacy attacks occur during deployment and attempt to extract sensitive information about the AI or the data it was trained on, which the attacker can then use to exploit weaknesses in the model. Abuse attacks insert incorrect information into a source the AI draws on, such as a webpage or online document, so that the system absorbs it and is repurposed for the attacker’s ends. Despite the available mitigations, the report acknowledges that safeguarding AI systems effectively remains an open challenge.
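
As a rough illustration of the evasion and poisoning attacks described above, the following Python sketch uses two deliberately tiny models. Everything in it is an assumption made for illustration: the linear classifier, the nearest-centroid "training" routine, the function names (`predict`, `evade`, `train_centroids`, `classify`), and all of the numbers are hypothetical and do not come from the NIST report. The evasion step uses the standard sign-of-gradient idea, which for a linear model reduces to moving each feature against the sign of its weight.

```python
# Toy illustration only: hypothetical models and made-up numbers, not a real AI system.

def predict(weights, bias, x):
    """Linear classifier: return 1 if the weighted score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def evade(weights, x, epsilon):
    """Evasion: nudge every input feature by epsilon in the direction that
    lowers the score (for a linear model, opposite the sign of its weight)."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


def train_centroids(samples):
    """Nearest-centroid 'training': average the feature vectors of each label."""
    sums, counts = {}, {}
    for x, label in samples:
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, xi in enumerate(x):
            acc[i] += xi
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(centroids, x):
    """Return the label whose centroid is closest to x."""
    def dist2(c):
        return sum((a - b) ** 2 for a, b in zip(c, x))
    return min(centroids, key=lambda label: dist2(centroids[label]))


# Evasion: the deployed model is untouched; only the input changes.
weights, bias = [1.0, -2.0, 0.5], 0.1
x_clean = [0.6, 0.2, 0.4]
x_adv = evade(weights, x_clean, epsilon=0.2)
print("evasion:", predict(weights, bias, x_clean), "->", predict(weights, bias, x_adv))

# Poisoning: the attacker slips mislabeled samples into the training data.
clean = [([0.0], "ok"), ([1.0], "ok"), ([4.0], "bad"), ([5.0], "bad")]
poison = [([1.0], "bad"), ([1.5], "bad"), ([2.0], "bad")]
query = [2.0]
before = classify(train_centroids(clean), query)
after = classify(train_centroids(clean + poison), query)
print("poisoning:", before, "->", after)
```

In the evasion half, the model's weights never change and each feature moves by at most `epsilon`, yet the predicted class flips. In the poisoning half, the query is identical before and after; only the training set differs. Real attacks target far larger models and datasets, which is part of why they are hard to detect.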

In conclusion, despite substantial progress in AI and machine learning, these technologies remain vulnerable to adversarial attacks. Vassilev emphasizes that securing AI algorithms involves theoretical problems that have not yet been solved, and he cautions against overestimating current defenses. Continued vigilance and innovation will be needed as AI security threats evolve.