Nation-Backed Groups Are Using Large Language Models for Cyber Operations

Authored by Tom Warren, a senior editor with expertise in Microsoft, PC gaming, consoles, and technology. He established WinRumors, a platform dedicated to Microsoft news, and later joined The Verge in 2012.

Microsoft and OpenAI have found that several nation-backed groups are using large language models (LLMs) such as ChatGPT for malicious purposes, including target research, scripting, and phishing.

In a recent joint study, Microsoft and OpenAI identified Russian-, North Korean-, Iranian-, and Chinese-backed groups using tools like ChatGPT to support their cyber operations. These groups are using LLMs to research targets, improve scripts, and build social engineering techniques.

According to Microsoft’s recent blog post, cybercrime syndicates and state-sponsored threat actors are exploring emerging AI technologies to see how they might strengthen their operations and help them evade security controls.

One notable example is the Strontium group, linked to Russian military intelligence, which has been using LLMs to understand satellite communication protocols, radar imaging technologies, and specific technical parameters. The group, also known as APT28 or Fancy Bear, has been active during Russia's war in Ukraine and previously targeted Hillary Clinton’s 2016 presidential campaign.

A North Korean hacking group known as Thallium has been using LLMs to research vulnerabilities, help with scripting tasks, and draft content for phishing campaigns. The Iranian group Curium has been using LLMs to generate phishing emails and write code designed to evade antivirus detection. Chinese state-linked hackers are also using LLMs for research, scripting, translation, and refining their existing tools.

Microsoft and OpenAI have not yet detected any major attacks involving LLMs, but the companies are monitoring these groups and shutting down accounts associated with them. They say they are publishing the findings to make the cybersecurity community aware of the tactics these threat actors are adopting.

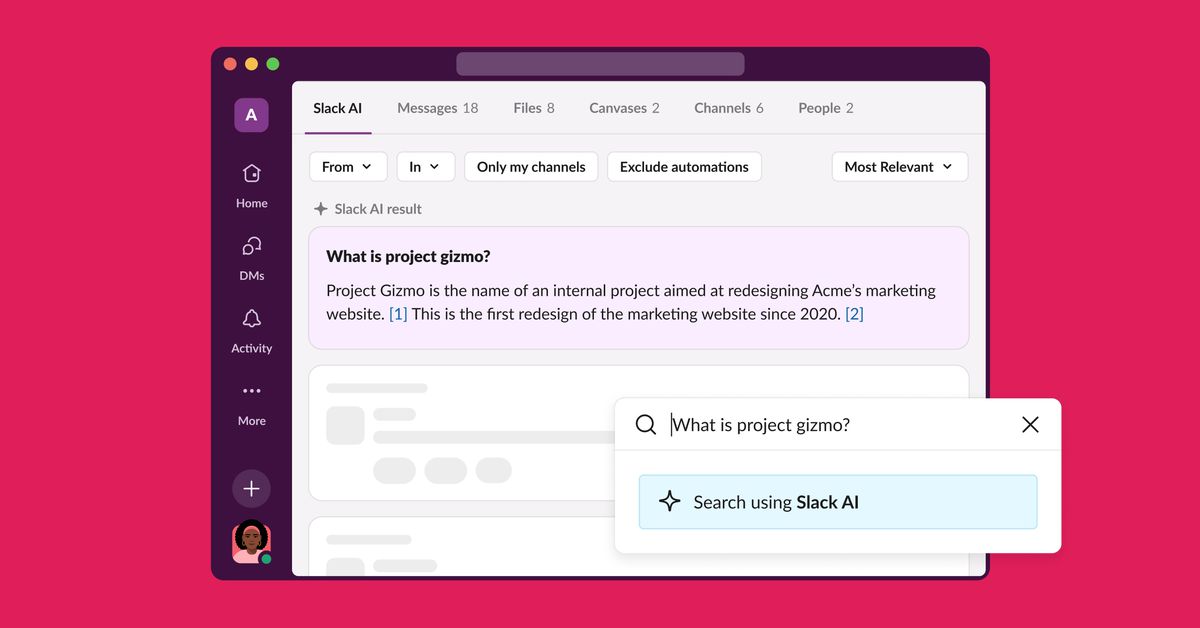

Looking ahead, Microsoft warns of future risks such as AI-powered voice impersonation. The company argues that AI is also needed on the defensive side to counter increasingly sophisticated attacks. Microsoft is building Security Copilot, an AI assistant designed to help cybersecurity professionals identify breaches and analyze large volumes of security data.

Following recent security breaches, including Azure cloud attacks and espionage by Russian hackers, Microsoft is also overhauling its software security.