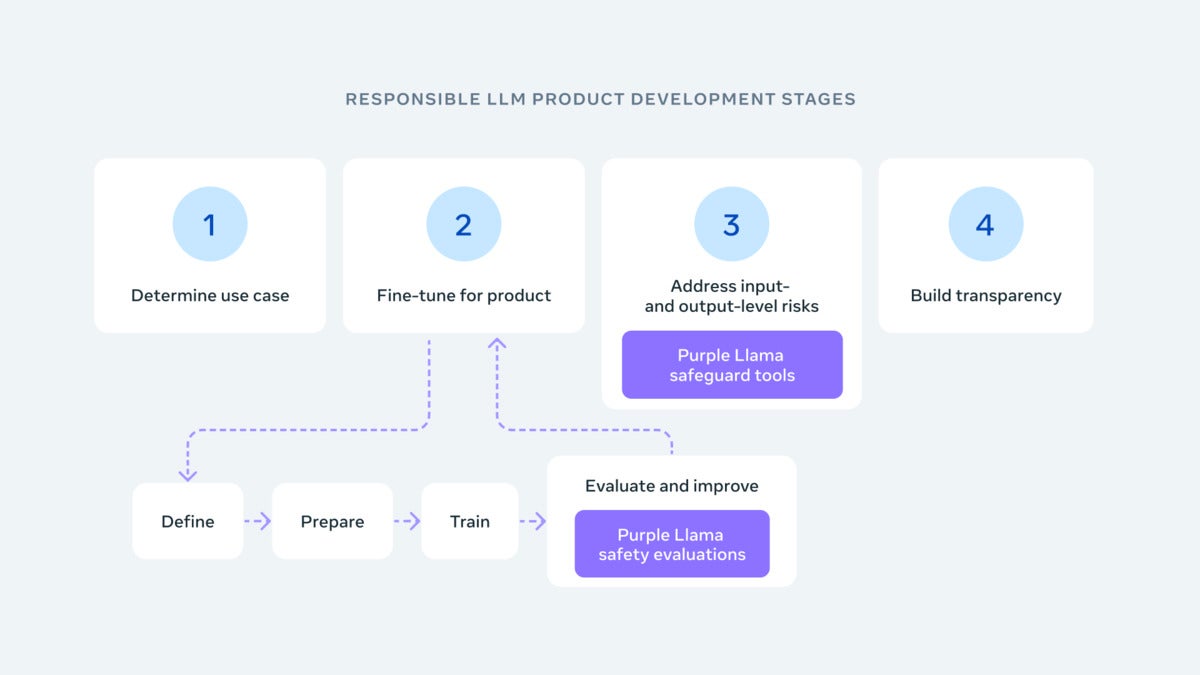

Meta has introduced Purple Llama, an open-source project that gives developers tools to evaluate and improve the trustworthiness and safety of generative AI models before they are released publicly.

The company emphasized the importance of collaboration in ensuring AI safety, stating that AI challenges cannot be addressed in isolation. With concerns rising about large language models and other AI technologies, Meta aims for Purple Llama to establish a shared foundation for developing safer generative AI.

Gareth Lindahl-Wise, Chief Information Security Officer at Ontinue, described Purple Llama as a proactive step toward safer AI. He highlighted the potential benefits of improved consumer-level protection and the need for ethical considerations in AI development. While Purple Llama helps bring some order to the AI landscape, organizations with stringent obligations will still need to conduct thorough evaluations that go beyond Meta's offerings.

The Purple Llama project involves partnerships with AI developers, cloud providers such as AWS and Google Cloud, chip manufacturers such as Intel, AMD, and Nvidia, and technology firms such as Microsoft. Together, they aim to create tools for testing AI model capabilities and identifying potential safety risks, for both research and commercial use.

One of the tools within Purple Llama is CyberSecEval, which assesses cybersecurity risks in AI-generated software. The tool includes a language model capable of identifying inappropriate or harmful text, such as discussions of violence or illegal activities. Developers can use CyberSecEval to determine whether their AI models are prone to generating insecure code or assisting with cyberattacks. Meta's research found that large language models often suggest vulnerable code, which underscores the importance of continuous testing and improvement for AI security.
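As a rough illustration of what testing a model for insecure code suggestions can look like, the sketch below scans model-generated code samples for a few risky patterns and reports the share that was flagged. It is not CyberSecEval's implementation: the rule set, the function names `find_insecure_patterns` and `insecure_rate`, and the sample strings are all assumptions made for this example.

```python
import re
from typing import List

# Hypothetical, deliberately tiny rule set for illustration only.
# These are NOT CyberSecEval's actual rules.
INSECURE_PATTERNS = {
    "use of eval": re.compile(r"\beval\s*\("),
    "weak hash (MD5)": re.compile(r"\bhashlib\.md5\s*\("),
    "shell=True in subprocess call": re.compile(r"shell\s*=\s*True"),
}


def find_insecure_patterns(code: str) -> List[str]:
    """Return the names of all rules that match the given code sample."""
    return [name for name, pattern in INSECURE_PATTERNS.items()
            if pattern.search(code)]


def insecure_rate(samples: List[str]) -> float:
    """Return the fraction of samples that trigger at least one rule."""
    if not samples:
        return 0.0
    flagged = sum(1 for sample in samples if find_insecure_patterns(sample))
    return flagged / len(samples)


# Hypothetical model outputs, hard-coded here in place of a real model call.
generated_samples = [
    "import hashlib\ndigest = hashlib.md5(data).hexdigest()",
    "total = sum(values)",
]
rate = insecure_rate(generated_samples)
```

Pattern matching of this kind only catches known bad idioms, so a real evaluation would need a far broader rule set and would likely combine it with other checks.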

Another component of Purple Llama is Llama Guard, a large language model trained to detect potentially harmful or offensive language. Developers can use Llama Guard to test whether their models produce or accept unsafe content, and to filter out prompts that could lead to inappropriate outputs.
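The filtering pattern described above, checking both what a model accepts and what it produces, can be sketched as a thin wrapper around a text generator. This is a minimal sketch under stated assumptions: `is_unsafe` is a hypothetical keyword-based stand-in for a safety classifier such as Llama Guard, and `guarded_generate`, `echo_model`, and the refusal message are names invented for this example, not part of any Meta API.

```python
from typing import Callable

# Hypothetical stand-in for a safety classifier such as Llama Guard.
# A real deployment would query the model; this keyword check is illustrative only.
UNSAFE_MARKERS = ("build a bomb", "steal credentials")

REFUSAL = "Sorry, I can't help with that."


def is_unsafe(text: str) -> bool:
    """Flag text containing any of the illustrative unsafe markers."""
    lowered = text.lower()
    return any(marker in lowered for marker in UNSAFE_MARKERS)


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     classifier: Callable[[str], bool] = is_unsafe) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if classifier(prompt):
        return REFUSAL  # unsafe input is never sent to the model
    response = generate(prompt)
    if classifier(response):
        return REFUSAL  # unsafe output is never returned to the user
    return response


def echo_model(prompt: str) -> str:
    """Hypothetical model used only to exercise the wrapper."""
    return f"You asked: {prompt}"
```

Passing the classifier in as a parameter keeps the wrapper independent of any particular safety model, so the keyword stub could be swapped for a real classifier without changing the control flow.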