MEG recordings are continuously aligned with deep representations of the images, which can then condition image generation in real time.

Credit: Défossez et al.

Recent technological advances have produced new tools for people with impairments or disabilities, supporting physical rehabilitation, social-skills practice, and everyday tasks.

Meta AI researchers have introduced a non-invasive method for decoding perceived speech from brain activity, which could eventually help people who cannot speak to communicate through a computer interface. The approach, described in Nature Machine Intelligence, combines non-invasive brain imaging with machine learning.

“After a stroke or a brain disease, many patients lose the ability to speak,” Jean-Rémi King, a Research Scientist at Meta, told Medical Xpress. “Significant progress has been made in developing neuroprostheses, devices implanted on the motor cortex that let patients control a computer with the help of AI, but implanting them requires brain surgery, with all the risks that entails.”

Most existing methods for decoding speech after brain injury require implanted electrodes, which involve surgery and can be difficult to keep working reliably over the long term. In their recent study, King and his colleagues set out to explore a non-invasive alternative.

“Instead of intracranial electrodes, we use magnetoencephalography (MEG), a non-invasive imaging technique that captures rapid snapshots of brain activity,” King explained. “We then train an AI system to decode these signals into segments of speech.”
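Before any decoding, a continuous MEG recording has to be cut into fixed-length segments that can be matched against segments of speech. The sketch below is illustrative only: it assumes non-overlapping three-second windows (the window length the article later reports worked best) and a hypothetical sampling rate of 120 Hz; the function name `split_into_windows` is our own, not part of Meta's code.

```python
from typing import List


def split_into_windows(recording: List[List[float]], sample_rate_hz: float,
                       window_s: float = 3.0) -> List[List[List[float]]]:
    """Cut a (channels x samples) recording into consecutive, non-overlapping
    windows of `window_s` seconds. Leftover samples at the end are dropped."""
    if not recording:
        return []
    window_len = int(round(window_s * sample_rate_hz))
    if window_len <= 0:
        raise ValueError("window must contain at least one sample")
    n_samples = len(recording[0])
    windows = []
    for start in range(0, n_samples - window_len + 1, window_len):
        # Keep every channel, sliced to the same time span.
        windows.append([channel[start:start + window_len] for channel in recording])
    return windows


# Example: 2 hypothetical channels, 10 s at an assumed 120 Hz -> 3 full windows.
fake_meg = [[float(t) for t in range(1200)] for _ in range(2)]
windows = split_into_windows(fake_meg, sample_rate_hz=120)
print(len(windows), len(windows[0]), len(windows[0][0]))  # 3 2 360
```

Real pipelines typically also filter and clean the signal and may align windows to events such as word onsets; those steps are omitted here.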

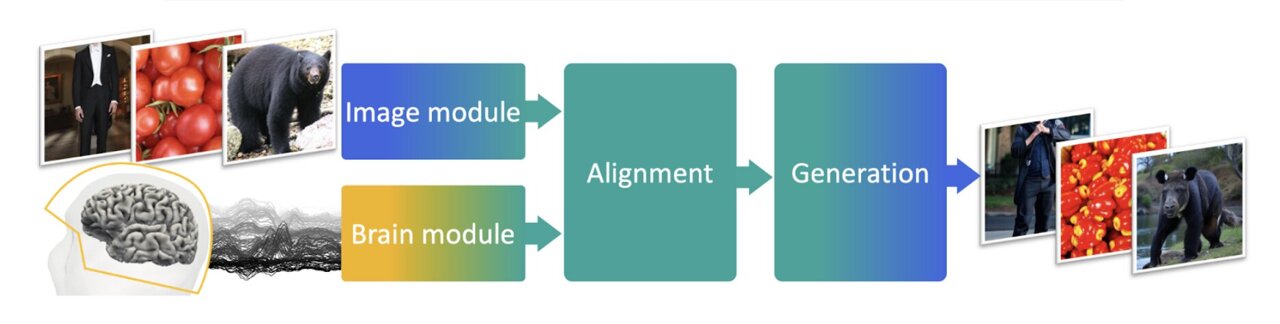

The AI system developed by King’s team has two core modules: a ‘brain module’, which extracts information from MEG recordings of brain activity, and a ‘speech module’, which produces the representations of speech that the system must decode.
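The division of labour between the two modules can be illustrated with a toy sketch: the brain module maps an MEG window to an embedding vector, the speech module supplies one embedding per candidate speech segment, and decoding ranks the candidates by similarity. Everything here is a hypothetical stand-in: the brain module is a single linear projection (the published system uses a deep network), the speech embeddings are hand-written vectors, and cosine similarity is our assumed scoring function.

```python
import math
from typing import List, Sequence


def brain_module(window: Sequence[Sequence[float]],
                 weights: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical brain module: flatten a (channels x samples) window and
    apply one linear projection to get an embedding vector."""
    flat = [x for channel in window for x in channel]
    if any(len(row) != len(flat) for row in weights):
        raise ValueError("each weight row must match the flattened window size")
    return [sum(w * x for w, x in zip(row, flat)) for row in weights]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_candidates(brain_emb: Sequence[float],
                    speech_embs: Sequence[Sequence[float]]) -> List[int]:
    """Indices of candidate speech embeddings, most similar first."""
    scores = [cosine(brain_emb, s) for s in speech_embs]
    return sorted(range(len(speech_embs)), key=lambda i: scores[i], reverse=True)


window = [[1.0, 0.0], [0.0, 1.0]]          # 2 channels x 2 samples (toy data)
weights = [[1, 0, 0, 0], [0, 0, 0, 1]]     # hypothetical learned projection
speech_embs = [[1, 1], [1, -1], [-1, -1]]  # stand-ins for speech-module outputs
emb = brain_module(window, weights)
print(emb, rank_candidates(emb, speech_embs))  # [1.0, 1.0] [0, 1, 2]
```

Framing decoding as ranking means the system never has to generate audio: it only has to pick which known segment best matches the brain signal.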

“By fitting the parameters of these two modules, we can infer, at each moment, what the participant is hearing,” King elaborated.
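One common way to fit such a pair of modules is a contrastive objective: each brain embedding is pushed to be more similar to the embedding of the speech segment that was actually heard than to the other segments in the same batch. The sketch below assumes an InfoNCE-style (CLIP-like) cross-entropy over cosine similarities with a hypothetical `temperature` parameter; it illustrates the idea and is not presented as the exact loss used by King's team.

```python
import math
from typing import List, Sequence


def _normalise(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def info_nce_loss(brain_embs: Sequence[Sequence[float]],
                  speech_embs: Sequence[Sequence[float]],
                  temperature: float = 1.0) -> float:
    """Mean cross-entropy of picking speech_embs[i] for brain_embs[i] among all
    speech embeddings in the batch (the other items act as negatives)."""
    b = [_normalise(v) for v in brain_embs]
    s = [_normalise(v) for v in speech_embs]
    total = 0.0
    for i, bi in enumerate(b):
        logits = [sum(x * y for x, y in zip(bi, sj)) / temperature for sj in s]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]  # -log softmax probability of the true match
    return total / len(b)


brain = [[1.0, 0.0], [0.0, 1.0]]
print(info_nce_loss(brain, [[1.0, 0.0], [0.0, 1.0]]))  # matched pairs: lower loss
print(info_nce_loss(brain, [[0.0, 1.0], [1.0, 0.0]]))  # swapped pairs: higher loss
```

Minimising this loss by gradient descent (not shown) is what would adjust the brain module's parameters so that its outputs line up with the speech module's.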

In a first evaluation, the team applied the system to recordings from 175 participants whose brain activity was measured with MEG or electroencephalography (EEG) while they listened to short stories and spoken sentences. The best results came from three-second MEG segments: from these, the system identified the matching speech segment among more than 1,000 possibilities with up to 41% accuracy on average, and with up to 80% accuracy for some participants.
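Accuracy figures like these come from a retrieval test: for each brain segment, the system ranks all candidate speech segments and is scored as correct if the true one comes out on top (top-1) or among the first k (top-k). The sketch below computes that metric on toy vectors, assuming cosine similarity as the score; it also shows the chance level, which puts the reported numbers in context.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_accuracy(brain_embs: Sequence[Sequence[float]],
                   speech_embs: Sequence[Sequence[float]], k: int = 1) -> float:
    """Fraction of brain embeddings whose true segment (same index) ranks among
    the k most similar of all candidate speech embeddings."""
    hits = 0
    for i, b in enumerate(brain_embs):
        scores = [cosine(b, s) for s in speech_embs]
        ranked = sorted(range(len(speech_embs)), key=lambda j: scores[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(brain_embs)


def chance_level(n_candidates: int, k: int = 1) -> float:
    """Expected top-k accuracy of a decoder that guesses uniformly at random."""
    return k / n_candidates


speech = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # toy candidate segments
brain = [[0.0, 1.0], [0.0, 1.0], [-1.0, 0.0]]   # first one decoded wrongly
print(top_k_accuracy(brain, speech, k=1), top_k_accuracy(brain, speech, k=2))
# 2 of 3 correct at k=1, all 3 correct at k=2
print(chance_level(1000))
```

With 1,000 candidates, random guessing yields 0.1% top-1 accuracy, so 41% is roughly 400 times chance; with more than 1,000 candidates the chance level is lower still.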

“The decoding performance exceeded our expectations: the system often retrieves exactly what participants heard, and when it is wrong, its errors tend to be semantically related to the correct answer,” King said.

The system outperformed the baseline methods it was compared against, suggesting potential for future applications. Because it is non-invasive, it could also be easier to deploy in real-world settings, without surgery or brain implants.

“Our work focuses on fundamental brain research that can inform AI, but we also hope it will eventually help patients whose ability to communicate is limited by paralysis,” King said. “The critical next step is to move from decoding perceived speech to decoding produced speech.”

The system is still at an early stage and will need further refinement before it can be tested in clinical trials. Nevertheless, the work highlights the promise of less invasive technologies for people with speech impairments.

“Our team’s core objective is to understand how the brain works and to connect that understanding with AI, not only for speech but also for visual perception,” King concluded.