One of the primary challenges we face online is the growing prevalence of AI-generated deception. The spread and misuse of generative artificial intelligence tools have led to a surge in fabricated images, videos, and audio.

Telling truth from fiction has become harder as sophisticated AI-generated deepfakes of people ranging from Taylor Swift to Donald Trump circulate widely. Tools like DALL-E, Midjourney, and OpenAI’s Sora let even novices create deepfakes by typing a simple text prompt, which has flooded the internet with deceptive content.

While these synthetic visuals may initially appear harmless, they pose significant risks such as electoral manipulation, identity theft, and deceptive advertising.


Strategies to Combat Deepfake Deception:

Identifying Algorithmic Artifacts

During the nascent stages of deepfake technology, imperfections often betrayed the deceptive nature of the content. Fact-checkers could easily pinpoint anomalies like six-fingered hands or mismatched facial features.

As AI capabilities have advanced, detecting deepfakes has become more difficult. Henry Ajder, an expert on generative AI at Latent Space Advisory, notes that once-reliable signs such as unnatural blinking patterns no longer indicate manipulation.

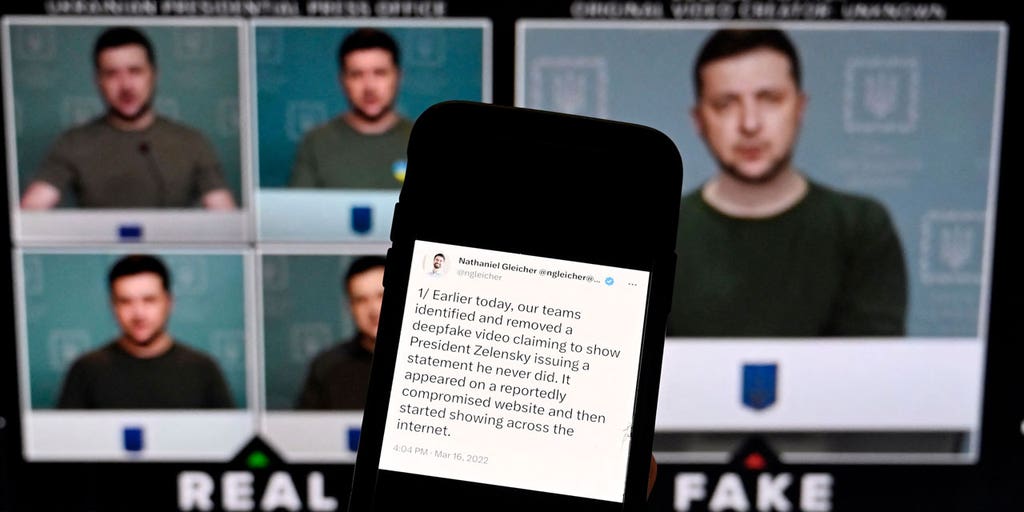

A comparison of an authentic image of Ukrainian President Volodymyr Zelensky in Washington, D.C., and a fabricated image depicting him issuing a misleading directive. (Olivier Douliery/AFP via Getty Images)

However, certain visual cues can still expose AI-generated alterations.

According to Ajder, many AI-generated images have a distinctive glossy finish, especially on faces, that makes skin look unnaturally smooth.

However, he cautions that creative prompting can sometimes eliminate this and other telltale signs of AI manipulation.

Check whether shadows and lighting are consistent. The main subject may be in sharp focus and look convincingly lifelike, while elements in the background look less realistic or polished.

Scrutinizing Facial Features

Face-swapping is one of the most common manipulation techniques. Check whether the skin tone of the face matches the rest of the body and whether the edges of the face look sharp or blurred.

If you suspect that a video of someone speaking has been doctored, look at their mouth. Do their lip movements match the audio convincingly?

Ajder also recommends examining the teeth. Are they clearly defined, or blurry and inconsistent with how teeth look in real life?

According to cybersecurity experts at Norton, AI models may still struggle to render individual teeth, so missing or indistinct outlines between teeth can be a clue.

Contextual Considerations

Context plays a crucial role in detecting deepfakes. Ask whether the scenario shown is plausible.

The journalism nonprofit Poynter advises that if a public figure appears to be doing something exaggerated, implausible, or out of character, the content could be a deepfake.

For instance, would a prominent figure really be wearing an extravagant luxury puffer jacket? If the event were real, would legitimate sources have published additional photos or videos confirming it?

Leveraging AI for Detection

Another approach is to use AI to fight AI.

Microsoft has developed an authenticator tool that analyzes photos and videos to estimate whether they have been manipulated. Chipmaker Intel’s FakeCatcher likewise uses algorithms to assess whether visual content is authentic.

Various online tools promise to identify fakes when you upload a file or paste a URL. However, some, such as Microsoft’s authenticator, are available only to selected partners rather than the general public, because researchers do not want to tip off malicious actors and help them evade detection.

While acknowledging the limitations of these tools, Ajder emphasizes the importance of maintaining a critical mindset rather than relying solely on diagnostic technologies.

Challenges in Deepfake Detection

Despite ongoing efforts, AI advancements continue to outpace detection capabilities. AI models trained on vast internet datasets are producing increasingly realistic content with fewer discernible flaws.

This rapid evolution implies that existing detection methods may become obsolete within a short timeframe.

Experts also caution against placing the burden of spotting deepfakes on ordinary people. As AI-generated content grows more sophisticated, it may fool even trained observers, and people who believe they can reliably spot fakes may develop a false sense of security.