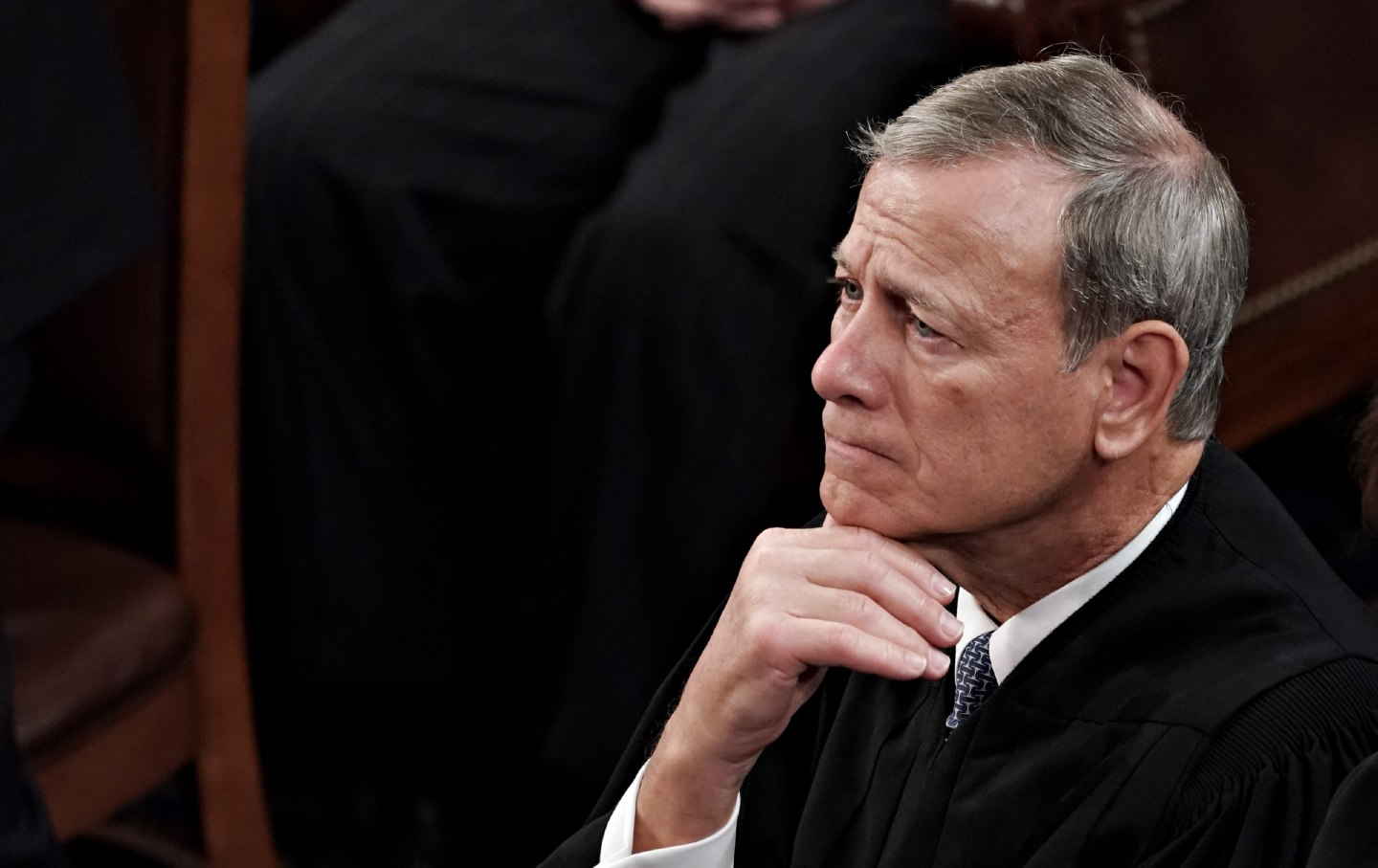

To demonstrate his superiority over automated systems, the chief justice skillfully avoided addressing the prevalent issues, notably Supreme Court corruption, in his annual report. Chief Justice John Roberts attended the State of the Union address at the US Capitol in Washington, DC, on February 7, 2023.

Each year, Chief Justice John Roberts releases a year-end assessment of the state of the national judiciary. However, these reports often fall short of expectations, with Roberts opting for whimsical anecdotes and questionable fashion choices over tackling critical court and national concerns at a “State of the Union” level.

Expectations were high this time around, especially with impending Supreme Court decisions on the eligibility of individuals involved in government overthrow attempts to run for re-election and the legality of applying the rule of law to former presidents. Despite the court’s legitimacy facing unprecedented challenges and internal scandals tarnishing its integrity, Roberts’ report failed to directly confront these pressing issues. While the release of an ethics code in 2023 was a step, it was criticized for its ineffectiveness and lack of transparency. Many hoped that Roberts would seize the opportunity to address or at least rationalize the ethical standing of the judicial branch as it teeters on the brink of uncertainty.

However, these hopes were dashed: Roberts’ 13-page document, released on December 31, focused on the future implications of AI for the federal court system rather than on the ethical concerns surrounding his tenure and the judiciary as a whole.


Recent discussions in the legal community have been dominated by the impact of AI. Professors across various disciplines, including law, are astounded by the potential of AI to generate academic papers as effectively as overburdened graduate students. The use of AI even played a role in the high-profile embarrassment of Michael Cohen, former attorney to Trump, who unknowingly forwarded fictitious legal cases and citations generated by an AI system to his defense attorney. Beyond these incidents, legitimate concerns arise regarding AI, ranging from privacy implications of facial recognition technology to copyright issues related to AI-generated content and the necessity for regulatory frameworks governing AI application in business decision-making processes.

Roberts, however, chose to sidestep these critical constitutional issues, opting instead to focus on a common concern among the aging population: the fear of being replaced by technology. This shift in focus is particularly ironic given Roberts’ history of undermining workers’ rights while now expressing apprehension about the potential replacement of his own white-collar position by machines.

AI poses a unique threat to traditional professions, revealing the legal profession’s foundation as a practice rooted in echoing past interpretations and decisions. With AI’s ability to construct compelling legal arguments based on historical data and precedents, the core function of lawyers is brought into question. It is conceivable that by the end of the decade, legal appeals could be based solely on AI-generated analyses, bypassing the input of human judges or jurors.

Roberts adamantly asserts in his report that AI cannot supplant human judges, a sentiment voiced by many before him. However, his comparison to the use of optical technology in professional tennis tournaments to determine whether serves are in or out falls short of justifying the irreplaceability of human judgment in legal matters.

While experts often champion the accuracy and efficiency of AI in various fields, they are quick to defend the irreplaceability of human judgment in their own domains. Roberts’ reliance on a sports analogy is particularly grating, given that during his confirmation process he pledged impartiality by likening a judge to an umpire. The shift from being an “umpire” to advocating for human discretion that can alter legal outcomes mirrors the defensive stance of a flawed baseball umpire.

Roberts’ argument that human judgment prevails in law due to the existence of “gray areas” seems incongruous, especially when considering the inherent biases that often influence judicial decisions. The perception of objectivity within the Supreme Court is questionable, with decisions often aligning with conservative ideologies. The notion that justices are free from bias is contradicted by their alignment with specific political agendas, casting doubt on the objectivity of human judgment in legal matters.

While an AI-based Supreme Court justice may seem far-fetched, the existing system, which seemingly follows predetermined rules as if it had been trained by watching Fox News for an extended period, leaves much to be desired in terms of fairness and impartiality.

Roberts’ discussion of the “fairness gap” highlights the prevailing perception that human judges are fairer than machines, even when the latter may render more accurate decisions. This disparity in perception underscores the challenges in achieving true justice within the current judicial system, particularly when crucial decisions regarding individuals like Trump are at stake.

In conclusion, the resistance to AI integration in the judiciary is not solely based on technological limitations but also on the potential disruption of established power dynamics and political agendas that currently influence legal outcomes.