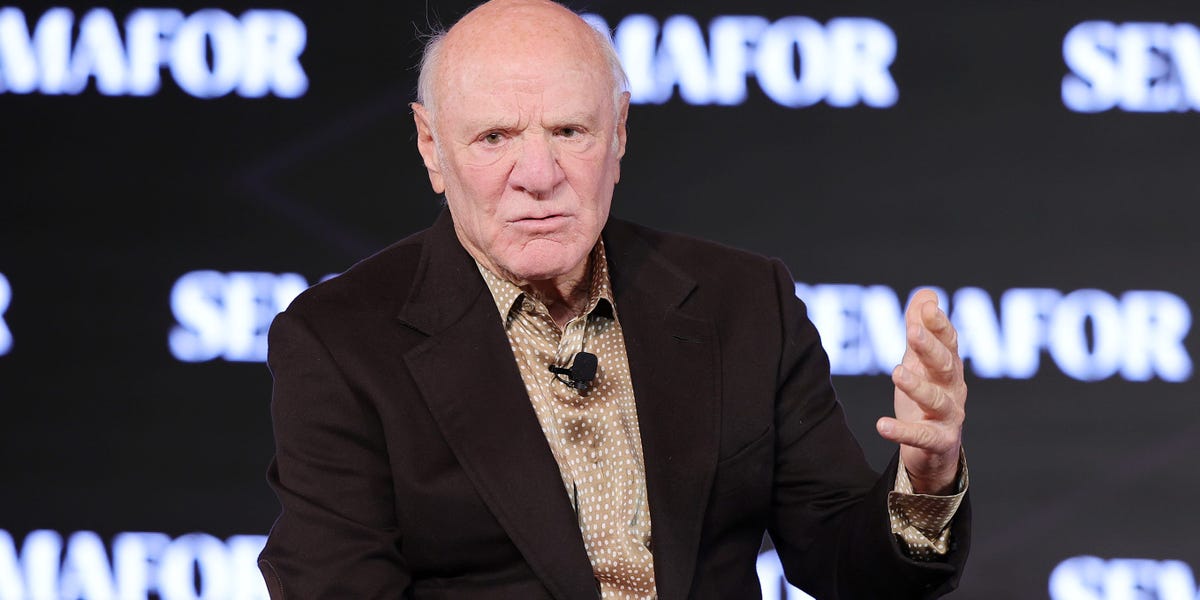

An inquiry has uncovered that Israel utilized an AI tool to pinpoint approximately 37,000 potential targets based on their perceived connections to Hamas [GETTY]

The Israeli military has been using an artificial intelligence (AI) system named ‘Lavender’ to pinpoint targets throughout Gaza and guide its bombing operations, which have killed many of the more than 33,000 Palestinians who have died since October.

According to an investigation by +972 Magazine and Local Call, which draws on intelligence officers with first-hand knowledge of the tool, ‘Lavender’ has played a central role in the extensive bombing of Palestinians in the Gaza Strip during the war.

Sources said the military was willing to accept the deaths of 15 to 20 civilians to kill a single lower-ranking Hamas member, and authorised the deaths of up to 100 civilians to kill a Hamas commander, as in the destruction of an apartment building on 31 October.

The sources indicated that the tool significantly influenced the military’s decision-making process, with the output of the AI machine being treated “as if it were a human decision”.

‘Lavender’ is designed to identify suspected members of Hamas and Palestinian Islamic Jihad (PIJ). In the first weeks of the war, the system flagged around 37,000 Palestinians as “suspects” and marked their homes as eligible for airstrikes, even when family members were present.

‘Systematically targeted’

Although the tool was known to make errors in about 10 percent of cases, in the early stages of the war the army was not required to thoroughly check the targets the machine selected.

“The Israeli army systematically targeted the designated individuals within their homes – often at night when their entire families were present – rather than during active military engagements,” the investigation revealed.

An intelligence officer who served in the war told +972 that it was considered “much simpler” to bomb a family home than to strike suspected militants when they were away from civilians.

“The IDF chose to bomb them [Hamas operatives] in residential settings without hesitation, as the primary option. It’s more convenient to bomb a family’s dwelling. The system is designed to locate them in these scenarios,” the officer remarked.

When the army struck alleged junior militants identified by Lavender, it chose to use unguided munitions, known as “dumb” bombs, which can destroy entire buildings and neighbourhoods and cause far more casualties than precision-guided munitions.

The Israeli military did not deny the tool’s existence, but said Lavender was an information system used by analysts in the target identification process, and stressed that Israel makes efforts to “minimize harm to civilians to the best extent possible given the operational circumstances prevailing at the time of the strike”.

In response to the investigation, the Israeli army stated that “analysts are required to conduct independent reviews to ensure that the identified targets align with the relevant definitions in compliance with international law and additional constraints outlined in IDF directives”.

The investigation comes amid widespread international criticism of Israel’s military campaign in Gaza, where Israeli forces have killed more than 33,000 Palestinians since the war began on 7 October.

Recently, Israeli airstrikes killed seven World Central Kitchen aid workers who were delivering food aid in Gaza, sparking global outrage and condemnation of what has been described as a deliberate attack.

Gaza is facing a severe humanitarian crisis, with aid organisations warning of “catastrophic levels of hunger” as relief operations are suspended following the killings of aid workers.