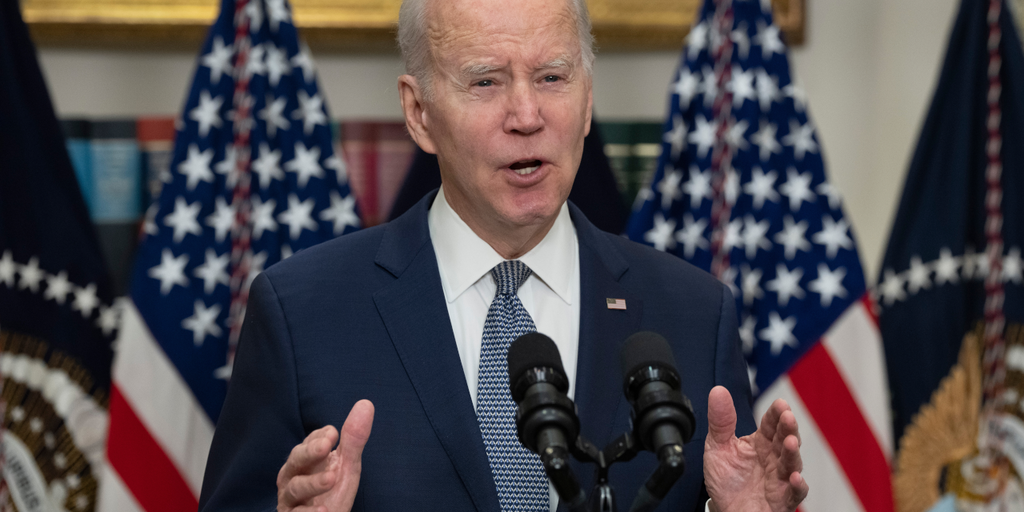

The White House is ramping up efforts to authenticate its official communications after an AI-generated deepfake of U.S. President Joe Biden was used to mislead New Hampshire primary voters last month.

Across the tech industry, engineers and experts are scrambling to find ways to combat AI-generated deepfakes as generative AI creates new challenges. The White House has said it is exploring cryptographic technology to verify that information is genuine.

Ben Buchanan, the White House Special Advisor on AI, recently told Business Insider, "We acknowledge that highly advanced technology facilitates activities like voice replication or video manipulation. We aim to mitigate certain risks while preserving the potential for innovation that comes with more powerful tools."

According to Buchanan, after the Biden administration held discussions with more than a hundred AI developers in 2023, companies agreed to add watermarks to their products before releasing them to the public. Because watermarks can be altered or removed, however, cryptographic verification is gaining traction as an alternative.

"We are working on formulating watermarking standards through the new AI Safety Institute and the Department of Commerce," Buchanan told Yahoo Finance, "to establish clear guidelines and directives on how to tackle these intricate watermarking and content authenticity challenges."

Decrypt reached out for comment, but a White House spokesperson did not immediately respond.

Camera makers Nikon, Sony, and Canon announced in December that they would embed digital signatures in photos taken with their cameras, as part of the effort to combat AI-generated images.

OpenAI, Microsoft, Google, Apple, and Amazon are among the participants in the U.S. AI Safety Institute, which the Biden administration launched recently. According to the administration, the institute grew out of the executive order on AI that Biden signed in October.

Building AI tools to detect synthetic deepfakes has become a booming industry, but some critics argue that this approach may make the problem worse.

"Using AI to detect AI is not a viable solution. It initiates an endless arms race where malicious actors usually have the upper hand," Rod Boothby, co-founder and CEO of identity verification company IDPartner, told Decrypt. "The focus should shift towards enabling individuals to prove their identity online. The logical remedy lies in leveraging verifiable identity."

Boothby pointed to the banking industry's use of "continuous authentication" to verify the identity of an unknown participant in a video conference.

Star Kashman, a cybersecurity and constitutional law expert, emphasized that awareness is key to defending against these threats.

"Enhanced awareness can preempt significant harm," Kashman told Decrypt via email, "especially concerning robocalls and AI-generated phone scams." Knowing about common AI voice scams, in which fraudsters clone a family member's voice to fake a kidnapping, allows people to verify the situation before paying a bogus ransom.

A recent robocall campaign aimed at discouraging Democratic voters in New Hampshire from voting in the primary showed how easily generative AI can be used to manipulate the public.

The fake Biden call was traced to a Texas-based telecommunications company. After issuing a cease-and-desist order to Lingo Telecom LLC, the U.S. Federal Communications Commission adopted a new rule making robocalls that use AI-generated voices illegal.

While acknowledging that government action is needed to address the threat, Kashman stressed that awareness is the first line of defense against AI deepfake scams.

"In the case of sophisticated scams," Kashman said, "awareness alone may not prevent victimization." Still, greater public awareness can pressure lawmakers to pass legislation criminalizing the creation of unauthorized deepfakes.