Artificial intelligence now touches many aspects of daily life, presenting both opportunities and risks. Sam Altman, CEO of OpenAI, the company behind ChatGPT, has raised concerns that advances in AI could outpace human control. For all the innovation AI brings, critics warn that its unchecked growth could pose threats to humans.

Recognizing the significance of the matter, the European Union has taken the lead in establishing comprehensive AI regulations. The European Commission’s draft legislation aims to foster the development of AI that is transparent, comprehensible, ethical, secure, and environmentally friendly. The rules are not intended to impede AI startups, EU Industry Commissioner Thierry Breton has emphasized. The European Commission, the European Parliament, and the Council of member states reached a consensus in so-called “trilogue” negotiations. The proposal is now pending final confirmation and approval by the committee.

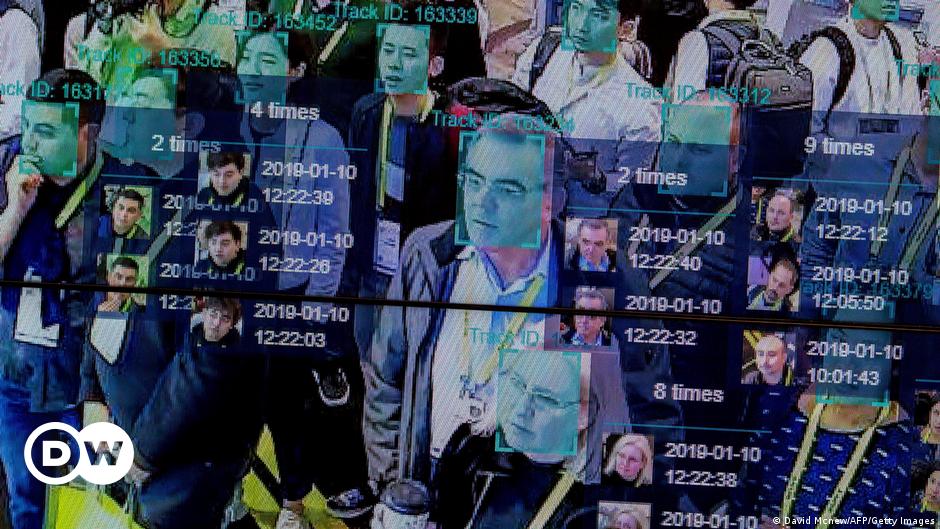

The EU’s approach sorts AI products into four risk classes: unacceptable risk, high risk, generative AI, and limited risk. Certain AI applications, such as those that coerce people into changing their behavior or that identify faces in real time through remote biometric recognition systems, are deemed unacceptable and prohibited. The use of AI to classify individuals based on characteristics like gender, skin color, cultural traits, or behavior is also forbidden.

High-risk AI applications, such as self-driving cars, healthcare systems, power grids, aviation technology, and certain toys, will undergo thorough assessments before market release to safeguard fundamental rights. Additionally, transparency requirements will apply to AI systems used to interpret and apply the law, including the EU’s own AI rules.

Generative AI systems, which produce original content and analyze vast datasets, are treated as moderate risk: providers must be open about how the systems work and how they prevent the creation of illegal content. Limited-risk applications that manipulate visual or audio content, such as deepfakes, along with customer service chatbots, are subject chiefly to transparency and disclosure obligations.

The EU’s new legislation is undergoing finalization by the European Parliament, Council of Ministers, and Commission, with anticipated implementation in April 2024. However, concerns have been raised regarding the potential obsolescence of these regulations due to the rapid evolution of AI technologies.

In response to the proposed regulations, industry associations like the Computer and Communications Industry Association (CCIA Europe) have expressed reservations about potential overregulation and a lack of foresight in the AI Act. Consumer advocates from the European Consumer Organization (BEUC), by contrast, have criticized the legislation for granting businesses excessive leeway to regulate themselves, particularly for toys and virtual assistants.

The EU’s approach to AI regulation differs from that of countries such as the United States, the United Kingdom, and China. While the US and UK issue data protection guidelines for tech companies, the EU’s rules are more stringent. In China, the government heavily restricts AI usage, and certain AI technologies, like ChatGPT, are inaccessible because of censorship.