Music videos have long served as testbeds for new technology, from the 3D characters populating Dire Straits’ “Money for Nothing” to the pioneering CGI morphing techniques employed in Michael Jackson’s “Black or White.”

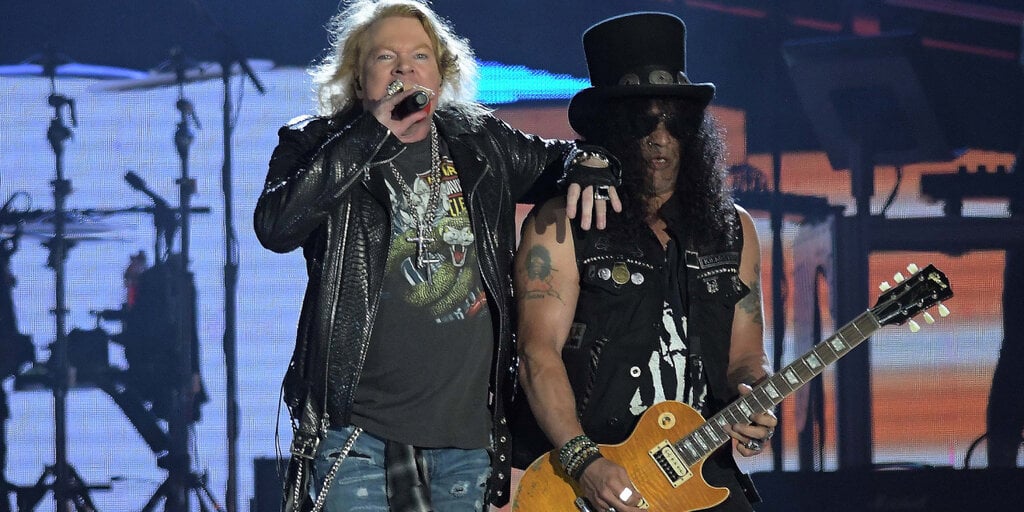

Now, iconic hard rock band Guns N’ Roses has brought generative AI into the mix for the music video accompanying its new track, “The General.”

For the video, the band turned to Creative Works London, the studio that has produced everything from the animations for the band’s live shows to its music videos.

“The General” follows a young boy down the streets of an unsettling, magenta-toned cityscape as he’s menaced by childhood toys that take on a threatening aspect.

The video was originally conceived as a fully animated piece built in Unreal Engine, Creative Works London creative director Dan Potter told Decrypt. About six weeks into production, the band’s manager sent him a link to Peter Gabriel’s DiffuseTogether generative AI competition.

“I’d been tracking that,” he said, adding that he’d been “playing” with the generative AI platform Stable Diffusion since its launch in 2022. “So when he sent that to me, it was like, ‘Oh, I’m already there; I already know what it takes to pull that sort of work off.’”

This AI Love

“I went underground for a bit, literally for five days,” Potter said. “I went into my studio and started exploring everything around utilizing everything we’ve done so far in Unreal Engine—feeding it the aesthetic, feeding it the motion, feeding it the story, feeding the AI all the ideas that we’d developed in those six weeks.”

Creative Works London developed a “kind of hybrid workflow,” Potter said, using concept sketches and Unreal Engine footage as a starting point for Stable Diffusion before “controlling the vibe and the look and feel of it with the prompts.”

Potter added that he trained a hypernetwork (a small auxiliary neural network that steers Stable Diffusion’s output toward a particular style) on the studio’s previous work for Guns N’ Roses to help capture the band’s visual style. “I was able to take some of my sketches and paint-overs and level them up,” Potter explained, adding that “AI is accelerating my process exponentially.”

“I was feeding it the Unreal Engine footage in various ways,” he explained. “I fed it as a layer that it dreamt over, and also as a starting point to dream the rest of the visual out of it.”

Street of Dreams

Where generative AI comes into its own is in creating the uncanny, dreamlike imagery seen throughout the video. As the camera pans and tilts, the AI “dreams” each frame separately, so background elements flicker and morph. Teddy bears disintegrate and re-form as chairs, windows morph into doors and hallways, and biomechanical creatures straight out of a Hieronymus Bosch painting flicker into existence in the background before dissolving into their constituent parts.

“Inputting Unreal Engine renders, and then having it dream over the top of those—that for me was a really interesting use,” Potter said. “Because that was six or seven humans making those renders, and then we were just giving it instantly a different vibe.”

Elsewhere, Potter used Stable Diffusion prompts to turn live-action footage of the band into AI-generated imagery, cutting back and forth between the two. “I’d fed a prompt into it, like, ‘futuristic, psychotic rock star,’” he said, pointing to an image of Axl Rose rendered as, well, a futuristic, psychotic rock star.

Potter took pains to point out that Creative Works London used generative AI to augment the studio’s output rather than to replace the work of artists. “I did want to make it as much of a hand-drawn, human-intervened process as I could,” he said.

To that end, the imagery generated by Stable Diffusion is based on the work of the studio’s artists, and the video also includes plenty of hand-crafted touches, such as graffiti overlaid on the imagery, transitions through a manhole cover emblazoned with the Guns N’ Roses logo, and the logo for “The General” that appears on a crushed drinks can at the outset.

That logo also features a “cheeky hidden easter egg,” Potter said. “I just notched in the ‘L,’ so you have the ‘G,’ the ‘N’ and the ‘R,’ and an ‘A.I.’ at the end.”

Live and Let AI

Potter said that he envisions AI as a “creative tool” rather than a replacement for artists.

“I think the problem a lot of artists have with AI is that it almost says, ‘Ta-da! There’s your finished image,’” he explained. “And it’s a really dangerous place for a creative to be, where you think, ‘Well, we can just put a prompt in and you get the result.’”

With the video for “The General,” he added, “I was in the unique position that it was only something I was able to do.”

“What I wanted to do with AI was to go, ‘If you’re entrusting me with such a controversial thing, then who better than Guns N’ Roses, the most dangerous band in the world, to go, “Let’s try AI”?’” he said. “Because it is a creative tool at the end of the day, I wanted to make it something that came in every sense from within the band.”

Following “The General,” Creative Works London is looking at other ways to integrate AI into Guns N’ Roses’ output, including combining AI with iMAG (image magnification), the giant screens that show live close-ups of the performers during concerts. “What we’ve been doing with Guns’ show (we haven’t really pulled it off, because the teams aren’t ready for it) is to drop real-time content in the iMAG,” he said.

The idea, he explained, is to “have Axl interacting with content that’s being fed from content in the main screen, but in the iMAG,” with augmented reality elements appearing in front of him and behind him. “What we’re trying to do there is some kind of halfway house, so if you’re in a show and you put goggles on, you can see all this real-time content flowing around the band.”

He suggested that, in the future, live performances could incorporate augmented reality elements, “where people can just wear those glasses and then see the content explode beyond the stage and around them, in this kind of DMT-like hallucination.”

As for the future of artists in a world of generative AI, Potter said, “We felt like we owed it to everyone to just say, ‘A significant team of artists was behind this—no artist died making this.’” He added that AI is “not going to kill the artist, it’s just going to amplify and augment what we already do. I’d say the future is about us being more like guides.”

Edited by Andrew Hayward