Samantha Stokes

- Google is developing Gemini 1.5, an advanced large language model.

- Gemini 1.5 offers significant enhancements over its predecessor, particularly in how much information, across diverse data types, it can process at once.

- The new model uses a ‘mixture of experts’ approach to improve efficiency and has a much larger context window.

Google’s recent announcement of Gemini 1.5 has intensified competition among major tech companies and emerging startups in artificial intelligence.

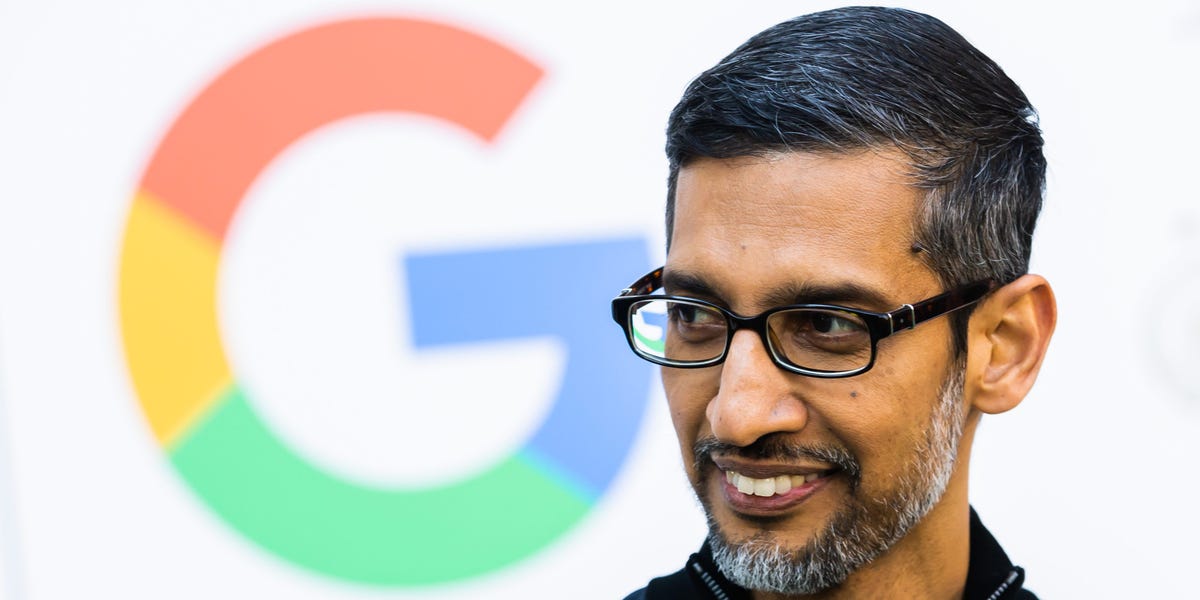

Google, which is owned by Alphabet Inc., revealed its plans for Gemini 1.5 in a blog post by Sundar Pichai, CEO of Google and Alphabet, and Demis Hassabis, CEO of Google DeepMind. The announcement comes shortly after the launch of the original Gemini, which was aimed at rivaling OpenAI’s GPT-4 and other emerging language models.

Gemini is a multimodal AI model that can process several data formats, including text, images, audio, video, and code. Designed primarily as a versatile business tool and personal assistant, it reflects Google’s ongoing advances in AI.

Gemini 1.5 is a significant step forward from its predecessor. Here are some key features of the new version:

Implementation of a ‘Mixture of Experts’ Model

Gemini 1.5 is built on a “mixture of experts” (MoE) architecture, which improves speed and efficiency. Instead of running the entire model for every query, an MoE model is divided into smaller “expert” networks and activates only the ones most relevant to a given input.
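Google has not published how Gemini 1.5 routes inputs between its experts, so the following is only a minimal sketch of the general technique, assuming the common “top-k gating” design: a small router scores every expert, only the k highest-scoring experts run, and their outputs are combined using softmax weights. All names here (`ToyMoELayer`, `top_k`, the random linear “experts”) are hypothetical illustrations, not Gemini internals.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class ToyMoELayer:
    """Hypothetical sparse MoE layer: a router picks top_k of num_experts per input."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Each "expert" is just a random dim x dim linear map in this toy version.
        self.experts = [random_matrix(dim, dim, rng) for _ in range(num_experts)]
        # The router (gating network) produces one score per expert.
        self.router = random_matrix(num_experts, dim, rng)
        # Counts how many times each expert was actually evaluated.
        self.calls = [0] * num_experts

    def forward(self, x):
        scores = matvec(self.router, x)
        # Keep only the top_k highest-scoring experts; the others are never run.
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            self.calls[i] += 1
            y = matvec(self.experts[i], x)
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
out, chosen = layer.forward([0.5, -1.0, 0.25, 2.0])
print(f"ran experts {chosen}; {sum(layer.calls)} of {len(layer.experts)} experts evaluated")
```

In this toy run only 2 of the 8 experts do any work for the input, which is the source of the efficiency gain the article describes: compute per query scales with `top_k`, not with the total number of experts.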

Expanded Context Window Capabilities

A model’s context window determines how much information it can take in at once. That information, whether words, images, video, audio, or code, is broken into units called tokens, so the size of the context window, measured in tokens, limits how much material the model can analyze in a single request.

While the original Gemini could handle up to 32,000 tokens, Gemini 1.5 Pro offers a context window of up to 1 million tokens, initially available in a limited preview. That is enough to process large amounts of information in one go, such as 1 hour of video, 11 hours of audio, codebases with more than 30,000 lines of code, or over 700,000 words.
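To make the token figures concrete, here is a rough back-of-the-envelope sketch. It assumes a fixed words-to-tokens ratio taken from the article’s own numbers (1 million tokens for roughly 700,000 words, about 1.43 tokens per word); real tokenizers vary by language and content, so the helper names `estimate_tokens` and `fits` and the ratio itself are illustrative assumptions only.

```python
import math
from fractions import Fraction

# Assumption: a rough ratio implied by the article's figures
# (1,000,000 tokens ~ 700,000 words). Real tokenizers vary by language and content.
TOKENS_PER_WORD = Fraction(1_000_000, 700_000)  # = 10/7, about 1.43 tokens per word

# Context-window sizes quoted in the article (1.5 Pro: "up to" 1 million tokens).
CONTEXT_WINDOWS = {
    "Gemini 1.0": 32_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def estimate_tokens(word_count):
    """Estimate tokens for a text of `word_count` words, rounding up."""
    # Fraction keeps the arithmetic exact, so 700,000 words maps to exactly 1,000,000 tokens.
    return math.ceil(word_count * TOKENS_PER_WORD)


def fits(word_count, model):
    """True if the estimated token count fits in the model's context window."""
    return estimate_tokens(word_count) <= CONTEXT_WINDOWS[model]


for words in (20_000, 30_000, 700_000):
    verdicts = ", ".join(f"{m}: {'fits' if fits(words, m) else 'too long'}" for m in CONTEXT_WINDOWS)
    print(f"{words:,} words ~ {estimate_tokens(words):,} tokens -> {verdicts}")
```

Under this assumption, a 30,000-word document (about 42,858 tokens) would already overflow the original 32,000-token window, while it would use only a small fraction of the 1-million-token window.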

Enhanced Performance Relative to Previous Versions

In comparative testing, Gemini 1.5 Pro outperformed Gemini 1.0 Pro on 87% of the benchmarks Google uses to develop its large language models. In the “needle in a haystack” evaluation, in which a small piece of text is placed inside a long block of data and the model is asked to find it, Gemini 1.5 Pro located the embedded text 99% of the time, in blocks of up to 1 million tokens.
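The structure of a needle-in-a-haystack test can be sketched in a few lines. This is a hypothetical harness, not Google’s actual protocol: the passphrase, filler words, haystack lengths, insertion depths, and both stand-in “models” are assumptions made for illustration. The idea is to hide one known fact at various depths inside contexts of various lengths, ask for it back, and report the fraction of trials answered correctly.

```python
import random

FILLER = ["the", "quick", "brown", "fox", "jumps", "over", "a", "lazy", "dog"]
NEEDLE = "The secret passphrase is BLUE-HERON-42."  # hypothetical hidden fact
QUESTION = "What is the secret passphrase?"
ANSWER = "BLUE-HERON-42"


def build_haystack(length, depth, rng):
    """Return `length` filler words with NEEDLE inserted at fractional `depth` (0.0 = start, 1.0 = end)."""
    words = [rng.choice(FILLER) for _ in range(length)]
    words.insert(round(depth * length), NEEDLE)
    return " ".join(words)


def run_needle_eval(model, lengths, depths, seed=0):
    """Fraction of (length, depth) trials in which the model's reply contains ANSWER."""
    rng = random.Random(seed)
    trials = successes = 0
    for length in lengths:
        for depth in depths:
            reply = model(build_haystack(length, depth, rng), QUESTION)
            trials += 1
            if ANSWER in reply:
                successes += 1
    return successes / trials


def toy_full_context_model(context, question):
    """Stand-in 'model' that sees the whole context and does a literal lookup (ignores `question`)."""
    marker = "passphrase is "
    idx = context.find(marker)
    return context[idx + len(marker):].split()[0] if idx != -1 else "I don't know."


def make_truncated_model(window_words):
    """Stand-in 'model' that only sees the last `window_words` words of its input."""
    def model(context, question):
        visible = " ".join(context.split()[-window_words:])
        return toy_full_context_model(visible, question)
    return model


lengths, depths = [1_000, 10_000], [0.0, 0.25, 0.5, 0.75, 1.0]
print(run_needle_eval(toy_full_context_model, lengths, depths))
print(run_needle_eval(make_truncated_model(500), lengths, depths))
```

The truncated stand-in misses every needle that falls outside its 500-word view, which is the kind of failure this test is designed to expose; a model scoring 99% is retrieving facts from almost anywhere in its context.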

Gemini 1.5 Pro also shows strong “in-context learning”: it can pick up a new skill from information supplied in a long prompt, without additional fine-tuning. In one test, testers gave the model a grammar manual for a language with very few speakers, and it learned to translate English into that language at a level similar to a person learning from the same material.

Stringent Safety Testing Procedures

As AI capabilities advance, concerns regarding safety implications, ranging from misuse to ethical considerations, have grown. Google emphasizes that Gemini 1.5 underwent rigorous ethics and safety evaluations before its broader deployment. The company has conducted thorough research on AI safety risks and developed strategies to address and mitigate potential harms associated with the technology.