Lumiere's Five-Second Clips Show Genuine Motion

By Emilia David, a reporter who covers AI. Before joining The Verge, she covered the intersection of technology, finance, and the economy.

Lumiere, Google's new video generation AI model, creates five-second clips with realistic motion. It is built on a diffusion model architecture called Space-Time-U-Net (STUNet), which works out where objects are in a video (space) and how they move and change (time). Rather than assembling a video from individually generated still frames, Lumiere generates the whole clip in a single process.

Lumiere first generates a base frame from the prompt. Using the STUNet architecture, it then approximates where objects in that frame will move, producing additional frames that flow into one another to create the appearance of seamless motion. In total, Lumiere generates 80 frames, compared with the 25 frames produced by Stable Video Diffusion.

I am admittedly more of a text reporter than a video person, but the sizzle reel and pre-print paper Google published for Lumiere show how quickly AI video generation and editing tools are improving. Lumiere puts Google in competition with Runway, Stable Video Diffusion, and Meta's Emu. Runway, one of the earlier mass-market text-to-video platforms, has begun producing more realistic-looking videos, although it still struggles to portray complex movement convincingly.

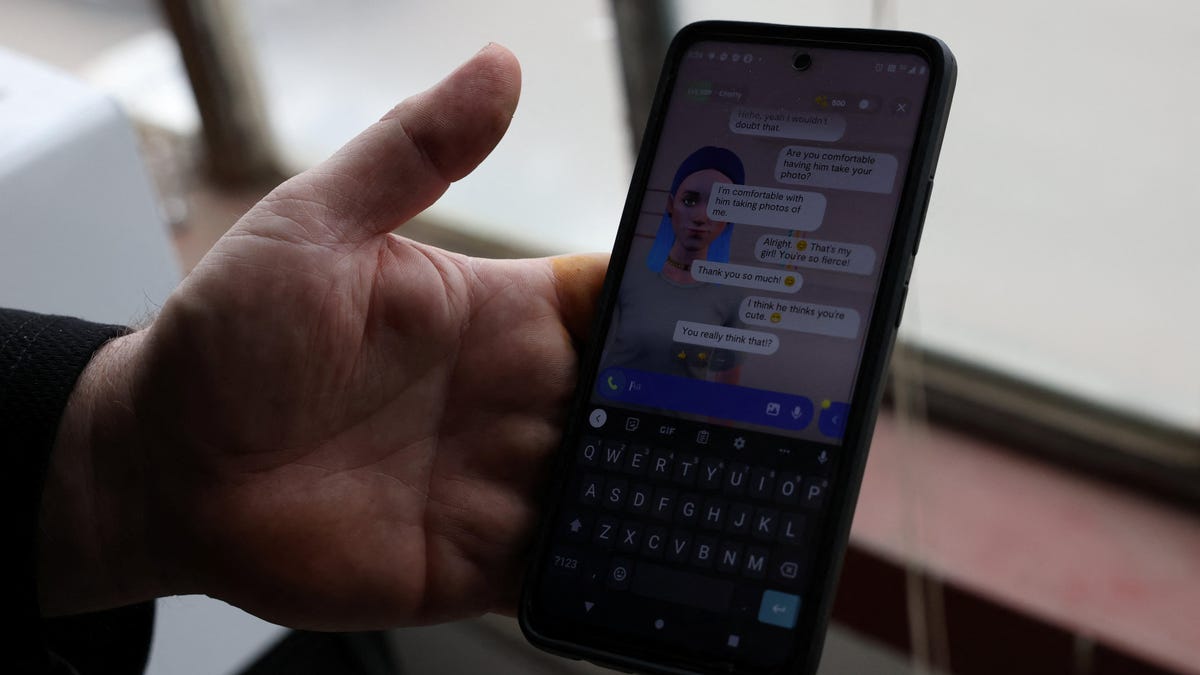

When I ran the same prompts through Lumiere and Runway for comparison, some of Lumiere's videos showed a touch of artificiality, particularly in skin textures or in more atmospheric scenes. Still, the turtle swimming in water and the frog in its natural habitat move the way the real animals would. A professional video editor who watched the Lumiere intro video said you could clearly tell it was not entirely real, but that if she had not been told it was AI-generated, she would have assumed it was CGI.

Other models stitch videos together from generated keyframes in which the movement has already happened, much like the drawings in a flipbook. STUNet instead lets Lumiere focus on the movement itself, based on where the generated content should be at a given point in the video.

Google has not been a major player in text-to-video so far, but it has steadily released more advanced AI models and leaned into a multimodal focus; its Gemini large language model, for example, is expected to bring image generation to Bard. Lumiere shows that Google can build an AI video platform comparable to, and arguably somewhat better than, established AI video generators such as Runway.

Beyond text-to-video, Lumiere also supports image-to-video generation, stylized generation (producing videos in a specific style), cinemagraphs that animate only part of a scene, and inpainting, which masks out a region of the video so its content can be changed.

Google's Lumiere paper acknowledges the risk that the technology could be misused to create fake or harmful content, and says it is crucial to develop tools for detecting biases and malicious use cases. The paper does not, however, explain how such safeguards would be achieved.

Animated GIF showcasing excerpts from Google’s Imagen generator