A video showcasing Google’s AI model may not be what it initially appears.

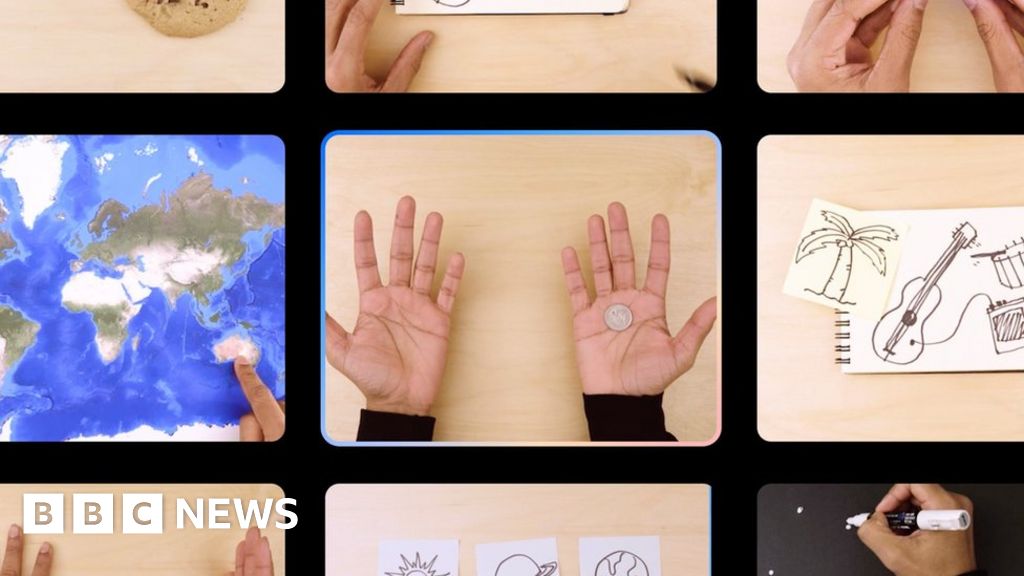

Google’s Gemini demo video has drawn significant attention, with 1.6 million views on YouTube. However, Google has clarified that the interactions shown were sped up for the demonstration and do not reflect how quickly the model responds in real time.

Google has acknowledged the gap between the video and reality: the model was not interpreting live footage or spoken messages as the video suggests. A Google blog post explains how the video was made, confirming that the team prompted the model with still image frames taken from the footage, combined with text prompts.

In a statement to the BBC, Google confirmed that the video does not show a real-time interaction but was produced by combining images with text prompts. According to Google, the video, titled “Hands-on with Gemini,” was made to showcase the range of the model’s capabilities and to inspire developers.

Although the video appears to show the AI answering users’ questions in real time, Google disclosed that its object recognition relied on still images and text prompts rather than instantaneous analysis.

The model shown in the video is presented as having capabilities similar to those of OpenAI’s GPT-4, and its debut comes shortly after Sam Altman’s return as CEO of OpenAI, sharpening the rivalry between the two companies.

As Google develops the next generation of its AI models, competition with OpenAI continues to intensify. The Alpha Terms governing use of Google’s AI stress that the service is experimental and that Google’s service obligations are limited, so users are urged to exercise discretion.

Ultimately, the video offers a glimpse of how quickly artificial intelligence is advancing and how intensely companies are competing in the field. The Alpha Terms, meanwhile, are a reminder that the technology is still experimental and evolving.