After extensive ‘final’ negotiations lasting nearly three days, European Union lawmakers have reached a political agreement on a risk-based framework for regulating artificial intelligence. The milestone comes more than two and a half years after the Commission first proposed the framework in April 2021, and follows months of difficult three-way negotiations to finalize the deal. The agreement signals that a comprehensive EU AI law is now within reach.

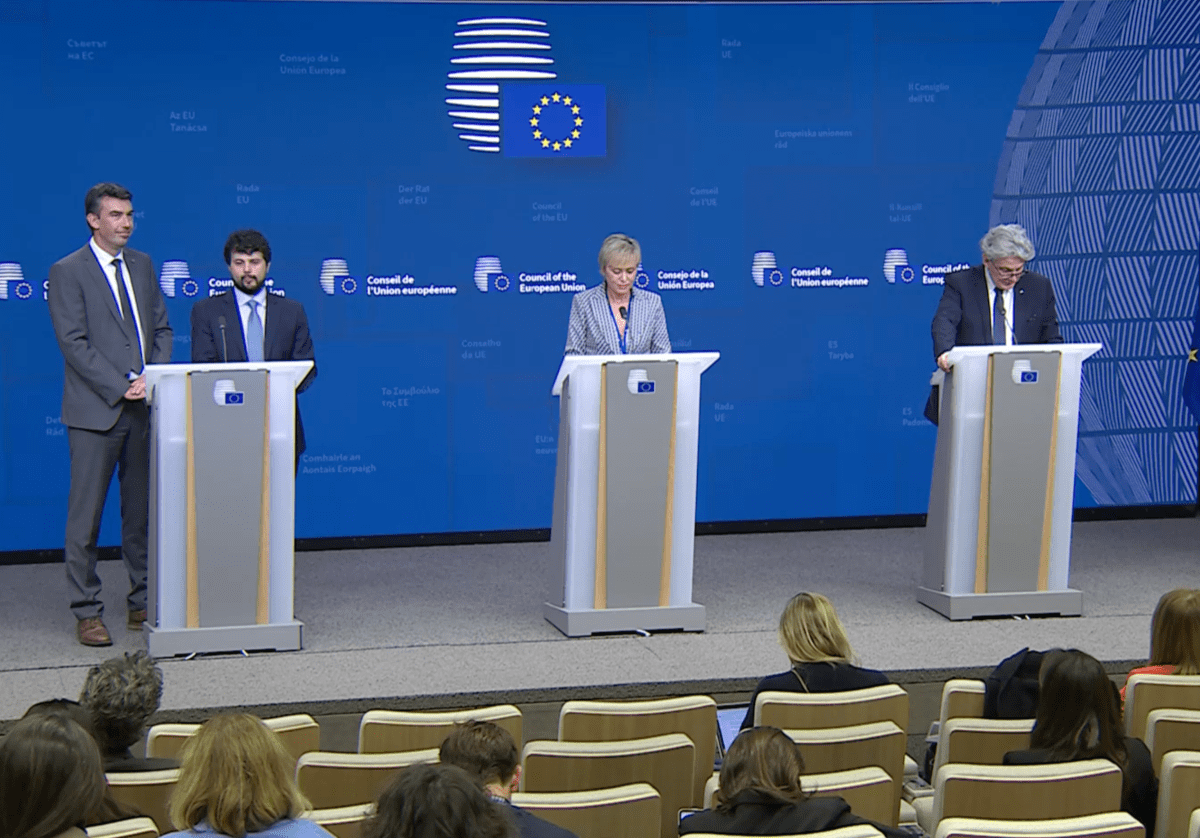

At a draining press conference held late on Friday night and running into the early hours of Saturday local time, key representatives of the European Parliament, the Council, and the Commission, the three institutions involved in the talks, described the agreement as hard-won, a pivotal accomplishment, and historic, respectively.

In a tweet announcing the news, Ursula von der Leyen, president of the European Commission, hailed the political agreement as a “global first,” describing it as a unique legal framework for developing trustworthy AI while safeguarding the rights of people and businesses.

While the full details of the agreement await the compilation and publication of a final text, a press release from the European Parliament confirms that the deal bans several uses of AI, including biometric categorization systems that rely on sensitive characteristics, untargeted scraping of facial images to build facial recognition databases, emotion recognition in the workplace and in educational institutions, social scoring, manipulation of human behavior, and exploitation of people's vulnerabilities.

The agreement also places strict limits on law enforcement's use of remote biometric identification in publicly accessible spaces: such use is subject to prior judicial authorization and restricted to a strictly defined list of crimes. High-risk AI systems will be subject to mandatory fundamental rights impact assessments, a requirement that also applies to the insurance and banking sectors. AI systems used to influence elections and voter behavior are classified as high-risk as well. Citizens will have the right to file complaints about AI systems and to receive explanations of decisions based on high-risk AI systems that affect their rights.

In addition, the agreement introduces a two-tier system for regulating general-purpose AI (GPAI) systems. All GPAI models, including the foundation models that underpin them, must meet transparency requirements, while high-impact models face stricter obligations: they must assess and mitigate systemic risks, conduct model evaluations, ensure cybersecurity, and report on their energy efficiency.

To support innovation, the agreement provides for regulatory sandboxes and real-world testing, set up by national authorities, so that startups and SMEs can develop and train AI before placing it on the market. Non-compliance may result in fines ranging from €7.5 million or 1.5% of global turnover up to €35 million or 7% of global turnover, depending on the infringement and the size of the company.

The AI Act will take effect in phases, with separate deadlines for the bans on prohibited uses, for the transparency and governance requirements, and for the remaining obligations. The law is expected to apply in full by 2026.

Carme Artigas, Spain’s secretary of state for digitalization and artificial intelligence, described the agreement as a significant achievement for digital policy in Europe and around the world, highlighting the regulatory certainty it offers European developers and startups.

The EU’s collaborative effort to establish comprehensive AI rules reflects a human-centric approach that upholds fundamental rights and European values. By seeking to balance the protection of rights with support for innovation, the legislation could serve as a blueprint for global convergence on how to address the challenges posed by artificial intelligence.