Shortly after Apple Inc. published a paper detailing its work on multimodal LLMs, xAI Corp., the artificial intelligence startup led by Elon Musk, today released the weights and architecture of its Grok-1 large language model as open source.

Musk had initially indicated that xAI would open-source Grok on March 11. Today’s release of the model’s architecture and weights is the company’s first open-source release.

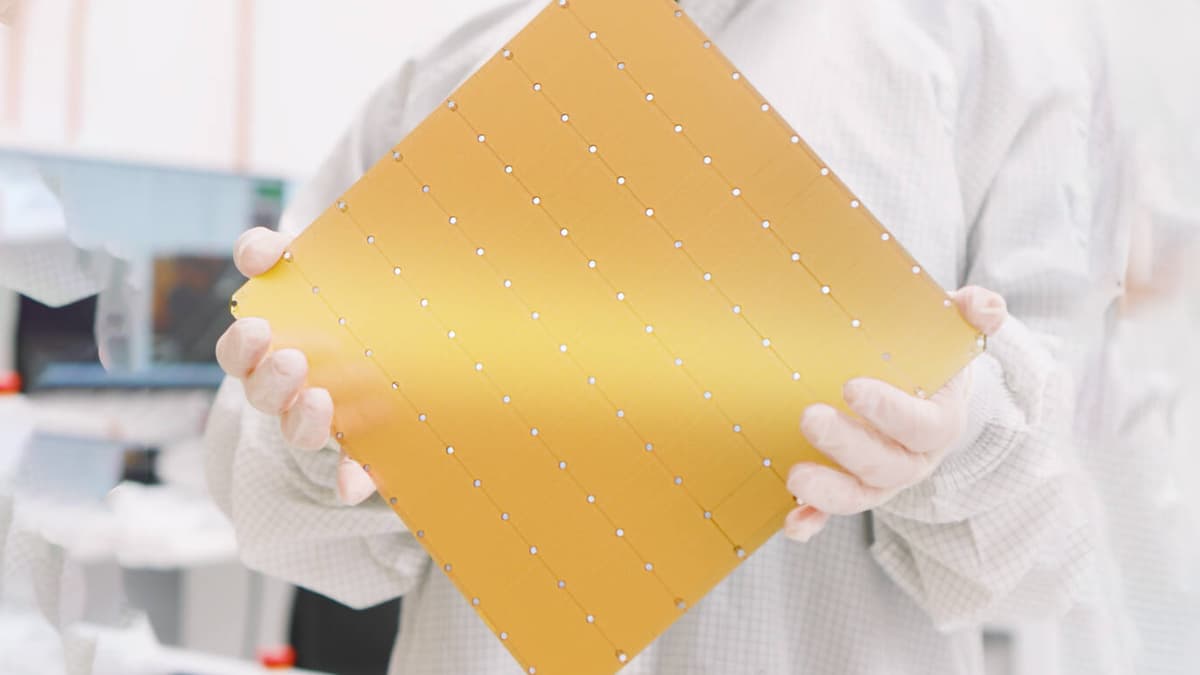

The release describes how the layers and nodes in Grok’s network are arranged. It also includes the base model weights: the parameters that were adjusted during training to encode what the model learned and that determine how input data is transformed into output.

Grok-1 is a 314-billion-parameter “Mixture-of-Experts” model that xAI trained from scratch. A Mixture-of-Experts model combines the outputs of multiple specialized sub-models, or “experts,” to produce its final prediction, so that each expert can specialize in particular tasks or subsets of the data.
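The idea of combining expert outputs can be illustrated with a toy example. The sketch below is not xAI's code: the class name `ToyMoELayer`, the choice of 8 experts with 2 active per input, the random weights, and the use of top-k routing (one common Mixture-of-Experts variant) are all assumptions made purely for illustration. A small "router" scores every expert for a given input, the highest-scoring experts are run, and their outputs are blended using softmax weights.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class ToyMoELayer:
    """Hypothetical top-k Mixture-of-Experts layer with random weights."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one weight row per expert, producing one score per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a plain dim x dim linear map in this sketch.
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def route(self, x):
        """Pick the top_k experts for x and compute their mixing weights."""
        scores = matvec(self.router, x)
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[:self.top_k]
        gates = softmax([scores[i] for i in chosen])
        return chosen, gates

    def forward(self, x):
        """Run only the chosen experts and blend their outputs."""
        chosen, gates = self.route(x)
        out = [0.0] * len(x)
        for idx, gate in zip(chosen, gates):
            expert_out = matvec(self.experts[idx], x)
            out = [o + gate * v for o, v in zip(out, expert_out)]
        return out


# Illustrative sizes only; they are not taken from the Grok-1 release.
layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
x = [0.5, -1.0, 0.25, 2.0]
chosen, gates = layer.route(x)
output = layer.forward(x)
print("experts used:", chosen)
print("mixing weights:", gates)
print("output:", output)
```

Because only the selected experts run for each input, a model built this way can hold many parameters while using just a fraction of them per prediction.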

The release is the raw base model checkpoint from Grok-1’s pre-training phase, which concluded in October 2023. According to the company, “the model has not been fine-tuned for any specific application, such as dialogue.” The accompanying blog post was brief and offered no further details.

In July 2023, Musk announced that he had founded xAI to compete with offerings from tech giants such as Google LLC and OpenAI. Its first model, Grok, is said to be inspired by Douglas Adams’ “The Hitchhiker’s Guide to the Galaxy” and is designed to give comprehensive answers and even suggest relevant questions.

Separately, Apple, the company co-founded by Steve Jobs, quietly released a paper on Thursday describing its work on MM1, a family of multimodal LLMs designed for image captioning, visual question answering, and natural language inference.

The paper describes MM1 as a family of multimodal models with up to 30 billion parameters that achieves competitive performance on a range of established multimodal benchmarks after supervised fine-tuning. The researchers argue that multimodal large language models are the future of foundation models, offering enhanced capabilities and a basis for further advances.

A multimodal LLM handles several types of data, such as text, images, and audio, and combines them in a single AI system that can understand the inputs and generate responses, which lets it perform more complex tasks. Apple’s researchers believe their work is a breakthrough that will help scale these models to larger datasets with improved reliability and performance.

Apple previously open-sourced Ferret, a multimodal LLM, with little fanfare in October. The release drew wider attention in December.