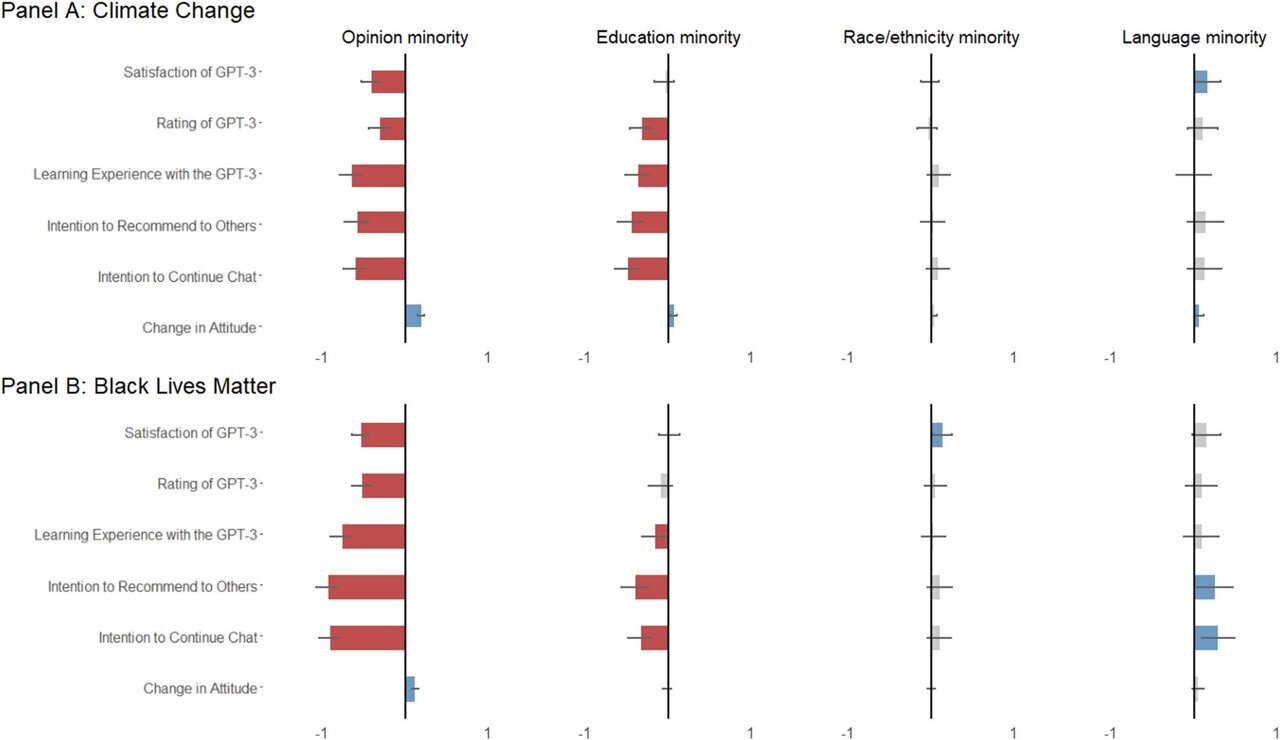

People who were skeptical of human-caused climate change or the Black Lives Matter (BLM) movement and who chatted with a popular AI chatbot came away disappointed with the experience. After the conversation, however, they were more supportive of the scientific consensus on climate change or of BLM. Researchers studying how these AI systems handle conversations with people from different cultural backgrounds reported the finding.

People are good at adjusting to their conversation partners’ social cues and expectations, and they now increasingly talk with large language models designed to emulate human communication. A team of researchers at the University of Wisconsin-Madison studied how GPT-3, a large language model, interacts with users from a range of cultural backgrounds. GPT-3 was the precursor to ChatGPT, the widely used chatbot. In late 2021 and early 2022, more than 3,000 participants held real-time text conversations with GPT-3 about climate change or BLM.

Kaiping Chen, a professor of life sciences communication at UW-Madison, said the goal of interactions, whether between people or between agents, is to increase each side’s understanding of the other’s perspective. Chen and her co-authors, Yixuan “Sharon” Li, a UW-Madison professor who studies AI safety and reliability, and students Anqi Shao and Jirayu Burapacheep (Burapacheep is now a graduate student at Stanford University), published their findings in the journal Scientific Reports.

Each participant talked with GPT-3 through a chat system that Burapacheep designed and was instructed to discuss either BLM or climate change. The average conversation ran about eight back-and-forth turns, and most participants reported similar levels of satisfaction afterward.

Chen noted that users’ ratings of their experience did not differ significantly by gender, race, or ethnicity. The larger differences appeared across participants’ opinions on the contentious topics and across education levels. Participants who agreed less with the mainstream views on climate change or BLM were more dissatisfied with their GPT-3 conversations.

Despite their lower satisfaction, these participants shifted their views on the contentious issues: people who had been skeptical of human-caused climate change or BLM were more supportive after the conversation. GPT-3 also handled the two topics differently, offering more justification for human-caused climate change while acknowledging and responding respectfully to skepticism about BLM.

Chen stressed that such interactions are about fostering understanding and bridging differences in perspective. Changing opinions is not always the goal, but dialogues like these can help promote empathy, cultural awareness, and constructive conversation in society.